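⭐ This post is part of the hands-on Terraform T101 study (4th cohort) led by 가시다(gasida).

It draws on the book '테라폼으로 시작하는 IaC'.

Source code and screenshots in this post may contain **bold** or '''code-shell''' markup.

While looking for providers that can be used with Terraform, I could not find much material covering Confluent Cloud, so I studied it myself and wrote up these notes.

This post may contain mistakes! It explains what Confluent is and walks through a simple demo (account creation, Kafka cluster deployment, and a producer-consumer exercise).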

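What is Confluent?

Confluent is a managed cloud service that offers open-source Apache Kafka and Apache Flink as managed services.

Compared with running open-source Kafka yourself, it is easier to manage, and you can use the additional features that Confluent provides. This post walks through a simple demo.

Features of Confluent Cloud

Confluent Cloud runs on the infrastructure of several cloud providers (AWS, GCP, Azure, and so on).

For example, a cluster is deployed in a specific AWS region or a specific Microsoft Azure region, while Confluent Cloud manages it.

As a result, the code differs slightly depending on the cloud provider.

The differences are smaller than you might expect, though. For example, AWS and Microsoft Azure look like this: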

# AWS
resource "confluent_kafka_cluster" "basic" {
  display_name = "basic_kafka_cluster"
  availability = "SINGLE_ZONE"
  cloud        = "AWS"
  region       = "us-east-2"
  basic {}

  environment {
    id = confluent_environment.development.id
  }

  lifecycle {
    prevent_destroy = true
  }
}

# Azure
resource "confluent_kafka_cluster" "basic" {
  display_name = "basic_kafka_cluster"
  availability = "SINGLE_ZONE"
  cloud        = "AZURE"
  region       = "centralus"
  basic {}

  environment {
    id = confluent_environment.development.id
  }

  lifecycle {
    prevent_destroy = true
  }
}
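Since only cloud and region differ between the two blocks, one way to avoid duplicating the resource is to lift those two values into variables. The following is a minimal sketch and not part of the official examples: the variable names cloud and region, their defaults, and the confluent_environment.development resource are assumptions made for illustration.

```hcl
# Hypothetical variables (names and defaults are assumptions for this sketch).
variable "cloud" {
  description = "Cloud provider that hosts the Kafka cluster"
  type        = string
  default     = "AWS"

  validation {
    condition     = contains(["AWS", "AZURE", "GCP"], var.cloud)
    error_message = "The cloud value must be one of AWS, AZURE, or GCP."
  }
}

variable "region" {
  description = "Region of the selected cloud, e.g. us-east-2 for AWS or centralus for AZURE"
  type        = string
  default     = "us-east-2"
}

# Environment referenced by the cluster below (assumed for this sketch).
resource "confluent_environment" "development" {
  display_name = "Development"
}

resource "confluent_kafka_cluster" "basic" {
  display_name = "basic_kafka_cluster"
  availability = "SINGLE_ZONE"
  cloud        = var.cloud
  region       = var.region
  basic {}

  environment {
    id = confluent_environment.development.id
  }
}
```

With this layout, switching to Azure would be a matter of passing different values, for example terraform plan -var cloud=AZURE -var region=centralus.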

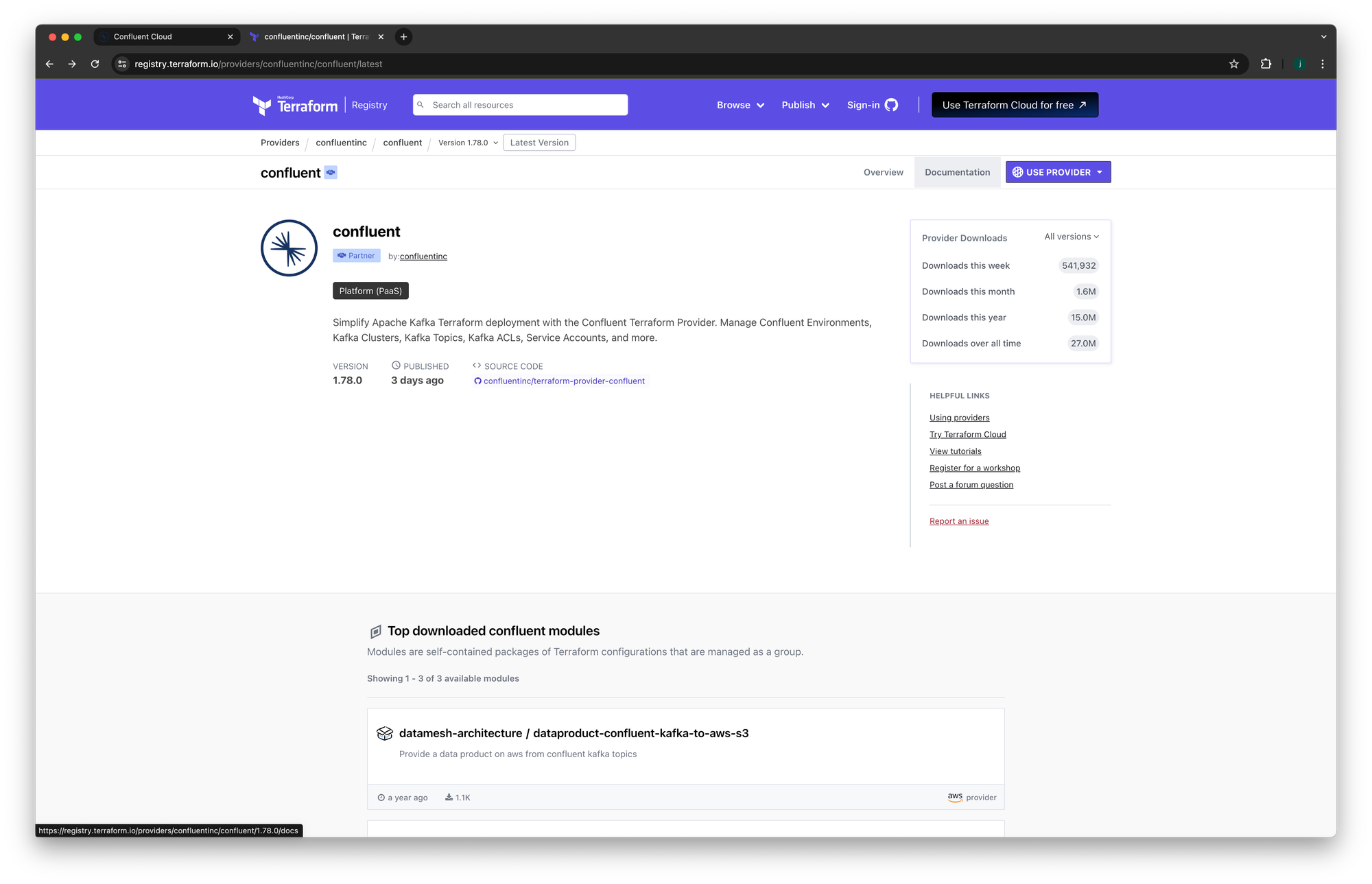

Terraform Confluent Provider

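To use Confluent Cloud from Terraform, start with the provider page.

It is currently published as a Partner provider, and the page shows provider details (service type, version, and so on).

The screenshot below shows the provider page. The Documentation button at the top right links to the official documentation.

Adding and configuring an account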

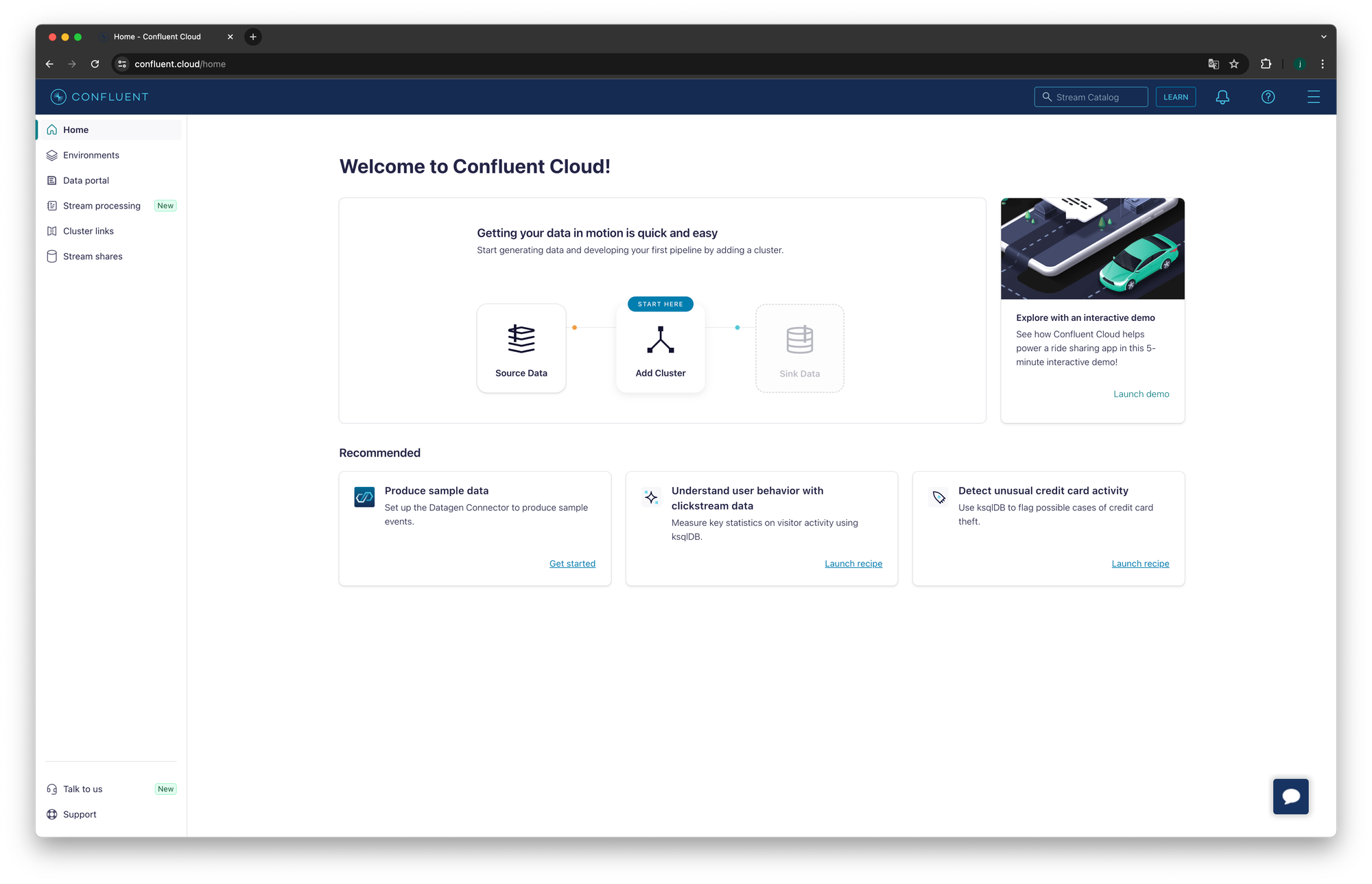

Go to the official site ( https://confluent.cloud/ ) and create an account.

After a successful login, the screen below appears.

This is the main dashboard, and it shows that no clusters exist yet.

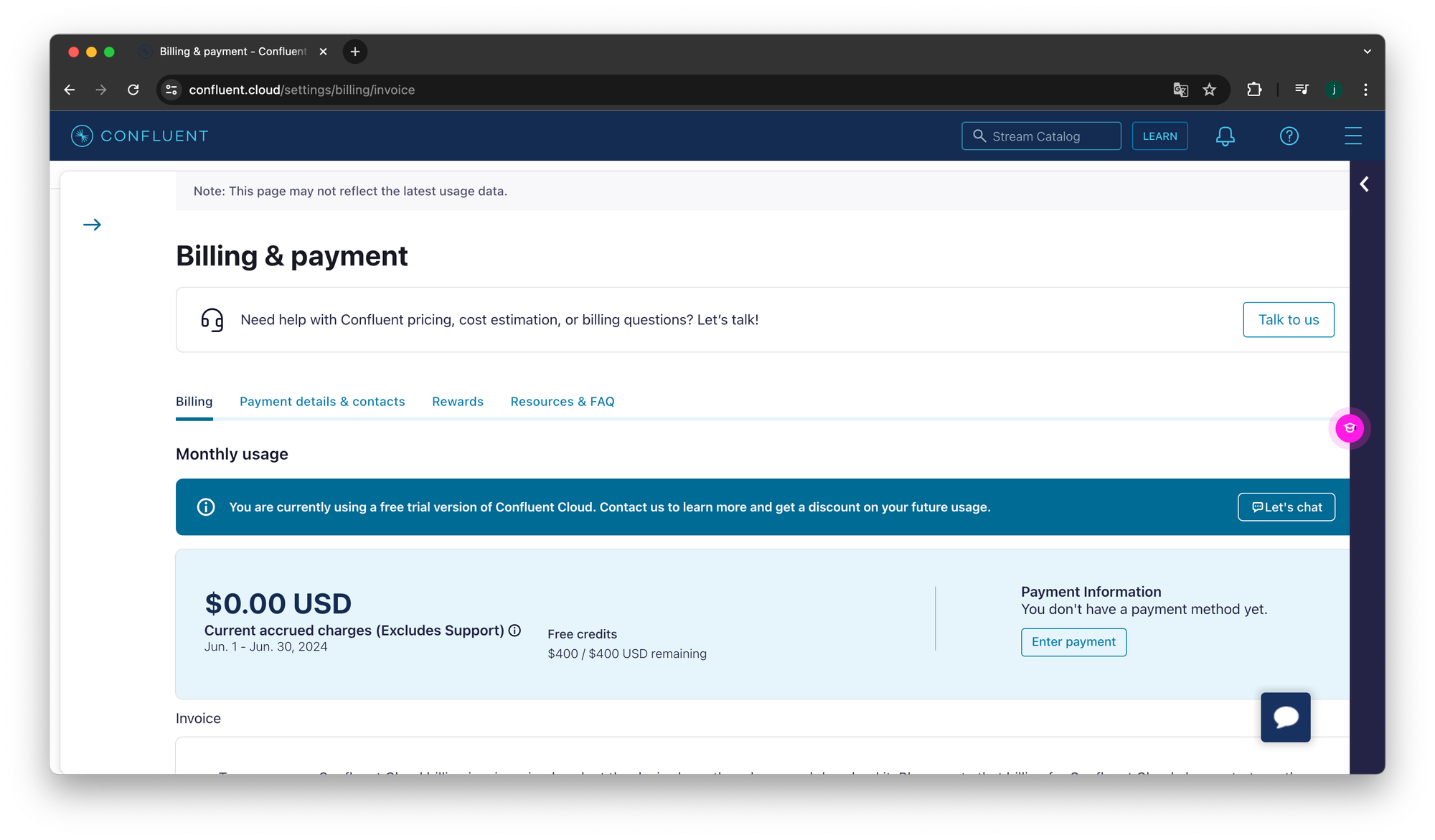

Open the top-right menu → Administration → Billing & payment tab to confirm that 400 credits have been granted (30 days remaining).

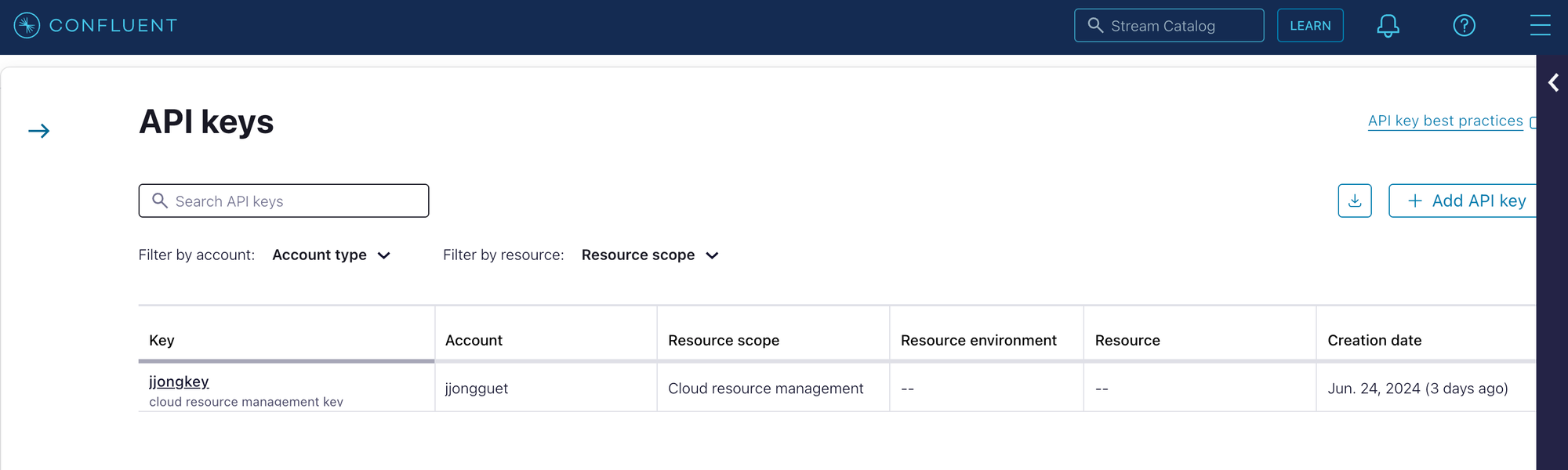

Confluent Cloud Access Key

In the Confluent dashboard, open the top-right menu and select API Keys.

The screen below appears. Click the Add API Key button.

I chose the following, in order, and issued the API key:

- Account: My account

- Resource scope: Cloud resource management

- API Key detail: Name(jjongkey), Description(cloud resource management key)

When the key is issued, save its Key and Secret values somewhere safe. The Secret cannot be viewed again later, so be sure to store both.

The issued key then appears in the API Keys list.

Billing & Payment

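Let's head off a problem that can come up later (e.g. the Terraform code is correct, but the deployment fails because no payment method is configured).

Go to Confluent Cloud → Billing & payment, select Enter payment on the right, and register a credit card. I registered with Credit card/bank.

Running a simple demo

In this post we deploy a simple Kafka demo.

https://github.com/confluentinc/terraform-provider-confluent

Confluent's official GitHub repository contains a variety of examples; here we deploy the RBAC-based standard-kafka example.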

git clone https://github.com/confluentinc/terraform-provider-confluent.git

cd terraform-provider-confluent/examples/configurations/standard-kafka-rbac

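code . # open the directory in the VS Code editor

To use the confluent provider, you need to supply cloud_api_key and cloud_api_secret. There are two common ways to do this.

Option 1: declare them as Terraform variables

main.tf reads the values from variables, and the variables are declared in variables.tf.

Option 2: set them as environment variables in the shell

Nothing is declared in main.tf or variables.tf; the values are set directly in the terminal.

This post uses the second option, so let's edit the code accordingly.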

Editing the code for the lab

Editing main.tf

# Before
provider "confluent" {
  cloud_api_key    = var.confluent_cloud_api_key
  cloud_api_secret = var.confluent_cloud_api_secret
}

# After
provider "confluent" {
}

Editing variables.tf

# Before
variable "confluent_cloud_api_key" {
  description = "Confluent Cloud API Key (also referred as Cloud API ID)"
  type        = string
  sensitive   = true
}

variable "confluent_cloud_api_secret" {
  description = "Confluent Cloud API Secret"
  type        = string
  sensitive   = true
}

# After
# variable "confluent_cloud_api_key" {
#   description = "Confluent Cloud API Key (also referred as Cloud API ID)"
#   type        = string
#   sensitive   = true
# }

# variable "confluent_cloud_api_secret" {
#   description = "Confluent Cloud API Secret"
#   type        = string
#   sensitive   = true
# }
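With both edits applied, the provider section of main.tf might look like the sketch below. The version pin matches the version that appears in the terraform init log in this post (1.78.0); the sketch assumes the credentials are exported as CONFLUENT_CLOUD_API_KEY and CONFLUENT_CLOUD_API_SECRET before Terraform runs.

```hcl
terraform {
  required_providers {
    confluent = {
      source  = "confluentinc/confluent"
      version = "1.78.0"
    }
  }
}

# No credentials in code: the provider reads them from the
# CONFLUENT_CLOUD_API_KEY and CONFLUENT_CLOUD_API_SECRET environment variables.
provider "confluent" {}
```

Keeping the secret out of the .tf files means it never ends up in version control, which is the main reason to prefer this option for a lab.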

Deployment process

The deployment proceeds in the following order:

terraform init → enter the API key → terraform plan → terraform apply

Deployment step 1: init

terraform init

terraform init

Initializing the backend...

Initializing provider plugins...

- Finding confluentinc/confluent versions matching "1.78.0"...

- Installing confluentinc/confluent v1.78.0...

- Installed confluentinc/confluent v1.78.0 (self-signed, key ID 5186AD92BC23B670)

Partner and community providers are signed by their developers.

If you'd like to know more about provider signing, you can read about it here:

https://www.terraform.io/docs/cli/plugins/signing.html

Terraform has created a lock file .terraform.lock.hcl to record the provider

selections it made above. Include this file in your version control repository

so that Terraform can guarantee to make the same selections by default when

you run "terraform init" in the future.

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

Deployment step 2: enter the API key

Replace <cloud_api_key> and <cloud_api_secret> with the API Key and Secret issued earlier, then run the following in the terminal.

export CONFLUENT_CLOUD_API_KEY="<cloud_api_key>"

export CONFLUENT_CLOUD_API_SECRET="<cloud_api_secret>"

Deployment step 3: plan

terraform plan

The plan shows what will be deployed. Only the important resources are highlighted here.

confluent_environment: An Environment is a group that contains Kafka clusters. A single Environment can hold multiple Kafka clusters.

# confluent_environment.staging will be created

+ resource "confluent_environment" "staging" {

+ display_name = "Staging"

+ id = (known after apply)

+ resource_name = (known after apply)

}
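To illustrate how one Environment can hold several clusters, here is a minimal sketch. The first cluster mirrors the values shown in this demo's plan output; the second cluster (analytics) is hypothetical and exists only to show the shared environment reference.

```hcl
resource "confluent_environment" "staging" {
  display_name = "Staging"
}

# Cluster from this demo.
resource "confluent_kafka_cluster" "standard" {
  display_name = "inventory"
  availability = "SINGLE_ZONE"
  cloud        = "AWS"
  region       = "us-east-2"
  standard {}

  environment {
    id = confluent_environment.staging.id
  }
}

# Hypothetical second cluster placed in the same environment.
resource "confluent_kafka_cluster" "analytics" {
  display_name = "analytics"
  availability = "SINGLE_ZONE"
  cloud        = "AWS"
  region       = "us-east-2"
  basic {}

  environment {
    id = confluent_environment.staging.id
  }
}
```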

confluent_kafka_cluster: Confluent offers four cluster types (Basic, Standard, Enterprise, Dedicated). This demo deploys a Standard cluster.

- Specifications differ by type. You can compare them at https://docs.confluent.io/cloud/current/clusters/cluster-types.html?ajs_aid=022949c0-481a-430d-ad9d-54d1e9ebaba9&ajs_uid=3425482#cluster-limit-comparison.

- The plan also shows the cloud provider (AWS), the region (us-east-2), and similar settings.

# confluent_kafka_cluster.standard will be created

+ resource "confluent_kafka_cluster" "standard" {

+ api_version = (known after apply)

+ availability = "SINGLE_ZONE"

+ bootstrap_endpoint = (known after apply)

+ cloud = "AWS"

+ display_name = "inventory"

+ id = (known after apply)

+ kind = (known after apply)

+ rbac_crn = (known after apply)

+ region = "us-east-2"

+ rest_endpoint = (known after apply)

+ environment {

+ id = (known after apply)

}

+ standard {}

}

confluent_kafka_topic: The topic that will be used in Kafka.

- It records the partition count (6) and the ID of the Kafka cluster that hosts the topic.

# confluent_kafka_topic.orders will be created

+ resource "confluent_kafka_topic" "orders" {

+ config = (known after apply)

+ id = (known after apply)

+ partitions_count = 6

+ rest_endpoint = (known after apply)

+ topic_name = "orders"

+ credentials {

+ key = (sensitive value)

+ secret = (sensitive value)

}

+ kafka_cluster {

+ id = (known after apply)

}

}
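The plan entry above corresponds to a resource block roughly like the following. This is a sketch reconstructed from the plan output, assuming the cluster and the app-manager API key are defined elsewhere in main.tf under the names that appear in the plan; the example's own main.tf remains the authoritative version.

```hcl
resource "confluent_kafka_topic" "orders" {
  topic_name       = "orders"
  partitions_count = 6
  rest_endpoint    = confluent_kafka_cluster.standard.rest_endpoint

  # Cluster that hosts the topic.
  kafka_cluster {
    id = confluent_kafka_cluster.standard.id
  }

  # Kafka API key used to create the topic (assumed here to be the app-manager key).
  credentials {
    key    = confluent_api_key.app-manager-kafka-api-key.id
    secret = confluent_api_key.app-manager-kafka-api-key.secret
  }
}
```

Because the credentials come from another resource's attributes, Terraform marks them as (sensitive value) in the plan, as seen above.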

confluent_service_account: A service account is an account dedicated to a particular role.

- The example creates app-consumer for the consumer role, app-producer for the producer role, and app-manager for the admin role.

# confluent_service_account.app-consumer will be created

+ resource "confluent_service_account" "app-consumer" {

+ api_version = (known after apply)

+ description = "Service account to consume from 'orders' topic of 'inventory' Kafka cluster"

+ display_name = "app-consumer"

+ id = (known after apply)

+ kind = (known after apply)

}

# confluent_service_account.app-manager will be created

+ resource "confluent_service_account" "app-manager" {

+ api_version = (known after apply)

+ description = "Service account to manage 'inventory' Kafka cluster"

+ display_name = "app-manager"

+ id = (known after apply)

+ kind = (known after apply)

}

# confluent_service_account.app-producer will be created

+ resource "confluent_service_account" "app-producer" {

+ api_version = (known after apply)

+ description = "Service account to produce to 'orders' topic of 'inventory' Kafka cluster"

+ display_name = "app-producer"

+ id = (known after apply)

+ kind = (known after apply)

}

confluent_role_binding: This example manages permissions with RBAC.

- Each account (app-consumer, app-manager, app-producer) is given the permissions it needs.

- The plan shows that each account is bound to a different role.

# confluent_role_binding.app-consumer-developer-read-from-group will be created

+ resource "confluent_role_binding" "app-consumer-developer-read-from-group" {

+ crn_pattern = (known after apply)

+ id = (known after apply)

+ principal = (known after apply)

+ role_name = "DeveloperRead"

}

# confluent_role_binding.app-consumer-developer-read-from-topic will be created

+ resource "confluent_role_binding" "app-consumer-developer-read-from-topic" {

+ crn_pattern = (known after apply)

+ id = (known after apply)

+ principal = (known after apply)

+ role_name = "DeveloperRead"

}

# confluent_role_binding.app-manager-kafka-cluster-admin will be created

+ resource "confluent_role_binding" "app-manager-kafka-cluster-admin" {

+ crn_pattern = (known after apply)

+ id = (known after apply)

+ principal = (known after apply)

+ role_name = "CloudClusterAdmin"

}

# confluent_role_binding.app-producer-developer-write will be created

+ resource "confluent_role_binding" "app-producer-developer-write" {

+ crn_pattern = (known after apply)

+ id = (known after apply)

+ principal = (known after apply)

+ role_name = "DeveloperWrite"

}
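As a rough illustration of what sits behind one of these plan entries, a role binding ties a principal (the service account) to a role on a resource pattern. The sketch below is reconstructed under assumptions: it uses the resource names from the plan, the User:<service account id> principal format, and a crn_pattern built from the cluster's rbac_crn attribute. Compare it against the example's main.tf before relying on it.

```hcl
# Grants the producer service account write access to the 'orders' topic.
resource "confluent_role_binding" "app-producer-developer-write" {
  principal   = "User:${confluent_service_account.app-producer.id}"
  role_name   = "DeveloperWrite"
  crn_pattern = "${confluent_kafka_cluster.standard.rbac_crn}/kafka=${confluent_kafka_cluster.standard.id}/topic=${confluent_kafka_topic.orders.topic_name}"
}
```

All three inputs depend on resources that do not exist yet, which is why the plan lists crn_pattern and principal as (known after apply).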

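The full log is shown below.

- The output is fairly long, but note the Warning near the end. It is a Deprecated Resource warning: the confluent_schema_registry_region data source is deprecated and will be removed in the next major version of the provider (2.0.0).

- It is a warning, not an error, so it can be ignored for now. The goal here is simply to try a deployment, not to optimize the resources.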

terraform plan

data.confluent_schema_registry_region.essentials: Reading...

data.confluent_schema_registry_region.essentials: Read complete after 1s [id=sgreg-1]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the

following symbols:

+ create

Terraform will perform the following actions:

# confluent_api_key.app-consumer-kafka-api-key will be created

+ resource "confluent_api_key" "app-consumer-kafka-api-key" {

+ description = "Kafka API Key that is owned by 'app-consumer' service account"

+ disable_wait_for_ready = false

+ display_name = "app-consumer-kafka-api-key"

+ id = (known after apply)

+ secret = (sensitive value)

+ managed_resource {

+ api_version = (known after apply)

+ id = (known after apply)

+ kind = (known after apply)

+ environment {

+ id = (known after apply)

}

}

+ owner {

+ api_version = (known after apply)

+ id = (known after apply)

+ kind = (known after apply)

}

}

# confluent_api_key.app-manager-kafka-api-key will be created

+ resource "confluent_api_key" "app-manager-kafka-api-key" {

+ description = "Kafka API Key that is owned by 'app-manager' service account"

+ disable_wait_for_ready = false

+ display_name = "app-manager-kafka-api-key"

+ id = (known after apply)

+ secret = (sensitive value)

+ managed_resource {

+ api_version = (known after apply)

+ id = (known after apply)

+ kind = (known after apply)

+ environment {

+ id = (known after apply)

}

}

+ owner {

+ api_version = (known after apply)

+ id = (known after apply)

+ kind = (known after apply)

}

}

# confluent_api_key.app-producer-kafka-api-key will be created

+ resource "confluent_api_key" "app-producer-kafka-api-key" {

+ description = "Kafka API Key that is owned by 'app-producer' service account"

+ disable_wait_for_ready = false

+ display_name = "app-producer-kafka-api-key"

+ id = (known after apply)

+ secret = (sensitive value)

+ managed_resource {

+ api_version = (known after apply)

+ id = (known after apply)

+ kind = (known after apply)

+ environment {

+ id = (known after apply)

}

}

+ owner {

+ api_version = (known after apply)

+ id = (known after apply)

+ kind = (known after apply)

}

}

# confluent_environment.staging will be created

+ resource "confluent_environment" "staging" {

+ display_name = "Staging"

+ id = (known after apply)

+ resource_name = (known after apply)

}

# confluent_kafka_cluster.standard will be created

+ resource "confluent_kafka_cluster" "standard" {

+ api_version = (known after apply)

+ availability = "SINGLE_ZONE"

+ bootstrap_endpoint = (known after apply)

+ cloud = "AWS"

+ display_name = "inventory"

+ id = (known after apply)

+ kind = (known after apply)

+ rbac_crn = (known after apply)

+ region = "us-east-2"

+ rest_endpoint = (known after apply)

+ environment {

+ id = (known after apply)

}

+ standard {}

}

# confluent_kafka_topic.orders will be created

+ resource "confluent_kafka_topic" "orders" {

+ config = (known after apply)

+ id = (known after apply)

+ partitions_count = 6

+ rest_endpoint = (known after apply)

+ topic_name = "orders"

+ credentials {

+ key = (sensitive value)

+ secret = (sensitive value)

}

+ kafka_cluster {

+ id = (known after apply)

}

}

# confluent_role_binding.app-consumer-developer-read-from-group will be created

+ resource "confluent_role_binding" "app-consumer-developer-read-from-group" {

+ crn_pattern = (known after apply)

+ id = (known after apply)

+ principal = (known after apply)

+ role_name = "DeveloperRead"

}

# confluent_role_binding.app-consumer-developer-read-from-topic will be created

+ resource "confluent_role_binding" "app-consumer-developer-read-from-topic" {

+ crn_pattern = (known after apply)

+ id = (known after apply)

+ principal = (known after apply)

+ role_name = "DeveloperRead"

}

# confluent_role_binding.app-manager-kafka-cluster-admin will be created

+ resource "confluent_role_binding" "app-manager-kafka-cluster-admin" {

+ crn_pattern = (known after apply)

+ id = (known after apply)

+ principal = (known after apply)

+ role_name = "CloudClusterAdmin"

}

# confluent_role_binding.app-producer-developer-write will be created

+ resource "confluent_role_binding" "app-producer-developer-write" {

+ crn_pattern = (known after apply)

+ id = (known after apply)

+ principal = (known after apply)

+ role_name = "DeveloperWrite"

}

# confluent_schema_registry_cluster.essentials will be created

+ resource "confluent_schema_registry_cluster" "essentials" {

+ api_version = (known after apply)

+ display_name = (known after apply)

+ id = (known after apply)

+ kind = (known after apply)

+ package = "ESSENTIALS"

+ resource_name = (known after apply)

+ rest_endpoint = (known after apply)

+ environment {

+ id = (known after apply)

}

+ region {

+ id = "sgreg-1"

}

}

# confluent_service_account.app-consumer will be created

+ resource "confluent_service_account" "app-consumer" {

+ api_version = (known after apply)

+ description = "Service account to consume from 'orders' topic of 'inventory' Kafka cluster"

+ display_name = "app-consumer"

+ id = (known after apply)

+ kind = (known after apply)

}

# confluent_service_account.app-manager will be created

+ resource "confluent_service_account" "app-manager" {

+ api_version = (known after apply)

+ description = "Service account to manage 'inventory' Kafka cluster"

+ display_name = "app-manager"

+ id = (known after apply)

+ kind = (known after apply)

}

# confluent_service_account.app-producer will be created

+ resource "confluent_service_account" "app-producer" {

+ api_version = (known after apply)

+ description = "Service account to produce to 'orders' topic of 'inventory' Kafka cluster"

+ display_name = "app-producer"

+ id = (known after apply)

+ kind = (known after apply)

}

Plan: 14 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ resource-ids = (sensitive value)

╷

│ Warning: Deprecated Resource

│

│ with data.confluent_schema_registry_region.essentials,

│ on main.tf line 19, in data "confluent_schema_registry_region" "essentials":

│ 19: data "confluent_schema_registry_region" "essentials" {

│

│ The "schema_registry_region" data source has been deprecated and will be removed in the next major version of the provider

│ (2.0.0). Refer to the Upgrade Guide at

│ https://registry.terraform.io/providers/confluentinc/confluent/latest/docs/guides/version-2-upgrade for more details. The

│ guide will be published once version 2.0.0 is released.

│

│ (and 3 more similar warnings elsewhere)

╵

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Note: You didn't use the -out option to save this plan, so Terraform can't guarantee to take exactly these actions if you run

"terraform apply" now.

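Deployment step 4: apply

terraform apply

When the confirmation prompt appears, enter yes to deploy.

- Note that if a resource name used in the example (e.g. the app-producer or app-consumer confluent_service_account) already exists from an earlier deployment, you may hit an error such as 409 Conflict: Service name is already in use.

- Watch out for duplicate resource names.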
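One way to reduce the chance of such a name collision is to prefix the display names with a value that is unique per deployment. This is a minimal sketch and not part of the official example: the name_prefix variable and its default are hypothetical.

```hcl
# Hypothetical variable used to keep names unique across deployments.
variable "name_prefix" {
  description = "Prefix that makes service account names unique per deployment"
  type        = string
  default     = "t101"
}

resource "confluent_service_account" "app-producer" {
  display_name = "${var.name_prefix}-app-producer"
  description  = "Service account to produce to 'orders' topic of 'inventory' Kafka cluster"
}
```

The same pattern would apply to app-consumer and app-manager; only the display_name changes, so the rest of the configuration can keep referencing the resources by their Terraform names.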

terraform apply

data.confluent_schema_registry_region.essentials: Reading...

data.confluent_schema_registry_region.essentials: Read complete after 1s [id=sgreg-1]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the

following symbols:

+ create

Terraform will perform the following actions:

# confluent_api_key.app-consumer-kafka-api-key will be created

+ resource "confluent_api_key" "app-consumer-kafka-api-key" {

+ description = "Kafka API Key that is owned by 'app-consumer' service account"

+ disable_wait_for_ready = false

+ display_name = "app-consumer-kafka-api-key"

+ id = (known after apply)

+ secret = (sensitive value)

+ managed_resource {

+ api_version = (known after apply)

+ id = (known after apply)

+ kind = (known after apply)

+ environment {

+ id = (known after apply)

}

}

+ owner {

+ api_version = (known after apply)

+ id = (known after apply)

+ kind = (known after apply)

}

}

# confluent_api_key.app-manager-kafka-api-key will be created

+ resource "confluent_api_key" "app-manager-kafka-api-key" {

+ description = "Kafka API Key that is owned by 'app-manager' service account"

+ disable_wait_for_ready = false

+ display_name = "app-manager-kafka-api-key"

+ id = (known after apply)

+ secret = (sensitive value)

+ managed_resource {

+ api_version = (known after apply)

+ id = (known after apply)

+ kind = (known after apply)

+ environment {

+ id = (known after apply)

}

}

+ owner {

+ api_version = (known after apply)

+ id = (known after apply)

+ kind = (known after apply)

}

}

# confluent_api_key.app-producer-kafka-api-key will be created

+ resource "confluent_api_key" "app-producer-kafka-api-key" {

+ description = "Kafka API Key that is owned by 'app-producer' service account"

+ disable_wait_for_ready = false

+ display_name = "app-producer-kafka-api-key"

+ id = (known after apply)

+ secret = (sensitive value)

+ managed_resource {

+ api_version = (known after apply)

+ id = (known after apply)

+ kind = (known after apply)

+ environment {

+ id = (known after apply)

}

}

+ owner {

+ api_version = (known after apply)

+ id = (known after apply)

+ kind = (known after apply)

}

}

# confluent_environment.staging will be created

+ resource "confluent_environment" "staging" {

+ display_name = "Staging"

+ id = (known after apply)

+ resource_name = (known after apply)

}

# confluent_kafka_cluster.standard will be created

+ resource "confluent_kafka_cluster" "standard" {

+ api_version = (known after apply)

+ availability = "SINGLE_ZONE"

+ bootstrap_endpoint = (known after apply)

+ cloud = "AWS"

+ display_name = "inventory"

+ id = (known after apply)

+ kind = (known after apply)

+ rbac_crn = (known after apply)

+ region = "us-east-2"

+ rest_endpoint = (known after apply)

+ environment {

+ id = (known after apply)

}

+ standard {}

}

# confluent_kafka_topic.orders will be created

+ resource "confluent_kafka_topic" "orders" {

+ config = (known after apply)

+ id = (known after apply)

+ partitions_count = 6

+ rest_endpoint = (known after apply)

+ topic_name = "orders"

+ credentials {

+ key = (sensitive value)

+ secret = (sensitive value)

}

+ kafka_cluster {

+ id = (known after apply)

}

}

# confluent_role_binding.app-consumer-developer-read-from-group will be created

+ resource "confluent_role_binding" "app-consumer-developer-read-from-group" {

+ crn_pattern = (known after apply)

+ id = (known after apply)

+ principal = (known after apply)

+ role_name = "DeveloperRead"

}

# confluent_role_binding.app-consumer-developer-read-from-topic will be created

+ resource "confluent_role_binding" "app-consumer-developer-read-from-topic" {

+ crn_pattern = (known after apply)

+ id = (known after apply)

+ principal = (known after apply)

+ role_name = "DeveloperRead"

}

# confluent_role_binding.app-manager-kafka-cluster-admin will be created

+ resource "confluent_role_binding" "app-manager-kafka-cluster-admin" {

+ crn_pattern = (known after apply)

+ id = (known after apply)

+ principal = (known after apply)

+ role_name = "CloudClusterAdmin"

}

# confluent_role_binding.app-producer-developer-write will be created

+ resource "confluent_role_binding" "app-producer-developer-write" {

+ crn_pattern = (known after apply)

+ id = (known after apply)

+ principal = (known after apply)

+ role_name = "DeveloperWrite"

}

# confluent_schema_registry_cluster.essentials will be created

+ resource "confluent_schema_registry_cluster" "essentials" {

+ api_version = (known after apply)

+ display_name = (known after apply)

+ id = (known after apply)

+ kind = (known after apply)

+ package = "ESSENTIALS"

+ resource_name = (known after apply)

+ rest_endpoint = (known after apply)

+ environment {

+ id = (known after apply)

}

+ region {

+ id = "sgreg-1"

}

}

# confluent_service_account.app-consumer will be created

+ resource "confluent_service_account" "app-consumer" {

+ api_version = (known after apply)

+ description = "Service account to consume from 'orders' topic of 'inventory' Kafka cluster"

+ display_name = "app-consumer"

+ id = (known after apply)

+ kind = (known after apply)

}

# confluent_service_account.app-manager will be created

+ resource "confluent_service_account" "app-manager" {

+ api_version = (known after apply)

+ description = "Service account to manage 'inventory' Kafka cluster"

+ display_name = "app-manager"

+ id = (known after apply)

+ kind = (known after apply)

}

# confluent_service_account.app-producer will be created

+ resource "confluent_service_account" "app-producer" {

+ api_version = (known after apply)

+ description = "Service account to produce to 'orders' topic of 'inventory' Kafka cluster"

+ display_name = "app-producer"

+ id = (known after apply)

+ kind = (known after apply)

}

Plan: 14 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ resource-ids = (sensitive value)

╷

│ Warning: Deprecated Resource

│

│ with data.confluent_schema_registry_region.essentials,

│ on main.tf line 19, in data "confluent_schema_registry_region" "essentials":

│ 19: data "confluent_schema_registry_region" "essentials" {

│

│ The "schema_registry_region" data source has been deprecated and will be removed in the next major version of the provider

│ (2.0.0). Refer to the Upgrade Guide at

│ https://registry.terraform.io/providers/confluentinc/confluent/latest/docs/guides/version-2-upgrade for more details. The

│ guide will be published once version 2.0.0 is released.

│

│ (and 3 more similar warnings elsewhere)

╵

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

confluent_environment.staging: Creating...

confluent_service_account.app-producer: Creating...

confluent_service_account.app-consumer: Creating...

confluent_service_account.app-manager: Creating...

confluent_environment.staging: Creation complete after 1s [id=env-w10xjg]

confluent_schema_registry_cluster.essentials: Creating...

confluent_kafka_cluster.standard: Creating...

confluent_service_account.app-producer: Creation complete after 2s [id=sa-2n2v51]

confluent_service_account.app-manager: Creation complete after 2s [id=sa-rxrdv0]

confluent_service_account.app-consumer: Creation complete after 2s [id=sa-02xo55]

confluent_kafka_cluster.standard: Creation complete after 7s [id=lkc-1dgvzz]

confluent_role_binding.app-manager-kafka-cluster-admin: Creating...

confluent_role_binding.app-consumer-developer-read-from-group: Creating...

confluent_api_key.app-producer-kafka-api-key: Creating...

confluent_api_key.app-consumer-kafka-api-key: Creating...

confluent_schema_registry_cluster.essentials: Creation complete after 7s [id=lsrc-n528nz]

confluent_role_binding.app-consumer-developer-read-from-group: Still creating... [10s elapsed]

confluent_api_key.app-consumer-kafka-api-key: Still creating... [10s elapsed]

confluent_api_key.app-producer-kafka-api-key: Still creating... [10s elapsed]

confluent_role_binding.app-manager-kafka-cluster-admin: Still creating... [10s elapsed]

confluent_api_key.app-consumer-kafka-api-key: Still creating... [20s elapsed]

confluent_role_binding.app-consumer-developer-read-from-group: Still creating... [20s elapsed]

confluent_api_key.app-producer-kafka-api-key: Still creating... [20s elapsed]

confluent_role_binding.app-manager-kafka-cluster-admin: Still creating... [20s elapsed]

confluent_api_key.app-consumer-kafka-api-key: Still creating... [30s elapsed]

confluent_api_key.app-producer-kafka-api-key: Still creating... [30s elapsed]

confluent_role_binding.app-consumer-developer-read-from-group: Still creating... [30s elapsed]

confluent_role_binding.app-manager-kafka-cluster-admin: Still creating... [30s elapsed]

confluent_role_binding.app-consumer-developer-read-from-group: Still creating... [40s elapsed]

confluent_api_key.app-producer-kafka-api-key: Still creating... [40s elapsed]

confluent_api_key.app-consumer-kafka-api-key: Still creating... [40s elapsed]

confluent_role_binding.app-manager-kafka-cluster-admin: Still creating... [40s elapsed]

confluent_api_key.app-consumer-kafka-api-key: Still creating... [50s elapsed]

confluent_role_binding.app-consumer-developer-read-from-group: Still creating... [50s elapsed]

confluent_api_key.app-producer-kafka-api-key: Still creating... [50s elapsed]

confluent_role_binding.app-manager-kafka-cluster-admin: Still creating... [50s elapsed]

confluent_role_binding.app-consumer-developer-read-from-group: Still creating... [1m0s elapsed]

confluent_api_key.app-producer-kafka-api-key: Still creating... [1m0s elapsed]

confluent_api_key.app-consumer-kafka-api-key: Still creating... [1m0s elapsed]

confluent_role_binding.app-manager-kafka-cluster-admin: Still creating... [1m0s elapsed]

confluent_role_binding.app-consumer-developer-read-from-group: Still creating... [1m10s elapsed]

confluent_api_key.app-consumer-kafka-api-key: Still creating... [1m10s elapsed]

confluent_api_key.app-producer-kafka-api-key: Still creating... [1m10s elapsed]

confluent_role_binding.app-manager-kafka-cluster-admin: Still creating... [1m10s elapsed]

confluent_role_binding.app-manager-kafka-cluster-admin: Still creating... [1m20s elapsed]

confluent_api_key.app-consumer-kafka-api-key: Still creating... [1m20s elapsed]

confluent_role_binding.app-consumer-developer-read-from-group: Still creating... [1m20s elapsed]

confluent_api_key.app-producer-kafka-api-key: Still creating... [1m20s elapsed]

confluent_role_binding.app-consumer-developer-read-from-group: Still creating... [1m30s elapsed]

confluent_api_key.app-producer-kafka-api-key: Still creating... [1m30s elapsed]

confluent_role_binding.app-manager-kafka-cluster-admin: Still creating... [1m30s elapsed]

confluent_api_key.app-consumer-kafka-api-key: Still creating... [1m30s elapsed]

confluent_role_binding.app-consumer-developer-read-from-group: Creation complete after 1m31s [id=rb-dLQllK]

confluent_role_binding.app-manager-kafka-cluster-admin: Creation complete after 1m31s [id=rb-54rEEy]

confluent_api_key.app-manager-kafka-api-key: Creating...

confluent_api_key.app-consumer-kafka-api-key: Still creating... [1m40s elapsed]

confluent_api_key.app-producer-kafka-api-key: Still creating... [1m40s elapsed]

confluent_api_key.app-manager-kafka-api-key: Still creating... [10s elapsed]

confluent_api_key.app-producer-kafka-api-key: Still creating... [1m50s elapsed]

confluent_api_key.app-consumer-kafka-api-key: Still creating... [1m50s elapsed]

confluent_api_key.app-manager-kafka-api-key: Still creating... [20s elapsed]

confluent_api_key.app-consumer-kafka-api-key: Still creating... [2m0s elapsed]

confluent_api_key.app-producer-kafka-api-key: Still creating... [2m0s elapsed]

confluent_api_key.app-manager-kafka-api-key: Still creating... [30s elapsed]

confluent_api_key.app-consumer-kafka-api-key: Creation complete after 2m3s [id=CPXXMHDM5NKKXEPV]

confluent_api_key.app-producer-kafka-api-key: Creation complete after 2m3s [id=3NSTHIB4E7WLHYOS]

confluent_api_key.app-manager-kafka-api-key: Still creating... [40s elapsed]

confluent_api_key.app-manager-kafka-api-key: Still creating... [50s elapsed]

confluent_api_key.app-manager-kafka-api-key: Still creating... [1m0s elapsed]

confluent_api_key.app-manager-kafka-api-key: Still creating... [1m10s elapsed]

confluent_api_key.app-manager-kafka-api-key: Still creating... [1m20s elapsed]

confluent_api_key.app-manager-kafka-api-key: Still creating... [1m30s elapsed]

confluent_api_key.app-manager-kafka-api-key: Still creating... [1m40s elapsed]

confluent_api_key.app-manager-kafka-api-key: Still creating... [1m50s elapsed]

confluent_api_key.app-manager-kafka-api-key: Still creating... [2m0s elapsed]

confluent_api_key.app-manager-kafka-api-key: Creation complete after 2m4s [id=YQDCNRPAKBHH3HWR]

confluent_kafka_topic.orders: Creating...

confluent_kafka_topic.orders: Still creating... [10s elapsed]

confluent_kafka_topic.orders: Creation complete after 12s [id=lkc-1dgvzz/orders]

confluent_role_binding.app-producer-developer-write: Creating...

confluent_role_binding.app-consumer-developer-read-from-topic: Creating...

confluent_role_binding.app-producer-developer-write: Still creating... [10s elapsed]

confluent_role_binding.app-consumer-developer-read-from-topic: Still creating... [10s elapsed]

confluent_role_binding.app-consumer-developer-read-from-topic: Still creating... [20s elapsed]

confluent_role_binding.app-producer-developer-write: Still creating... [20s elapsed]

confluent_role_binding.app-producer-developer-write: Still creating... [30s elapsed]

confluent_role_binding.app-consumer-developer-read-from-topic: Still creating... [30s elapsed]

confluent_role_binding.app-consumer-developer-read-from-topic: Still creating... [40s elapsed]

confluent_role_binding.app-producer-developer-write: Still creating... [40s elapsed]

confluent_role_binding.app-producer-developer-write: Still creating... [50s elapsed]

confluent_role_binding.app-consumer-developer-read-from-topic: Still creating... [50s elapsed]

confluent_role_binding.app-consumer-developer-read-from-topic: Still creating... [1m0s elapsed]

confluent_role_binding.app-producer-developer-write: Still creating... [1m0s elapsed]

confluent_role_binding.app-producer-developer-write: Still creating... [1m10s elapsed]

confluent_role_binding.app-consumer-developer-read-from-topic: Still creating... [1m10s elapsed]

confluent_role_binding.app-producer-developer-write: Still creating... [1m20s elapsed]

confluent_role_binding.app-consumer-developer-read-from-topic: Still creating... [1m20s elapsed]

confluent_role_binding.app-consumer-developer-read-from-topic: Still creating... [1m30s elapsed]

confluent_role_binding.app-producer-developer-write: Still creating... [1m30s elapsed]

confluent_role_binding.app-consumer-developer-read-from-topic: Creation complete after 1m32s [id=rb-3PrKW9]

confluent_role_binding.app-producer-developer-write: Creation complete after 1m32s [id=rb-Y1EYMl]

╷

│ Warning: Deprecated Resource

│

│ with confluent_schema_registry_cluster.essentials,

│ on main.tf line 25, in resource "confluent_schema_registry_cluster" "essentials":

│ 25: resource "confluent_schema_registry_cluster" "essentials" {

│

│ The "schema_registry_cluster" resource has been deprecated and will be removed in the next major version of the provider

│ (2.0.0). Refer to the Upgrade Guide at

│ https://registry.terraform.io/providers/confluentinc/confluent/latest/docs/guides/version-2-upgrade for more details. The

│ guide will be published once version 2.0.0 is released.

╵

Apply complete! Resources: 14 added, 0 changed, 0 destroyed.

Outputs:

resource-ids = <sensitive>

Verifying the deployment

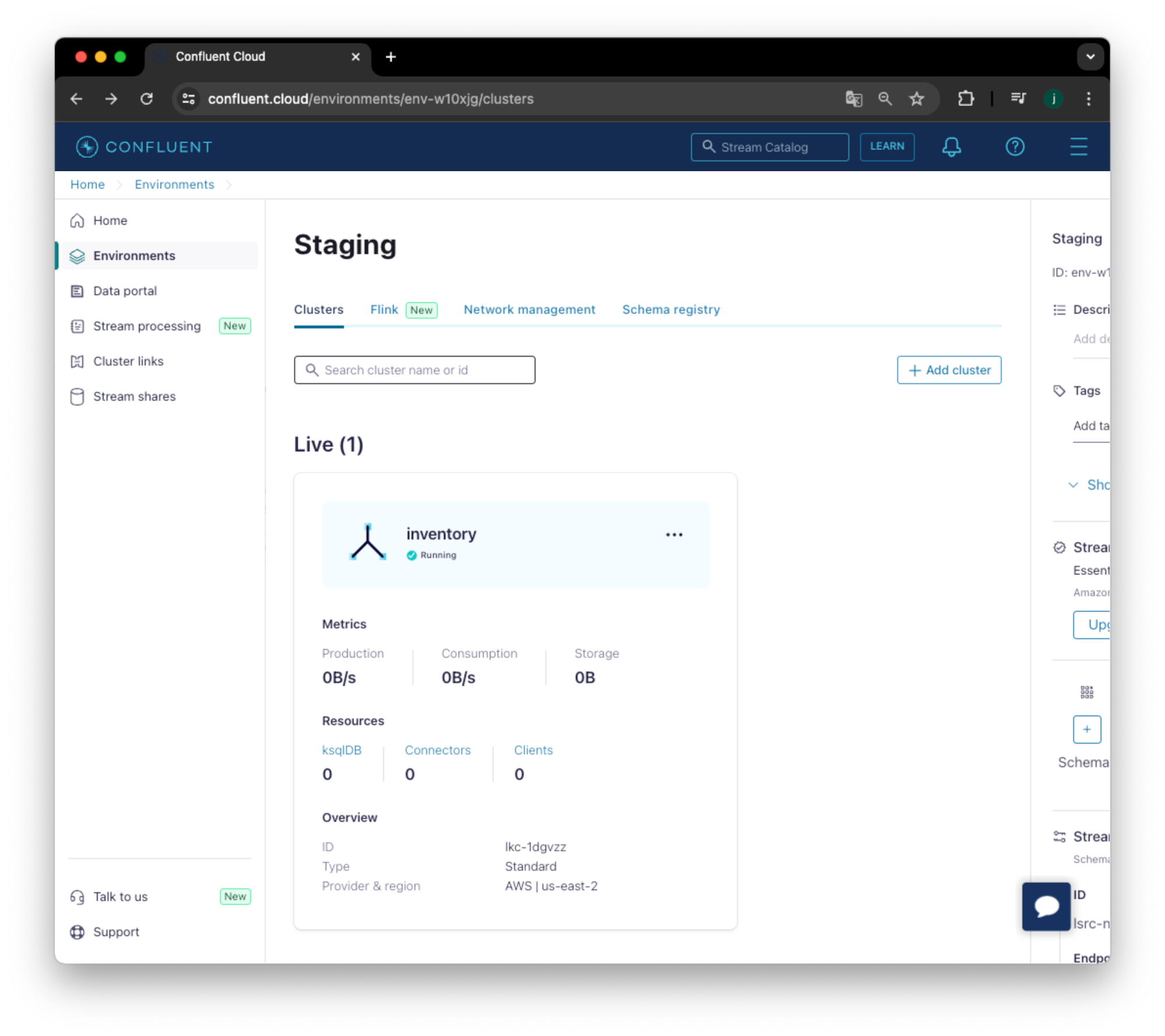

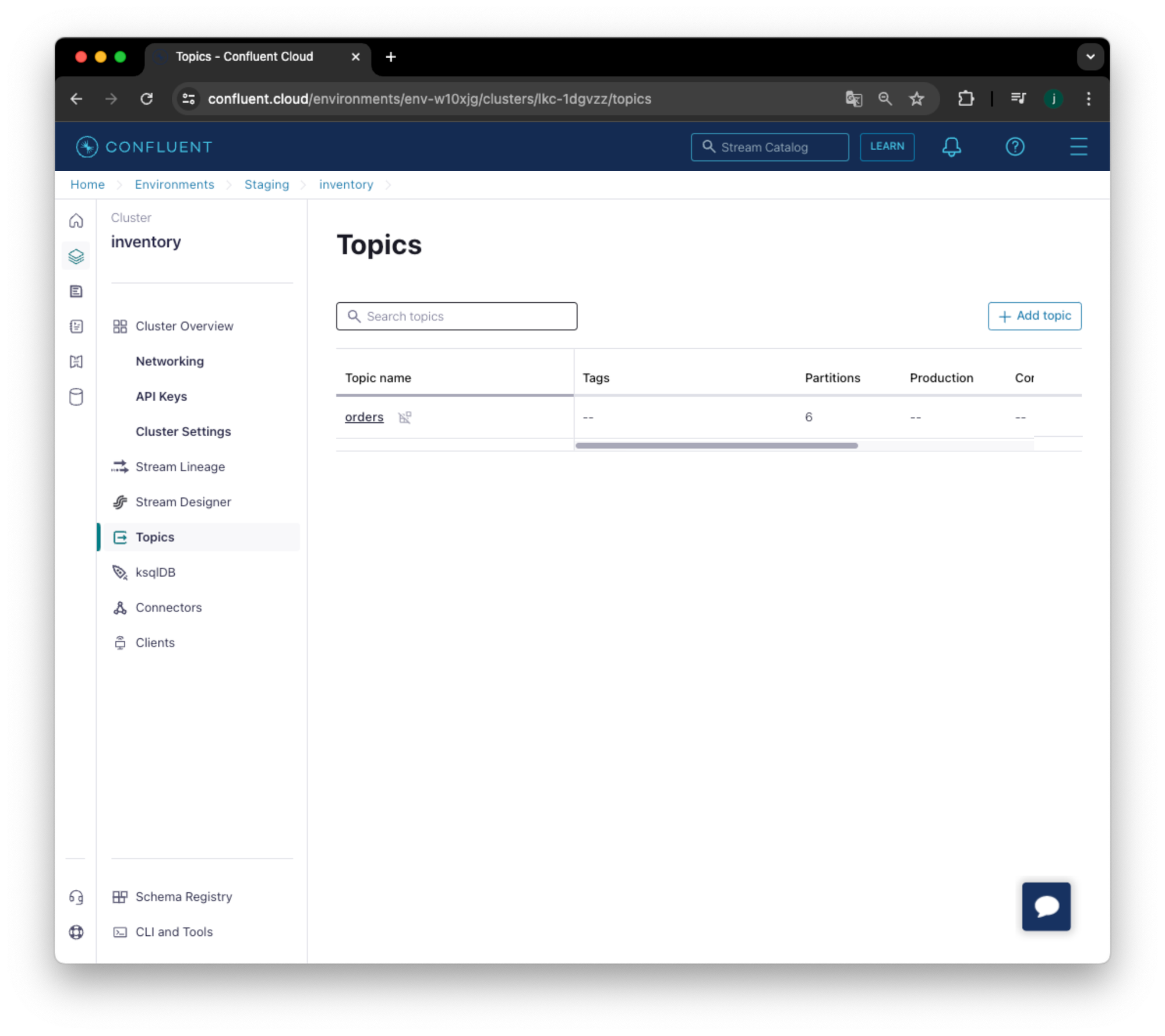

The deployment completed successfully. The deployed resources are visible in the dashboard.

The same resources can also be checked with terraform commands.

Here we look at resource-ids, the output defined in outputs.tf.

terraform output resource-ids
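Because resource-ids is a single block of text, a script that needs one value has to pick it out by its label. The following is a minimal sketch, assuming the "label: value" layout seen in this run's output; `extract_field` is a hypothetical helper name, not part of Terraform or the Confluent CLI, and the sample lines are copied from this run.

```shell
# Hypothetical helper (not part of Terraform): reads the resource-ids text on
# stdin and prints the value that follows "<label>:" with quotes removed.
extract_field() {
  label="$1"
  sed -n "s/^[[:space:]]*${label}:[[:space:]]*//p" | tr -d '"' | head -n 1
}

# Sample lines copied from the output of this run.
sample_output='Environment ID: env-w10xjg
Kafka Cluster ID: lkc-1dgvzz
Kafka topic name: orders'

env_id=$(printf '%s\n' "$sample_output" | extract_field "Environment ID")
cluster_id=$(printf '%s\n' "$sample_output" | extract_field "Kafka Cluster ID")
echo "environment=${env_id} cluster=${cluster_id}"
```

With real state, the input would presumably come from `terraform output -raw resource-ids` piped into the helper instead of the sample variable.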

<<EOT

Environment ID: env-w10xjg

Kafka Cluster ID: lkc-1dgvzz

Kafka topic name: orders

Service Accounts and their Kafka API Keys (API Keys inherit the permissions granted to the owner):

app-manager: sa-rxrdv0

app-manager's Kafka API Key: "YQDCNRPAKBHH3HWR"

app-manager's Kafka API Secret: "D8qofo/h+1hi0YZVfSwzGXO2Z0Dot8wmSZCY4lXfvC6QXSxth40iH4DW55rncu1X"

app-producer: sa-2n2v51

app-producer's Kafka API Key: "3NSTHIB4E7WLHYOS"

app-producer's Kafka API Secret: "W9pbChceKy0V99w9o+vOEhzLfxEMa5XgkK8lXbz30iGFR/XS5m+RCbGci3s3EEXv"

app-consumer: sa-02xo55

app-consumer's Kafka API Key: "CPXXMHDM5NKKXEPV"

app-consumer's Kafka API Secret: "ATU6uaj2ygAVw9Cc+fnYrjN6fr1DT8EAgtXmPFYF+oYjS9Fo2xjcMGg2t50s9G91"

In order to use the Confluent CLI v2 to produce and consume messages from topic 'orders' using Kafka API Keys

of app-producer and app-consumer service accounts

run the following commands:

# 1. Log in to Confluent Cloud

$ confluent login

# 2. Produce key-value records to topic 'orders' by using app-producer's Kafka API Key

$ confluent kafka topic produce orders --environment env-w10xjg --cluster lkc-1dgvzz --api-key "3NSTHIB4E7WLHYOS" --api-secret "W9pbChceKy0V99w9o+vOEhzLfxEMa5XgkK8lXbz30iGFR/XS5m+RCbGci3s3EEXv"

# Enter a few records and then press 'Ctrl-C' when you're done.

# Sample records:

# {"number":1,"date":18500,"shipping_address":"899 W Evelyn Ave, Mountain View, CA 94041, USA","cost":15.00}

# {"number":2,"date":18501,"shipping_address":"1 Bedford St, London WC2E 9HG, United Kingdom","cost":5.00}

# {"number":3,"date":18502,"shipping_address":"3307 Northland Dr Suite 400, Austin, TX 78731, USA","cost":10.00}

# 3. Consume records from topic 'orders' by using app-consumer's Kafka API Key

$ confluent kafka topic consume orders --from-beginning --environment env-w10xjg --cluster lkc-1dgvzz --api-key "CPXXMHDM5NKKXEPV" --api-secret "ATU6uaj2ygAVw9Cc+fnYrjN6fr1DT8EAgtXmPFYF+oYjS9Fo2xjcMGg2t50s9G91"

# When you are done, press 'Ctrl-C'.

EOT

Validating the deployed resources

Let's test directly with the commands shown in the resource-ids output.

First, install the Confluent CLI and log in.

#brew

brew install confluentinc/tap/cli

#apt

sudo apt install curl gnupg

sudo mkdir -p /etc/apt/keyrings

curl https://packages.confluent.io/confluent-cli/deb/archive.key | sudo gpg --dearmor -o /etc/apt/keyrings/confluent-cli.gpg

sudo chmod go+r /etc/apt/keyrings/confluent-cli.gpg

sudo apt update

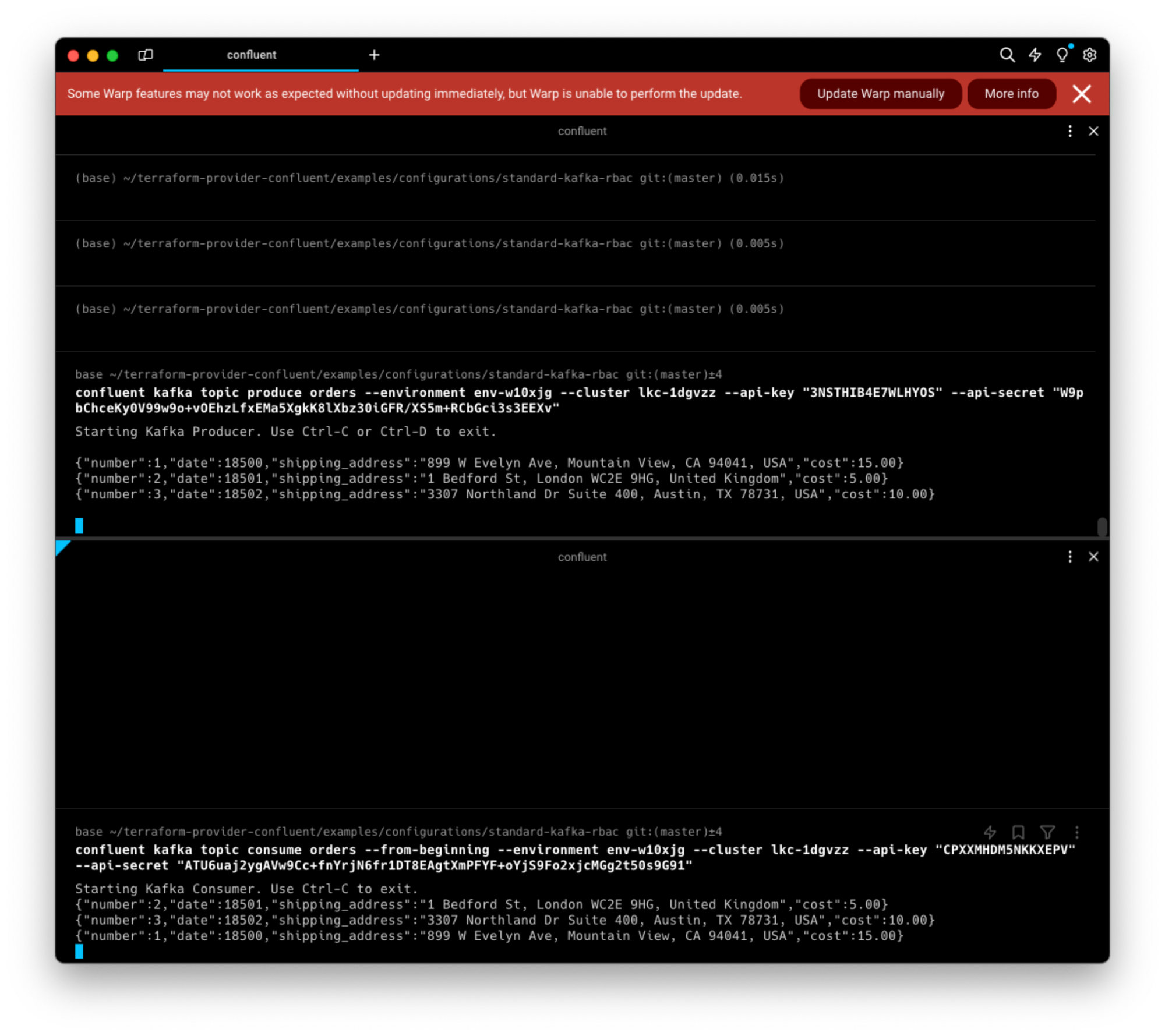

sudo apt install confluent-cli

confluent login

Next, run the command listed under "2. Produce key-value records to topic 'orders' by using app-producer's Kafka API Key".

- Note: if you have not run confluent login first, the CLI fails with an error asking you to pass the bootstrap address as a parameter.
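The login error is easy to confuse with a missing installation, so it can help to rule that out first. This is a minimal sketch that only checks whether the CLI is on PATH; it does not verify the login state, and `require_cmd` is a hypothetical helper name.

```shell
# Hypothetical helper: succeeds only if the named command is on PATH.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    return 0
  fi
  echo "missing command: $1" >&2
  return 1
}

if require_cmd confluent; then
  echo "confluent CLI found; run 'confluent login' before producing"
else
  echo "install the confluent CLI first"
fi
```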

If the message below appears, the Kafka producer is running.

confluent kafka topic produce orders --environment env-w10xjg --cluster lkc-1dgvzz --api-key "3NSTHIB4E7WLHYOS" --api-secret "W9pbChceKy0V99w9o+vOEhzLfxEMa5XgkK8lXbz30iGFR/XS5m+RCbGci3s3EEXv"

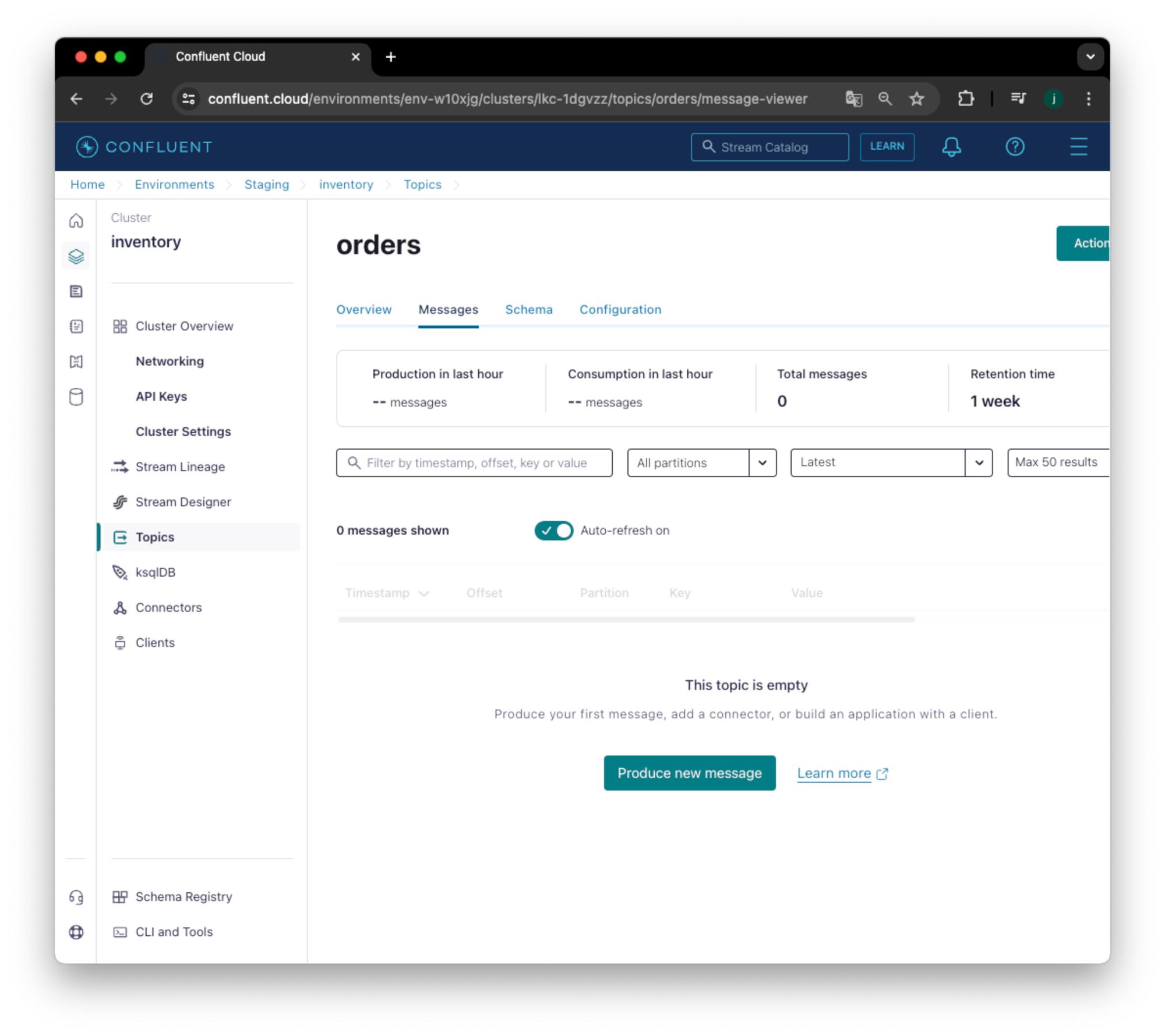

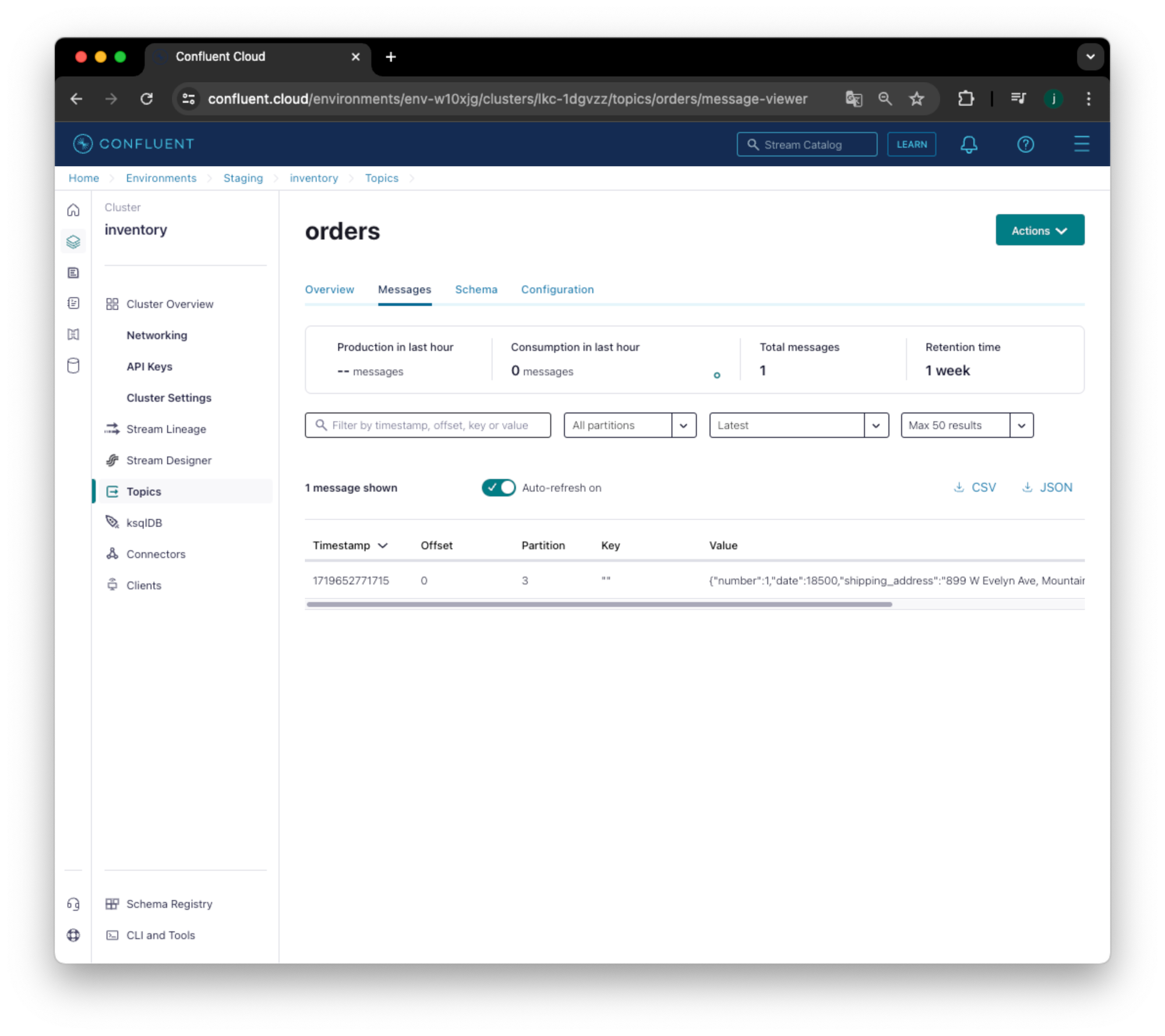

Starting Kafka Producer. Use Ctrl-C or Ctrl-D to exit.

At this point the topic is still empty.

Enter one sample message.

{"number":1,"date":18500,"shipping_address":"899 W Evelyn Ave, Mountain View, CA 94041, USA","cost":15.00}

As soon as the message is produced, the topic's message view updates.

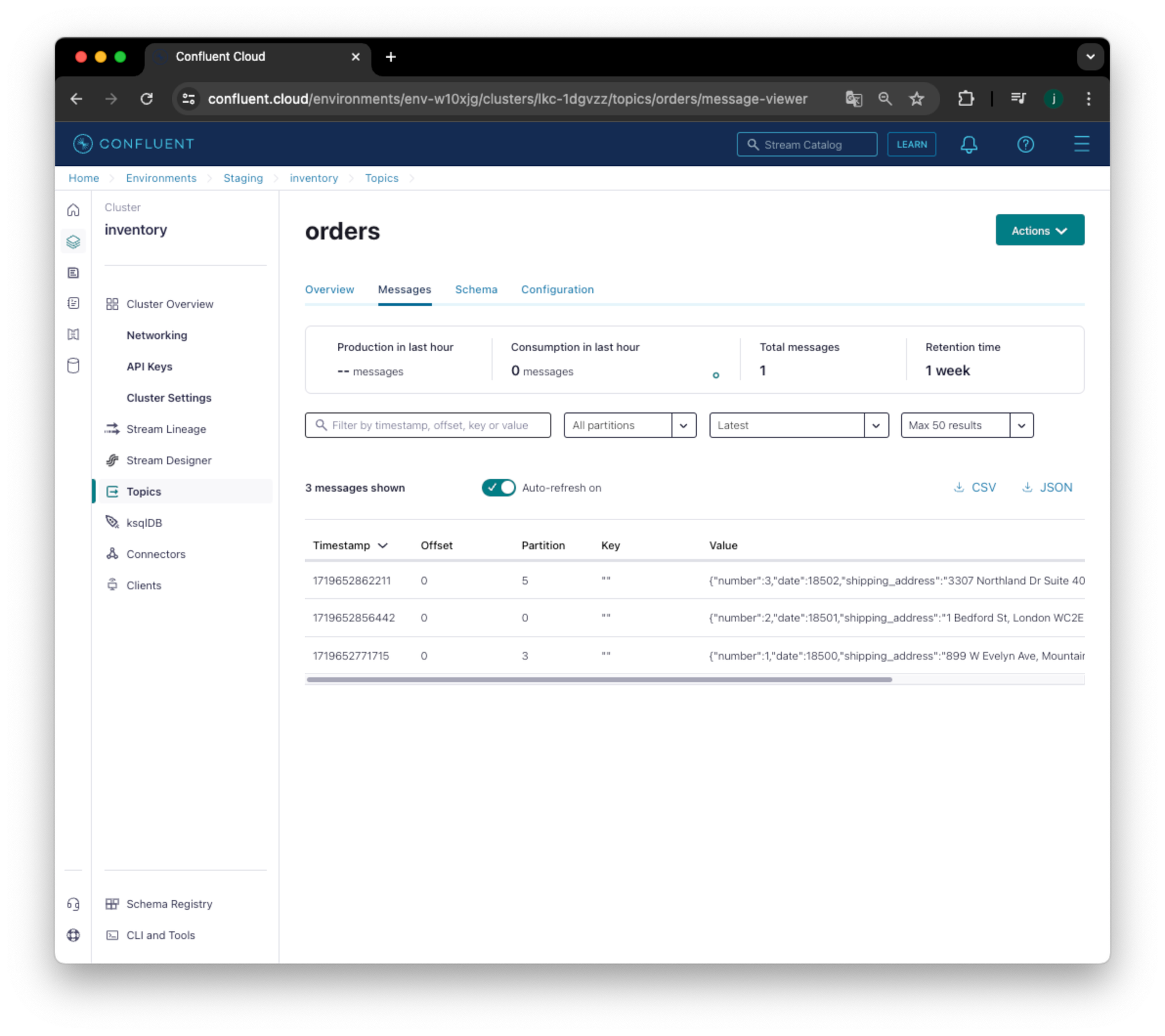

Enter the other two messages as well.

{"number":2,"date":18501,"shipping_address":"1 Bedford St, London WC2E 9HG, United Kingdom","cost":5.00}

{"number":3,"date":18502,"shipping_address":"3307 Northland Dr Suite 400, Austin, TX 78731, USA","cost":10.00}

The topic now shows the messages, including the partition number each message landed on and its stored value.
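In this lab the records are typed by hand, so a truncated paste is easy to miss. The sketch below is a rough pre-check, assuming the four keys used by the sample records; `has_order_fields` is a hypothetical helper that does a plain substring match and is not a JSON parser.

```shell
# Hypothetical helper: returns 0 when the record text contains all four keys
# used by the sample orders; a plain substring check, not a JSON parser.
has_order_fields() {
  record="$1"
  for field in number date shipping_address cost; do
    case "$record" in
      *"\"${field}\":"*) ;;
      *) return 1 ;;
    esac
  done
  return 0
}

record='{"number":2,"date":18501,"shipping_address":"1 Bedford St, London WC2E 9HG, United Kingdom","cost":5.00}'
if has_order_fields "$record"; then
  echo "record looks complete"
else
  echo "record is missing a field"
fi
```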

Now run a Kafka consumer to check the messages from the CLI.

- As before, run the command listed under "3. Consume records from topic 'orders' by using app-consumer's Kafka API Key".

Wrapping up the lab: destroy

That is all for this simple lab. Let's remove every deployed resource.

Resources deployed with terraform apply can be removed with terraform destroy.

terraform destroy

The full log is as follows.

terraform destroy

confluent_service_account.app-consumer: Refreshing state... [id=sa-02xo55]

confluent_service_account.app-producer: Refreshing state... [id=sa-2n2v51]

confluent_environment.staging: Refreshing state... [id=env-w10xjg]

confluent_service_account.app-manager: Refreshing state... [id=sa-rxrdv0]

data.confluent_schema_registry_region.essentials: Reading...

confluent_kafka_cluster.standard: Refreshing state... [id=lkc-1dgvzz]

data.confluent_schema_registry_region.essentials: Read complete after 0s [id=sgreg-1]

confluent_schema_registry_cluster.essentials: Refreshing state... [id=lsrc-n528nz]

confluent_role_binding.app-manager-kafka-cluster-admin: Refreshing state... [id=rb-54rEEy]

confluent_api_key.app-consumer-kafka-api-key: Refreshing state... [id=CPXXMHDM5NKKXEPV]

confluent_api_key.app-producer-kafka-api-key: Refreshing state... [id=3NSTHIB4E7WLHYOS]

confluent_role_binding.app-consumer-developer-read-from-group: Refreshing state... [id=rb-dLQllK]

confluent_api_key.app-manager-kafka-api-key: Refreshing state... [id=YQDCNRPAKBHH3HWR]

confluent_kafka_topic.orders: Refreshing state... [id=lkc-1dgvzz/orders]

confluent_role_binding.app-consumer-developer-read-from-topic: Refreshing state... [id=rb-3PrKW9]

confluent_role_binding.app-producer-developer-write: Refreshing state... [id=rb-Y1EYMl]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the

following symbols:

- destroy

Terraform will perform the following actions:

# confluent_api_key.app-consumer-kafka-api-key will be destroyed

- resource "confluent_api_key" "app-consumer-kafka-api-key" {

- description = "Kafka API Key that is owned by 'app-consumer' service account" -> null

- disable_wait_for_ready = false -> null

- display_name = "app-consumer-kafka-api-key" -> null

- id = "CPXXMHDM5NKKXEPV" -> null

- secret = (sensitive value) -> null

- managed_resource {

- api_version = "cmk/v2" -> null

- id = "lkc-1dgvzz" -> null

- kind = "Cluster" -> null

- environment {

- id = "env-w10xjg" -> null

}

}

- owner {

- api_version = "iam/v2" -> null

- id = "sa-02xo55" -> null

- kind = "ServiceAccount" -> null

}

}

# confluent_api_key.app-manager-kafka-api-key will be destroyed

- resource "confluent_api_key" "app-manager-kafka-api-key" {

- description = "Kafka API Key that is owned by 'app-manager' service account" -> null

- disable_wait_for_ready = false -> null

- display_name = "app-manager-kafka-api-key" -> null

- id = "YQDCNRPAKBHH3HWR" -> null

- secret = (sensitive value) -> null

- managed_resource {

- api_version = "cmk/v2" -> null

- id = "lkc-1dgvzz" -> null

- kind = "Cluster" -> null

- environment {

- id = "env-w10xjg" -> null

}

}

- owner {

- api_version = "iam/v2" -> null

- id = "sa-rxrdv0" -> null

- kind = "ServiceAccount" -> null

}

}

# confluent_api_key.app-producer-kafka-api-key will be destroyed

- resource "confluent_api_key" "app-producer-kafka-api-key" {

- description = "Kafka API Key that is owned by 'app-producer' service account" -> null

- disable_wait_for_ready = false -> null

- display_name = "app-producer-kafka-api-key" -> null

- id = "3NSTHIB4E7WLHYOS" -> null

- secret = (sensitive value) -> null

- managed_resource {

- api_version = "cmk/v2" -> null

- id = "lkc-1dgvzz" -> null

- kind = "Cluster" -> null

- environment {

- id = "env-w10xjg" -> null

}

}

- owner {

- api_version = "iam/v2" -> null

- id = "sa-2n2v51" -> null

- kind = "ServiceAccount" -> null

}

}

# confluent_environment.staging will be destroyed

- resource "confluent_environment" "staging" {

- display_name = "Staging" -> null

- id = "env-w10xjg" -> null

- resource_name = "crn://confluent.cloud/organization=a444b16f-2eed-49a6-8e61-cc6faf9cb820/environment=env-w10xjg" -> null

- stream_governance {

# (1 unchanged attribute hidden)

}

}

# confluent_kafka_cluster.standard will be destroyed

- resource "confluent_kafka_cluster" "standard" {

- api_version = "cmk/v2" -> null

- availability = "SINGLE_ZONE" -> null

- bootstrap_endpoint = "SASL_SSL://pkc-921jm.us-east-2.aws.confluent.cloud:9092" -> null

- cloud = "AWS" -> null

- display_name = "inventory" -> null

- id = "lkc-1dgvzz" -> null

- kind = "Cluster" -> null

- rbac_crn = "crn://confluent.cloud/organization=a444b16f-2eed-49a6-8e61-cc6faf9cb820/environment=env-w10xjg/cloud-cluster=lkc-1dgvzz" -> null

- region = "us-east-2" -> null

- rest_endpoint = "https://pkc-921jm.us-east-2.aws.confluent.cloud:443" -> null

- byok_key {

id = null

}

- environment {

- id = "env-w10xjg" -> null

}

- network {

id = null

}

- standard {}

}

# confluent_kafka_topic.orders will be destroyed

- resource "confluent_kafka_topic" "orders" {

- config = {} -> null

- id = "lkc-1dgvzz/orders" -> null

- partitions_count = 6 -> null

- rest_endpoint = "https://pkc-921jm.us-east-2.aws.confluent.cloud:443" -> null

- topic_name = "orders" -> null

- credentials {

- key = (sensitive value) -> null

- secret = (sensitive value) -> null

}

- kafka_cluster {

- id = "lkc-1dgvzz" -> null

}

}

# confluent_role_binding.app-consumer-developer-read-from-group will be destroyed

- resource "confluent_role_binding" "app-consumer-developer-read-from-group" {

- crn_pattern = "crn://confluent.cloud/organization=a444b16f-2eed-49a6-8e61-cc6faf9cb820/environment=env-w10xjg/cloud-cluster=lkc-1dgvzz/kafka=lkc-1dgvzz/group=confluent_cli_consumer_*" -> null

- id = "rb-dLQllK" -> null

- principal = "User:sa-02xo55" -> null

- role_name = "DeveloperRead" -> null

}

# confluent_role_binding.app-consumer-developer-read-from-topic will be destroyed

- resource "confluent_role_binding" "app-consumer-developer-read-from-topic" {

- crn_pattern = "crn://confluent.cloud/organization=a444b16f-2eed-49a6-8e61-cc6faf9cb820/environment=env-w10xjg/cloud-cluster=lkc-1dgvzz/kafka=lkc-1dgvzz/topic=orders" -> null

- id = "rb-3PrKW9" -> null

- principal = "User:sa-02xo55" -> null

- role_name = "DeveloperRead" -> null

}

# confluent_role_binding.app-manager-kafka-cluster-admin will be destroyed

- resource "confluent_role_binding" "app-manager-kafka-cluster-admin" {

- crn_pattern = "crn://confluent.cloud/organization=a444b16f-2eed-49a6-8e61-cc6faf9cb820/environment=env-w10xjg/cloud-cluster=lkc-1dgvzz" -> null

- id = "rb-54rEEy" -> null

- principal = "User:sa-rxrdv0" -> null

- role_name = "CloudClusterAdmin" -> null

}

# confluent_role_binding.app-producer-developer-write will be destroyed

- resource "confluent_role_binding" "app-producer-developer-write" {

- crn_pattern = "crn://confluent.cloud/organization=a444b16f-2eed-49a6-8e61-cc6faf9cb820/environment=env-w10xjg/cloud-cluster=lkc-1dgvzz/kafka=lkc-1dgvzz/topic=orders" -> null

- id = "rb-Y1EYMl" -> null

- principal = "User:sa-2n2v51" -> null

- role_name = "DeveloperWrite" -> null

}

# confluent_schema_registry_cluster.essentials will be destroyed

- resource "confluent_schema_registry_cluster" "essentials" {

- api_version = "srcm/v2" -> null

- display_name = "Stream Governance Package" -> null

- id = "lsrc-n528nz" -> null

- kind = "Cluster" -> null

- package = "ESSENTIALS" -> null

- resource_name = "crn://confluent.cloud/organization=a444b16f-2eed-49a6-8e61-cc6faf9cb820/environment=env-w10xjg/schema-registry=lsrc-n528nz" -> null

- rest_endpoint = "https://psrc-knmwm.us-east-2.aws.confluent.cloud" -> null

- environment {

- id = "env-w10xjg" -> null

}

- region {

- id = "sgreg-1" -> null

}

}

# confluent_service_account.app-consumer will be destroyed

- resource "confluent_service_account" "app-consumer" {

- api_version = "iam/v2" -> null

- description = "Service account to consume from 'orders' topic of 'inventory' Kafka cluster" -> null

- display_name = "app-consumer" -> null

- id = "sa-02xo55" -> null

- kind = "ServiceAccount" -> null

}

# confluent_service_account.app-manager will be destroyed

- resource "confluent_service_account" "app-manager" {

- api_version = "iam/v2" -> null

- description = "Service account to manage 'inventory' Kafka cluster" -> null

- display_name = "app-manager" -> null

- id = "sa-rxrdv0" -> null

- kind = "ServiceAccount" -> null

}

# confluent_service_account.app-producer will be destroyed

- resource "confluent_service_account" "app-producer" {

- api_version = "iam/v2" -> null

- description = "Service account to produce to 'orders' topic of 'inventory' Kafka cluster" -> null

- display_name = "app-producer" -> null

- id = "sa-2n2v51" -> null

- kind = "ServiceAccount" -> null

}

Plan: 0 to add, 0 to change, 14 to destroy.

Changes to Outputs:

- resource-ids = (sensitive value) -> null

╷

│ Warning: Deprecated Resource

│

│ with data.confluent_schema_registry_region.essentials,

│ on main.tf line 19, in data "confluent_schema_registry_region" "essentials":

│ 19: data "confluent_schema_registry_region" "essentials" {

│

│ The "schema_registry_region" data source has been deprecated and will be removed in the next major version of the provider

│ (2.0.0). Refer to the Upgrade Guide at

│ https://registry.terraform.io/providers/confluentinc/confluent/latest/docs/guides/version-2-upgrade for more details. The

│ guide will be published once version 2.0.0 is released.

│

│ (and one more similar warning elsewhere)

╵

Do you really want to destroy all resources?

Terraform will destroy all your managed infrastructure, as shown above.

There is no undo. Only 'yes' will be accepted to confirm.

Enter a value: yes

confluent_role_binding.app-producer-developer-write: Destroying... [id=rb-Y1EYMl]

confluent_api_key.app-consumer-kafka-api-key: Destroying... [id=CPXXMHDM5NKKXEPV]

confluent_role_binding.app-consumer-developer-read-from-topic: Destroying... [id=rb-3PrKW9]

confluent_role_binding.app-consumer-developer-read-from-group: Destroying... [id=rb-dLQllK]

confluent_api_key.app-producer-kafka-api-key: Destroying... [id=3NSTHIB4E7WLHYOS]

confluent_schema_registry_cluster.essentials: Destroying... [id=lsrc-n528nz]

confluent_api_key.app-consumer-kafka-api-key: Destruction complete after 0s

confluent_role_binding.app-consumer-developer-read-from-topic: Destruction complete after 0s

confluent_role_binding.app-producer-developer-write: Destruction complete after 0s

confluent_kafka_topic.orders: Destroying... [id=lkc-1dgvzz/orders]

confluent_api_key.app-producer-kafka-api-key: Destruction complete after 0s

confluent_service_account.app-producer: Destroying... [id=sa-2n2v51]

confluent_role_binding.app-consumer-developer-read-from-group: Destruction complete after 0s

confluent_service_account.app-consumer: Destroying... [id=sa-02xo55]

confluent_schema_registry_cluster.essentials: Destruction complete after 0s

confluent_service_account.app-consumer: Destruction complete after 1s

confluent_service_account.app-producer: Destruction complete after 1s

confluent_kafka_topic.orders: Still destroying... [id=lkc-1dgvzz/orders, 10s elapsed]

confluent_kafka_topic.orders: Destruction complete after 11s

confluent_api_key.app-manager-kafka-api-key: Destroying... [id=YQDCNRPAKBHH3HWR]

confluent_api_key.app-manager-kafka-api-key: Destruction complete after 1s

confluent_role_binding.app-manager-kafka-cluster-admin: Destroying... [id=rb-54rEEy]

confluent_role_binding.app-manager-kafka-cluster-admin: Destruction complete after 0s

confluent_service_account.app-manager: Destroying... [id=sa-rxrdv0]

confluent_kafka_cluster.standard: Destroying... [id=lkc-1dgvzz]

confluent_service_account.app-manager: Destruction complete after 0s

confluent_kafka_cluster.standard: Destruction complete after 2s

confluent_environment.staging: Destroying... [id=env-w10xjg]

confluent_environment.staging: Destruction complete after 0s

Destroy complete! Resources: 14 destroyed.
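With a log this long, the quickest sanity check is that the number of planned deletions matches the number actually destroyed. A minimal sketch, assuming the log was saved as plain text; the helper names are hypothetical and the two sample lines are copied from the log above.

```shell
# Hypothetical helpers: pull the resource counts out of a saved destroy log.
planned_destroy_count() {
  sed -n 's/^Plan: .* \([0-9][0-9]*\) to destroy\.$/\1/p'
}
destroyed_count() {
  sed -n 's/^Destroy complete! Resources: \([0-9][0-9]*\) destroyed\.$/\1/p'
}

# Two lines copied from the log above.
log='Plan: 0 to add, 0 to change, 14 to destroy.
Destroy complete! Resources: 14 destroyed.'

planned=$(printf '%s\n' "$log" | planned_destroy_count)
destroyed=$(printf '%s\n' "$log" | destroyed_count)
if [ "$planned" = "$destroyed" ]; then
  echo "all ${destroyed} planned resources were destroyed"
else
  echo "mismatch: planned ${planned}, destroyed ${destroyed}"
fi
```

A real log could be captured with something like `terraform destroy -no-color | tee destroy.log`; the `-no-color` flag keeps color codes out of the saved text so the patterns match.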

In closing

In this post we deployed a simple Kafka cluster, a topic, and service accounts, then practiced passing messages with a producer and a consumer.

The next post will cover a wider range of topics.

The end!
