Note

This post is based on material covered in the DOIK2 study group. I am still learning this material, so some parts may be incorrect.

CloudNativePG

This post covers CloudNativePG.

You can think of it as a project for managing PostgreSQL workloads on Kubernetes.

Link: https://cloudnative-pg.io/documentation/current/

From the official site (cloudnative-pg.io): "CloudNativePG is an open source operator designed to manage PostgreSQL workloads on any supported Kubernetes cluster running in private, public, hybrid, or multi-cloud environments."

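First, let's take a brief look at PostgreSQL.

PostgreSQL is usually pronounced either "Postgres-Q-L" or "Postgres-sequel". The official PostgreSQL FAQ gives "Post-Gres-Q-L", and the short form "Postgres" is also widely used.

PostgreSQL processes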

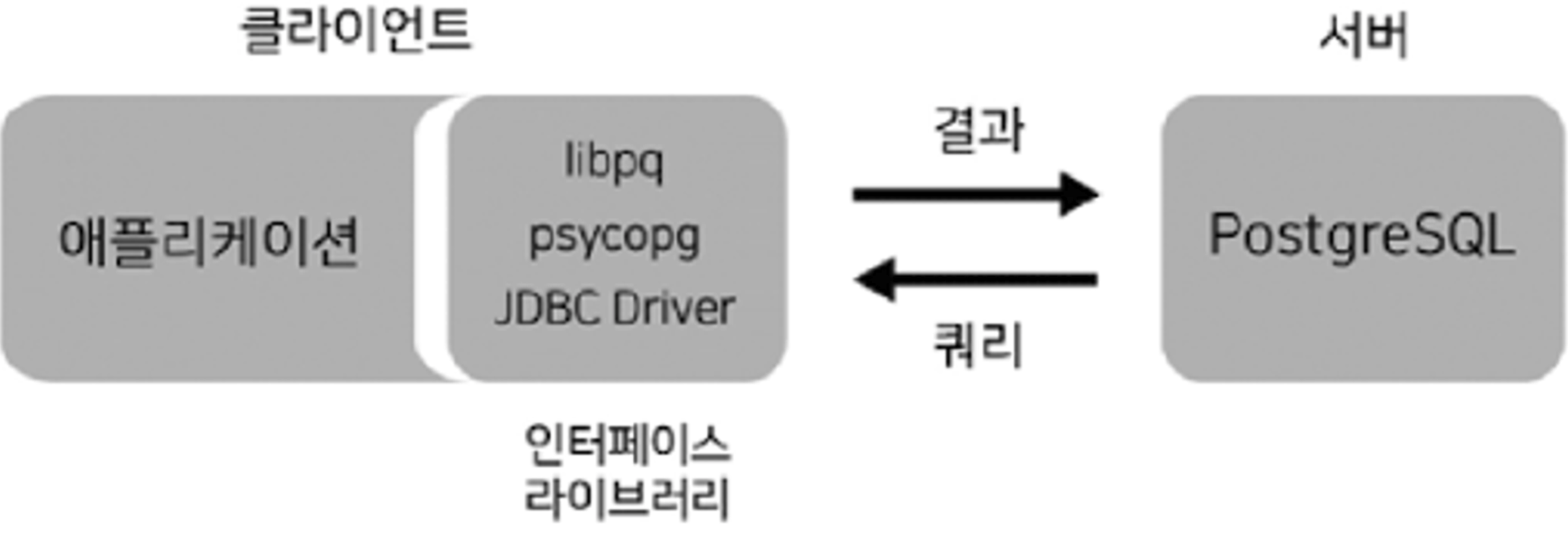

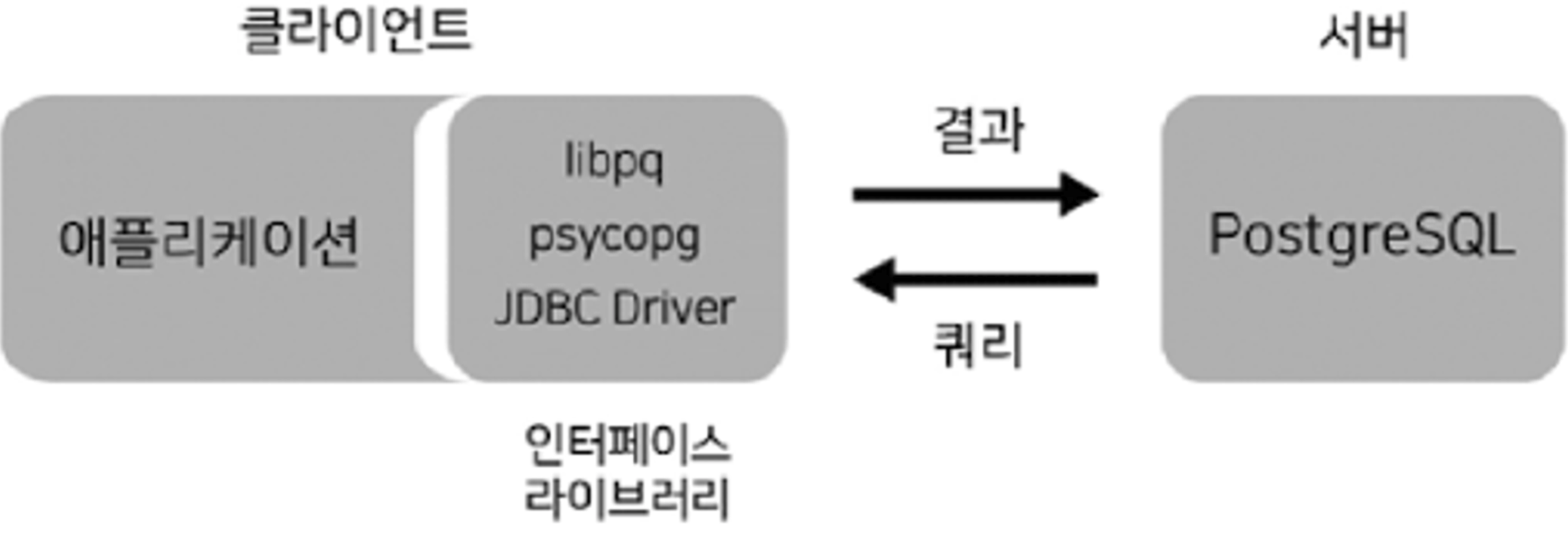

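Architecture: client-server

General concept: the database hierarchy

Cluster: a collection of databases

Schema: a logical collection of objects (e.g. tables, views, indexes, …)

- In PostgreSQL, a collection of tables (and other objects) forms a schema.

- A collection of schemas forms a database.

- In MySQL, a collection of tables is called a database (MySQL treats SCHEMA as a synonym for DATABASE).

Table: the most basic structure

- Depending on the engine, table data is stored row-based or column-based (PostgreSQL's standard storage is row-based).

- In relational terms, a table is called a relation.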
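To make the containment concrete, here is a minimal Python sketch that models cluster → database → schema → table and resolves a `database.schema.table` name. The names `app`, `public`, `users`, and `resolve` are hypothetical. The sketch only illustrates the hierarchy. It does not model how PostgreSQL actually resolves names, since a connection is bound to one database and unqualified names go through `search_path`.

```python
from dataclasses import dataclass, field


@dataclass
class Table:
    name: str
    columns: list[str]


@dataclass
class Schema:
    # A schema is a logical collection of objects such as tables
    name: str
    tables: dict[str, Table] = field(default_factory=dict)


@dataclass
class Database:
    # A database is a collection of schemas
    name: str
    schemas: dict[str, Schema] = field(default_factory=dict)


@dataclass
class Cluster:
    # A cluster is the collection of databases served by one PostgreSQL server
    databases: dict[str, Database] = field(default_factory=dict)

    def resolve(self, qualified_name: str) -> Table:
        # Walk the hierarchy for a "database.schema.table" name
        db_name, schema_name, table_name = qualified_name.split(".")
        return self.databases[db_name].schemas[schema_name].tables[table_name]


# Hypothetical example data
users = Table("users", ["id", "email"])
cluster = Cluster({"app": Database("app", {"public": Schema("public", {"users": users})})})
print(cluster.resolve("app.public.users").columns)  # ['id', 'email']
```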

Introducing CloudNativePG

What the name CloudNativePG means

CloudNative + PostgreSQL: the name signals PostgreSQL built to run in a cloud-native way.

Key features

- Built-in HA: Direct integration with Kubernetes API server for High Availability, without requiring an external tool

- Self-healing: Self-Healing capability, through:

  - failover of the primary instance by promoting the most aligned replica

  - automated recreation of a replica

- Switchover: Planned switchover of the primary instance by promoting a selected replica
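Failover "by promoting the most aligned replica" can be pictured as picking the standby whose WAL position (LSN) is furthest ahead. The Python sketch below is only an illustration of that idea and is not CloudNativePG's actual election logic. The pod names and LSN values are hypothetical. PostgreSQL prints an LSN as two hexadecimal halves `X/Y`, and the 64-bit position is `(X << 32) | Y`.

```python
def parse_lsn(lsn: str) -> int:
    # PostgreSQL shows an LSN as "high/low" in hex; combine into one 64-bit integer
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def most_aligned_replica(replicas: dict[str, str]) -> str:
    # Pick the replica that has received/replayed the most WAL
    return max(replicas, key=lambda name: parse_lsn(replicas[name]))


# Hypothetical positions reported by each standby
standbys = {
    "cluster-example-2": "0/6031680",
    "cluster-example-3": "0/5000098",
}
print(most_aligned_replica(standbys))  # cluster-example-2
```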

PostgreSQL Architecture

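Replication strategy: application-level replication

- Storage-level replication is not recommended for PostgreSQL; it relies on its own native replication instead.

WAL (Write-Ahead Log):

- PostgreSQL uses it to keep data consistent.

- HA and scaling are built on top of the WAL.

- Log: a record of every change made in the DBMS. Wherever the logs are stored, there must be a guarantee that they are never lost.

- WAL file

  - A file to which changes are written before the data itself is modified.

  - Because every data change follows the order [write the change to the log → modify the data], the database can recover even if a failure occurs in the middle of a change.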
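The write-ahead ordering can be shown with a toy key-value store in Python. This minimal sketch only demonstrates the principle: log first, then apply, and on restart replay the log. It assumes a JSON-lines file named `toy.wal` (hypothetical). PostgreSQL's real WAL is a binary format with LSNs and checkpoints.

```python
import json
import os


class ToyWalStore:
    """Toy key-value store that follows the write-ahead rule."""

    def __init__(self, wal_path: str):
        self.wal_path = wal_path
        self.data: dict[str, str] = {}
        self._replay()

    def _replay(self) -> None:
        # Crash recovery: rebuild the data by replaying every logged change
        if not os.path.exists(self.wal_path):
            return
        with open(self.wal_path, encoding="utf-8") as wal:
            for line in wal:
                record = json.loads(line)
                self.data[record["key"]] = record["value"]

    def set(self, key: str, value: str) -> None:
        # 1) Append the change to the log and force it to disk first
        with open(self.wal_path, "a", encoding="utf-8") as wal:
            wal.write(json.dumps({"key": key, "value": value}) + "\n")
            wal.flush()
            os.fsync(wal.fileno())
        # 2) Only then modify the data
        self.data[key] = value


store = ToyWalStore("toy.wal")
store.set("balance", "100")
# Simulate a crash: in-memory data is lost, but the log survives
recovered = ToyWalStore("toy.wal")
print(recovered.data["balance"])  # 100
```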

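Streaming replication: PostgreSQL supports streaming replication in either asynchronous or synchronous mode.

- Replica servers run as hot standbys, so they can only serve read queries.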
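The practical difference between the two modes is when the primary reports a commit as done. Below is a simplified Python model. It assumes WAL positions are plain integers and that "synchronous" means waiting until `num_sync` standbys have flushed the commit's WAL. The function is hypothetical and only loosely mirrors the idea behind PostgreSQL's `synchronous_standby_names` setting.

```python
def commit_acknowledged(commit_lsn: int, local_flush_lsn: int,
                        standby_flush_lsns: list[int], synchronous: bool,
                        num_sync: int = 1) -> bool:
    # Every commit must first be durable in the primary's own WAL
    if local_flush_lsn < commit_lsn:
        return False
    if not synchronous:
        # Asynchronous: return to the client without waiting for standbys
        return True
    # Synchronous: also wait until enough standbys have flushed this position
    caught_up = sum(1 for lsn in standby_flush_lsns if lsn >= commit_lsn)
    return caught_up >= num_sync


print(commit_acknowledged(200, 200, [150], synchronous=False))  # True
print(commit_acknowledged(200, 200, [150], synchronous=True))   # False
```

In asynchronous mode the commit returns immediately. A failover right after it can therefore lose that transaction if no standby has received it yet. Synchronous mode trades commit latency for that guarantee.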

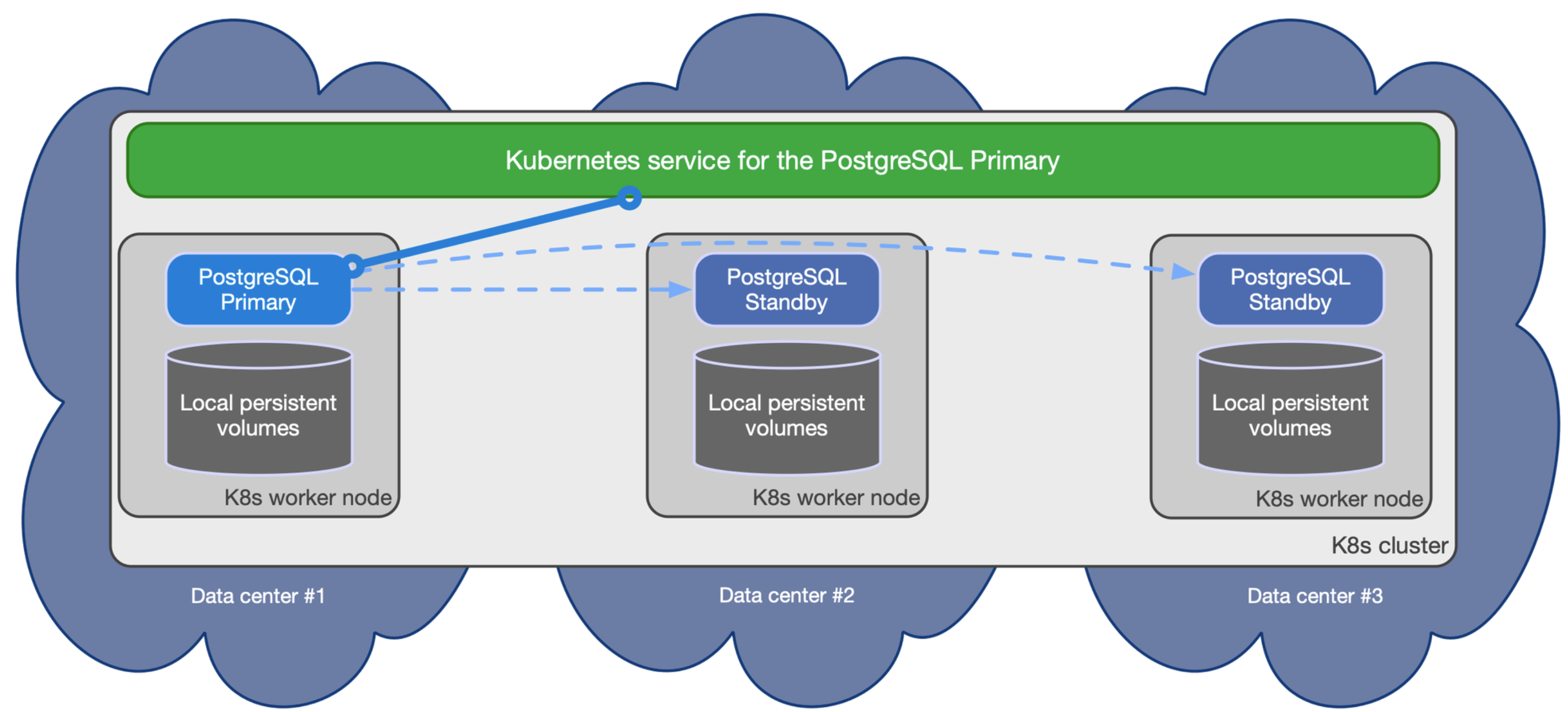

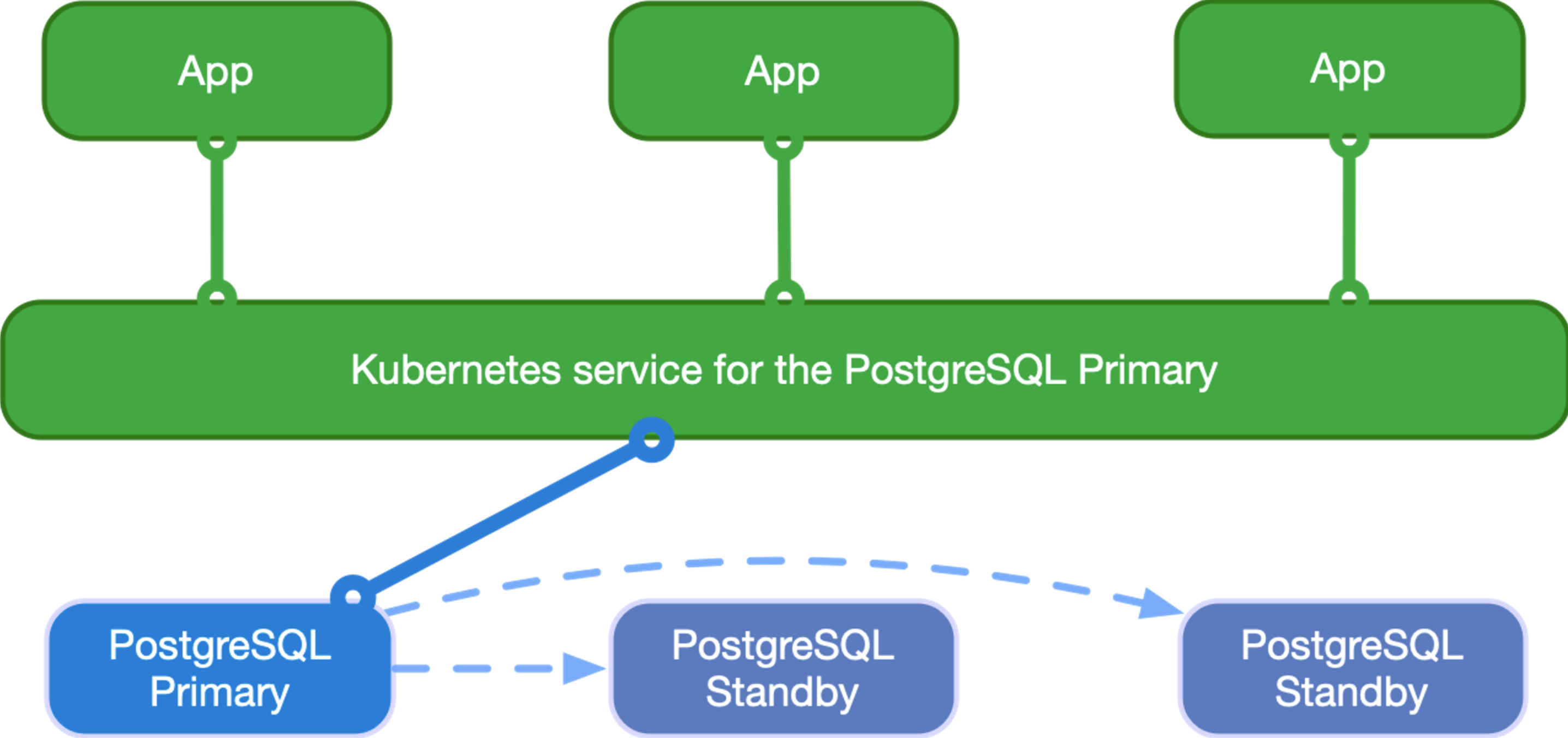

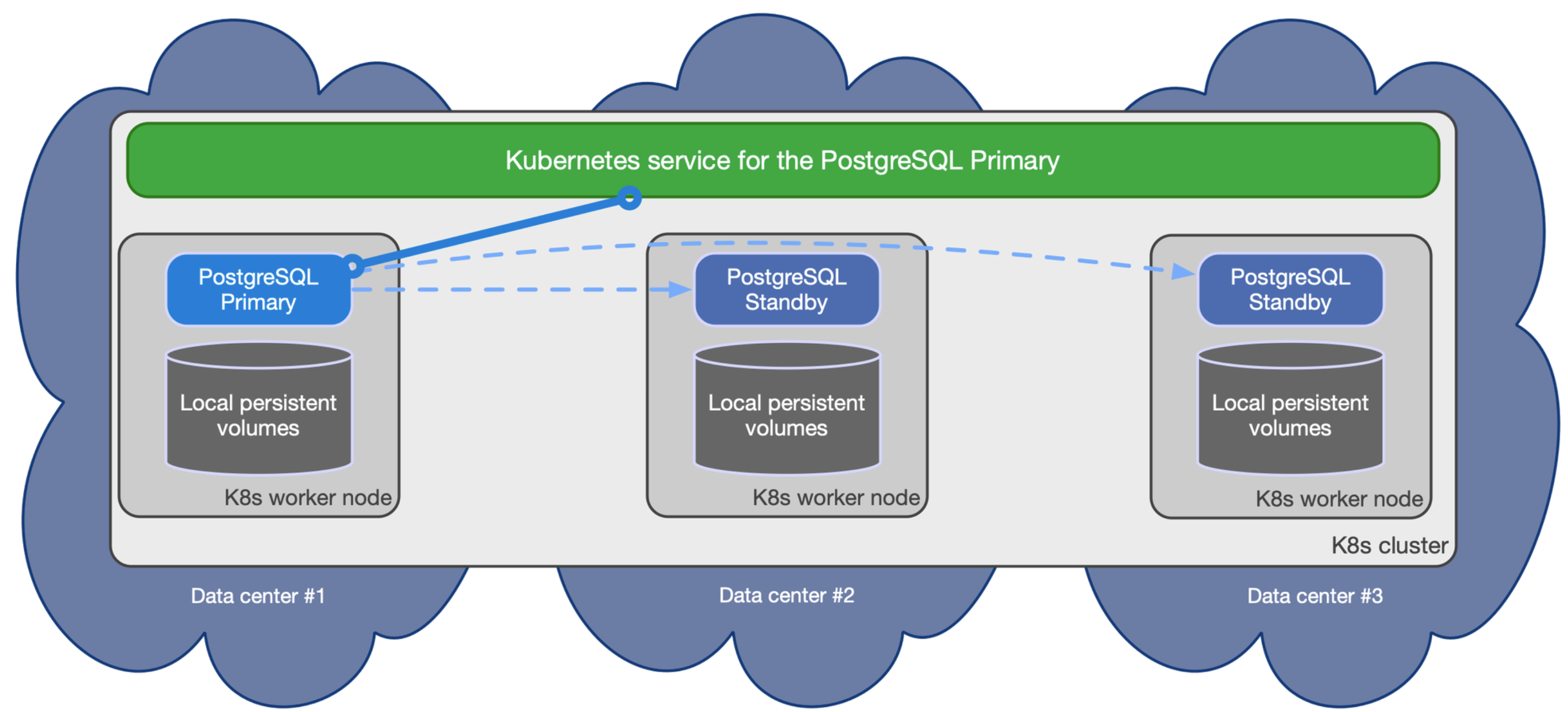

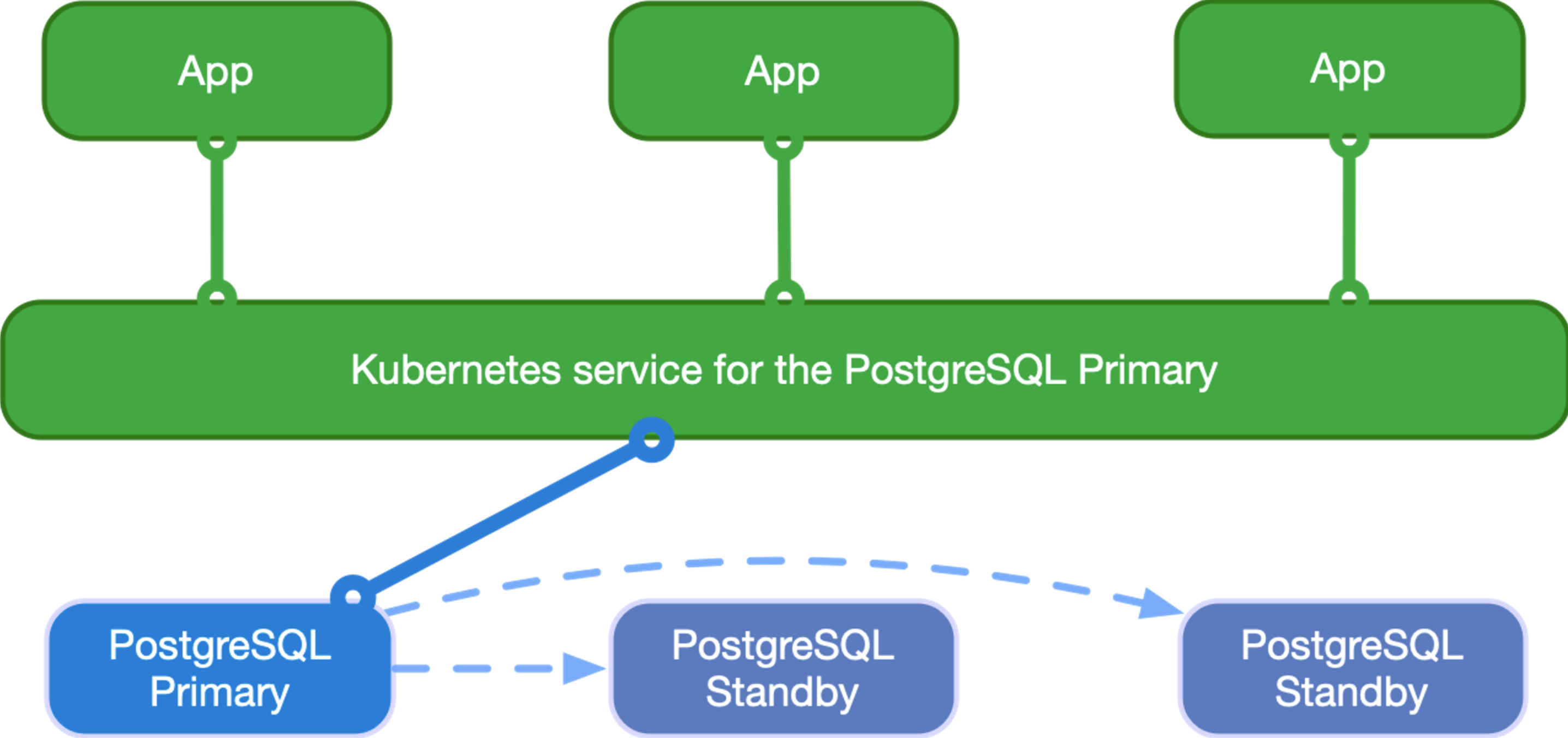

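CloudNativePG HA configuration

CloudNativePG: asynchronous/synchronous streaming setup

- HA setup: one primary + multiple standbys

Primary: RW (read/write)

Applications can connect to the PostgreSQL instance that the Kubernetes operator has elected as the current primary and send read/write (rw) requests to it.

This is the Service whose name ends in -rw (e.g. cluster-example-rw).

Standby: RO (read-only)

Applications can connect to the PostgreSQL hot standbys and send read-only (ro) requests to them.

These are the Services whose names end in -ro or -r. -ro routes only to the standbys, while -r routes to any instance, including the primary.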
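The routing rules of the three Services can be summarized in a few lines of Python. The function is hypothetical and just restates the rules above. The IPs come from the `kubectl get ep` output later in this post. Which standby pod owns which IP is an assumption, since that output does not say.

```python
def service_endpoints(cluster: str, instances: dict[str, str], primary: str) -> dict[str, list[str]]:
    """Map each Service name to the instance IPs it routes to."""
    standbys = [ip for name, ip in instances.items() if name != primary]
    return {
        f"{cluster}-rw": [instances[primary]],     # primary only: read/write
        f"{cluster}-ro": standbys,                 # hot standbys only: read-only
        f"{cluster}-r": list(instances.values()),  # any instance: read
    }


# Pod-to-IP mapping for the standbys is assumed
pods = {
    "cluster-example-1": "10.116.1.7",
    "cluster-example-2": "10.116.4.15",
    "cluster-example-3": "10.116.5.9",
}
for service, ips in service_endpoints("cluster-example", pods, "cluster-example-1").items():
    print(service, ips)
```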

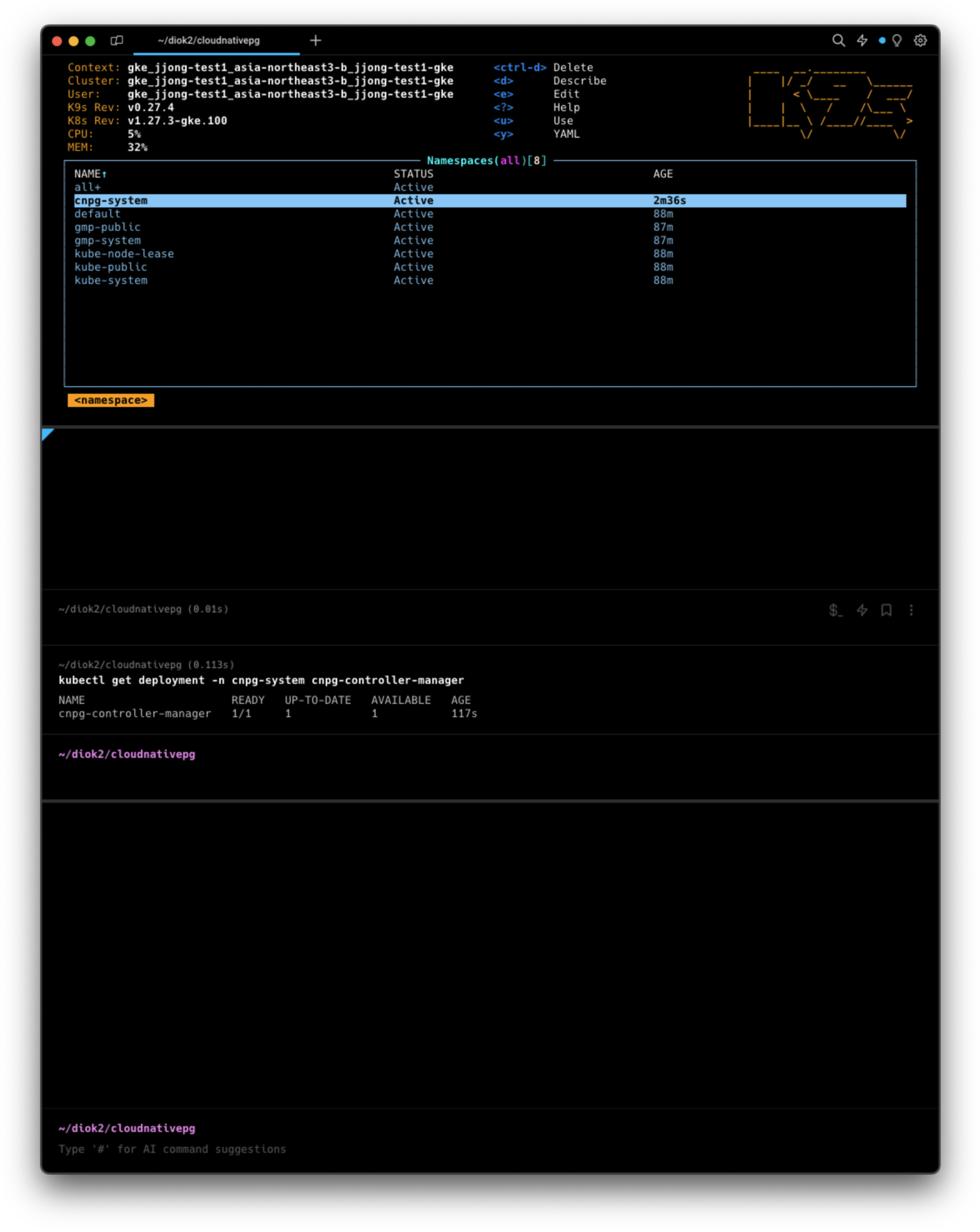

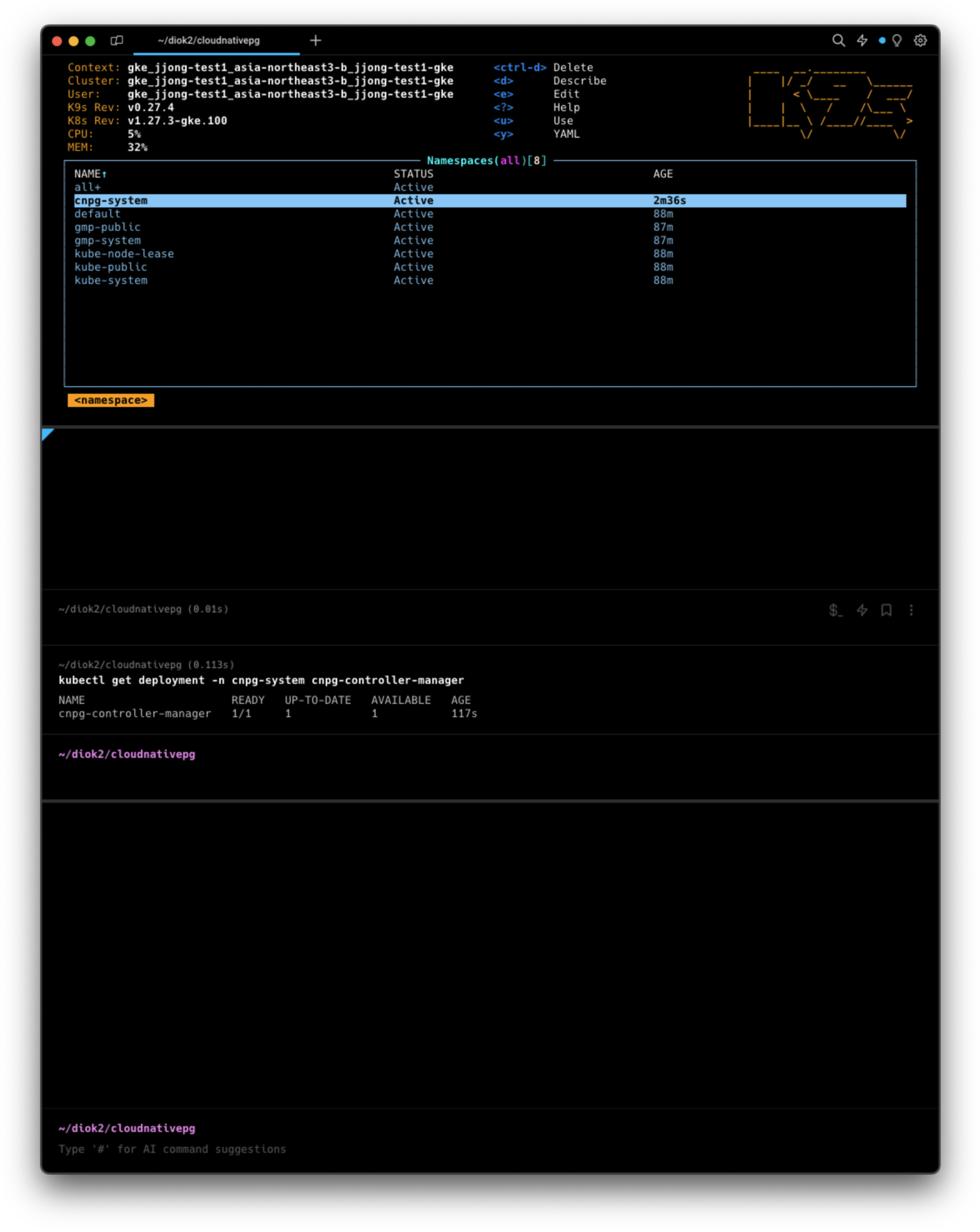

Installing CloudNativePG

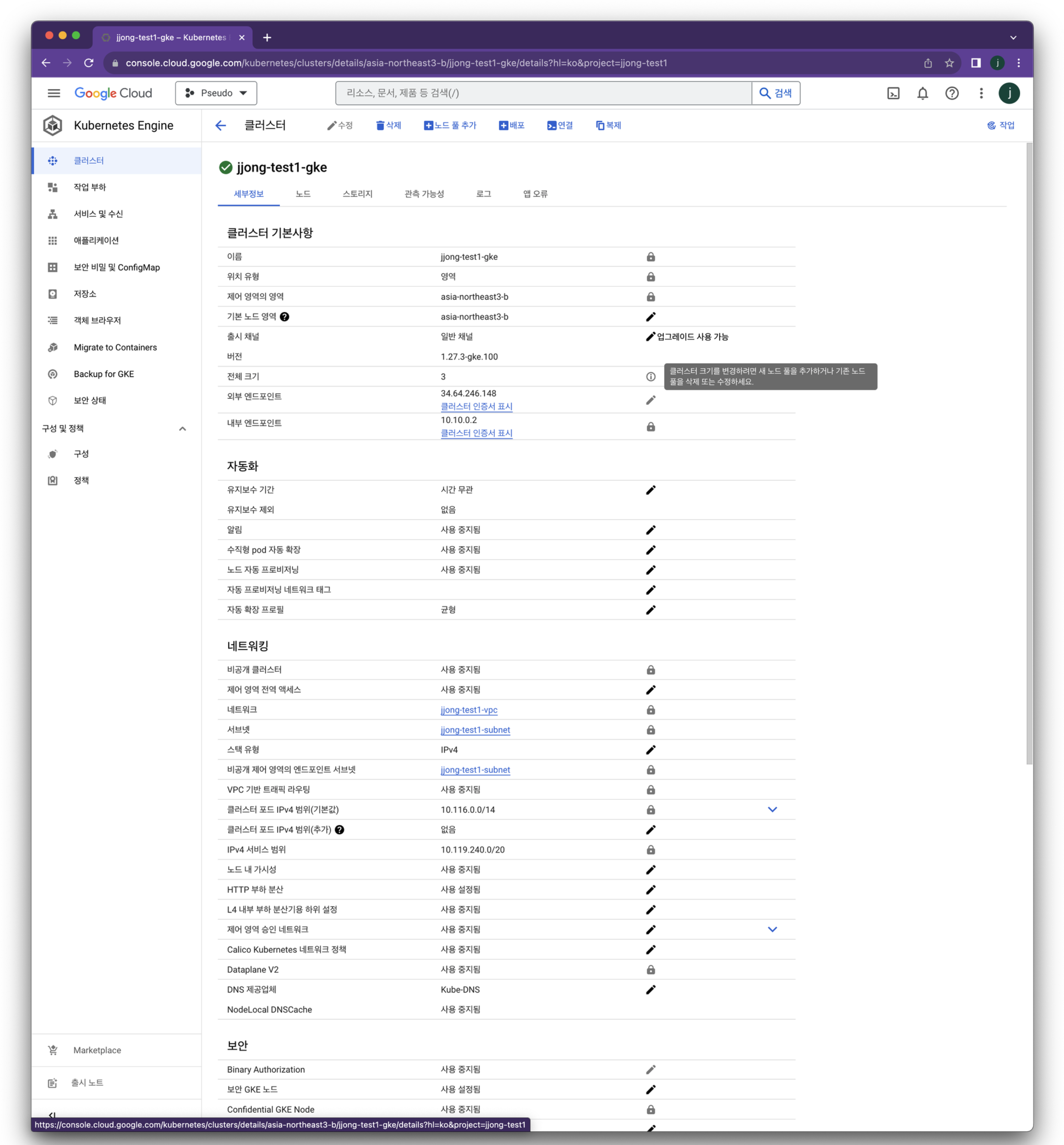

The lab environment is as follows.

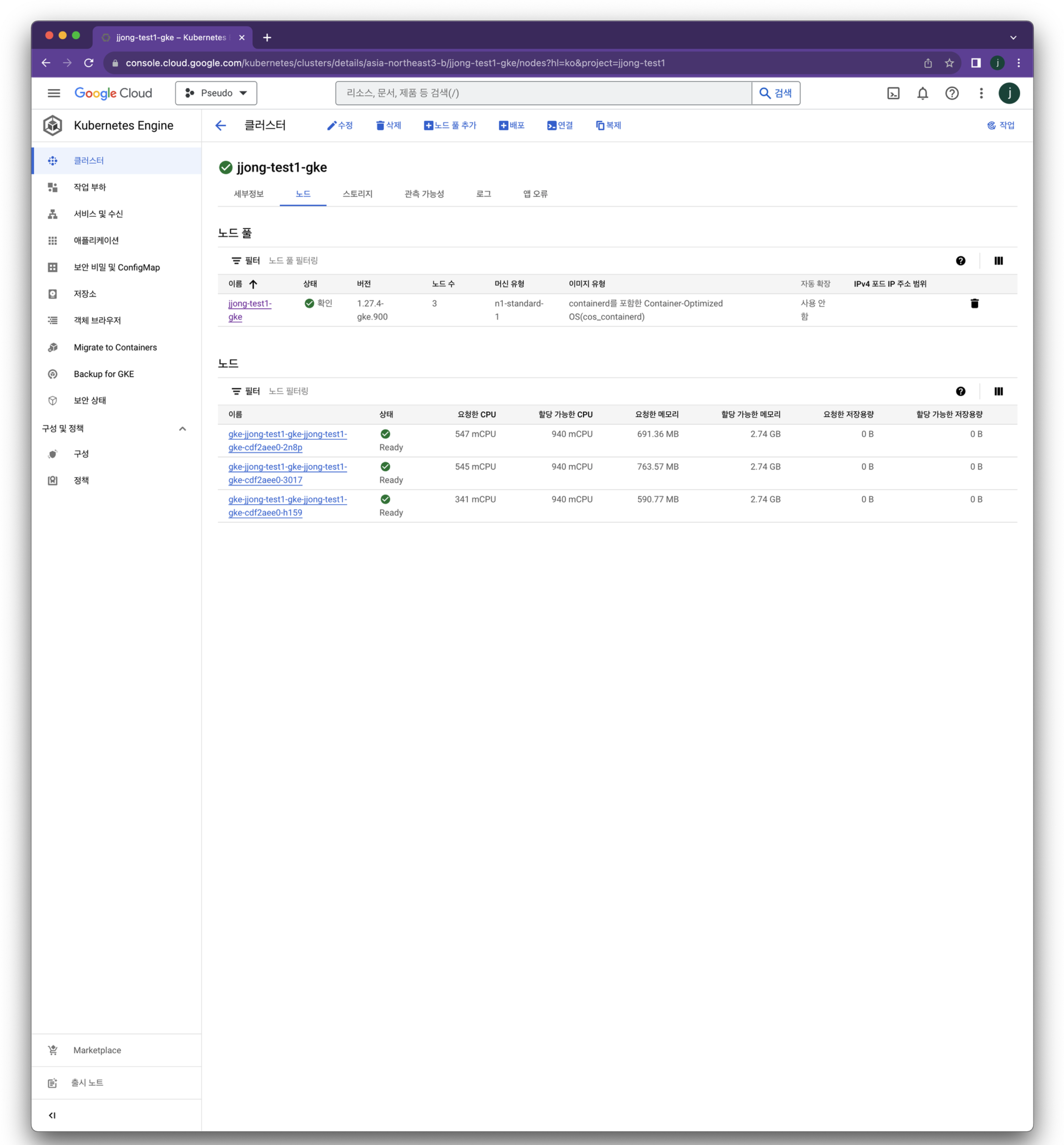

- The lab runs on GKE with a free-tier account, using 5 nodes.

- Deploying GKE itself is not covered.

- Because of an issue during installation, the node pool size (number of nodes) is later reduced from 5 to 3.

- kubectl is installed locally and uses the cluster credentials provided by GKE.

- k9s is used to work with the CLI more efficiently.

- k is aliased to kubectl.

- Work is done in the local ~/diok2/cloudnativepg directory.

- This post follows the official GitHub docs.

Checking the GKE information

Deploying the operator manifest

```shell
# Download the manifest
curl -LO https://raw.githubusercontent.com/cloudnative-pg/cloudnative-pg/release-1.21/releases/cnpg-1.21.0.yaml

# Deploy it
k apply -f cnpg-1.21.0.yaml

# Verify
kubectl get deployment -n cnpg-system cnpg-controller-manager
NAME                      READY   UP-TO-DATE   AVAILABLE   AGE
cnpg-controller-manager   1/1     1            1           57s
```
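The READY column above is what tells you the operator is up. As a small sketch, the Python function below checks that column in text output like the one shown. The function name is hypothetical. In practice, `kubectl rollout status deployment/cnpg-controller-manager -n cnpg-system` does the waiting for you.

```python
def deployment_ready(kubectl_output: str, name: str) -> bool:
    """Return True if the READY column of `kubectl get deployment` shows all replicas up."""
    lines = kubectl_output.strip().splitlines()
    ready_col = lines[0].split().index("READY")
    for line in lines[1:]:
        fields = line.split()
        if fields and fields[0] == name:
            ready, desired = (int(n) for n in fields[ready_col].split("/"))
            return desired > 0 and ready == desired
    return False


# Output copied from the verification step above
sample = """\
NAME                      READY   UP-TO-DATE   AVAILABLE   AGE
cnpg-controller-manager   1/1     1            1           57s
"""
print(deployment_ready(sample, "cnpg-controller-manager"))  # True
```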

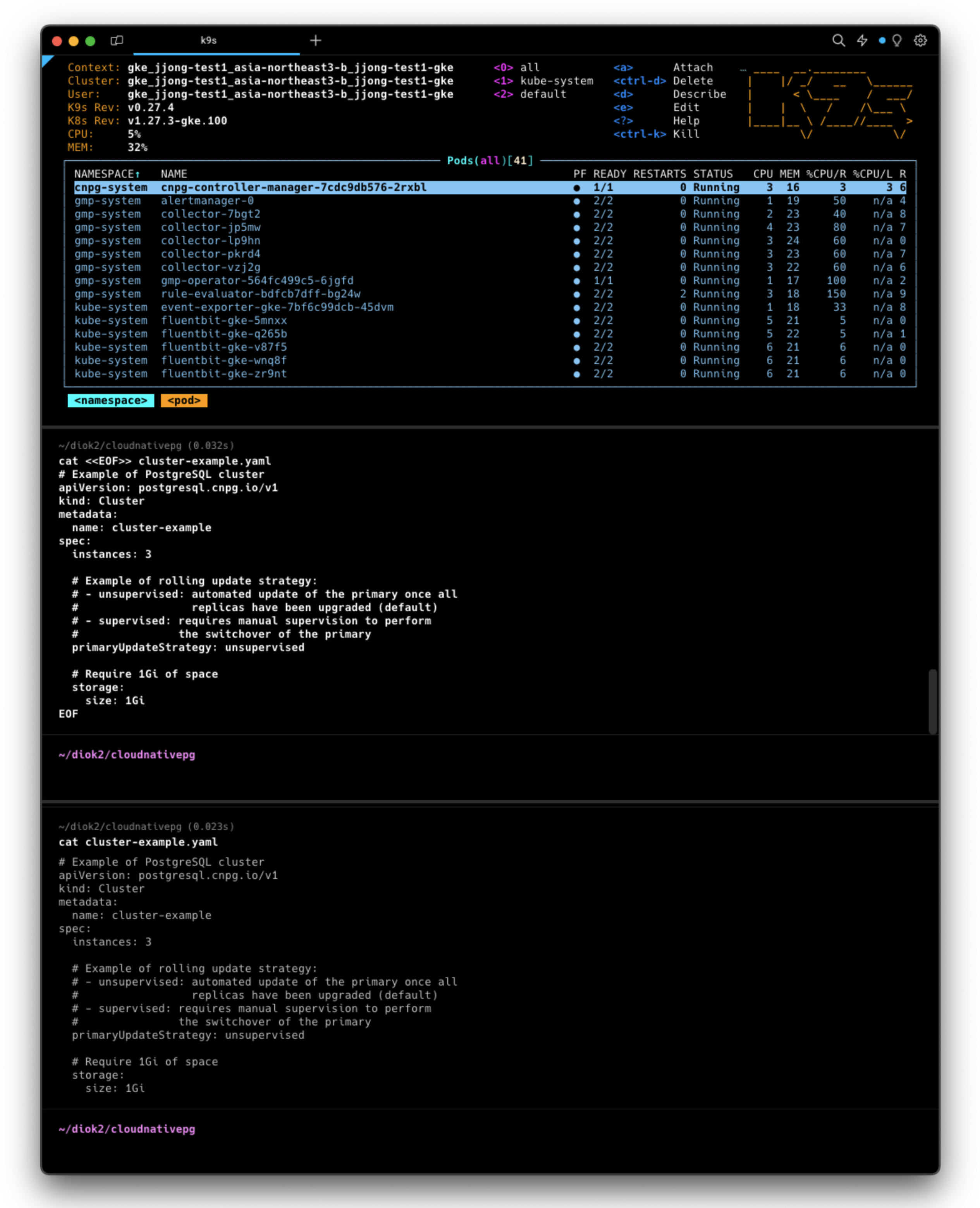

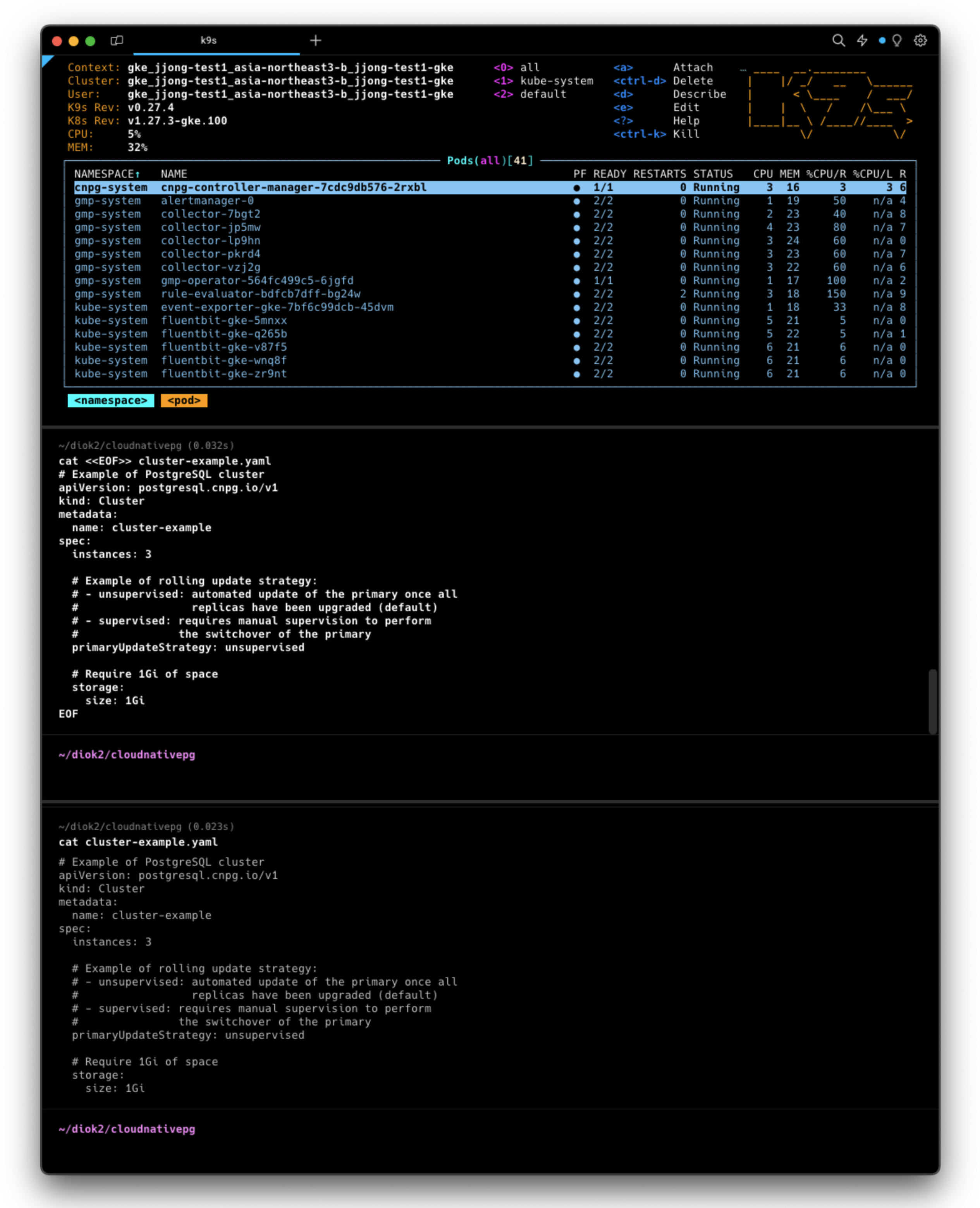

Deploy PostgreSQL Cluster

- Keep in mind that the cluster deployed here is named cluster-example.

```shell
# Create the manifest file (">" overwrites, so re-running does not duplicate the content)
cat <<EOF > cluster-example.yaml
# Example of PostgreSQL cluster
apiVersion: postgresql.cnpg.io/v1
kind: Cluster
metadata:
  name: cluster-example
spec:
  instances: 3

  # Example of rolling update strategy:
  # - unsupervised: automated update of the primary once all
  #                 replicas have been upgraded (default)
  # - supervised: requires manual supervision to perform
  #               the switchover of the primary
  primaryUpdateStrategy: unsupervised

  # Require 1Gi of space
  storage:
    size: 1Gi
EOF

# Deploy
kubectl apply -f cluster-example.yaml
```
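You can also generate the manifest instead of writing it with a heredoc. The same Cluster can be built as a Python dictionary and written as JSON, which `kubectl apply -f` also accepts. This is a minimal sketch. The helper `cluster_manifest` and the file name `cluster-example.json` are hypothetical, and the fields simply mirror cluster-example.yaml.

```python
import json


def cluster_manifest(name: str, instances: int = 3, storage_size: str = "1Gi",
                     primary_update_strategy: str = "unsupervised") -> dict:
    # Same fields as the cluster-example.yaml manifest
    return {
        "apiVersion": "postgresql.cnpg.io/v1",
        "kind": "Cluster",
        "metadata": {"name": name},
        "spec": {
            "instances": instances,
            # "unsupervised" (default) or "supervised"
            "primaryUpdateStrategy": primary_update_strategy,
            "storage": {"size": storage_size},
        },
    }


manifest = cluster_manifest("cluster-example")
with open("cluster-example.json", "w", encoding="utf-8") as f:
    json.dump(manifest, f, indent=2)
# Then apply it with: kubectl apply -f cluster-example.json
```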

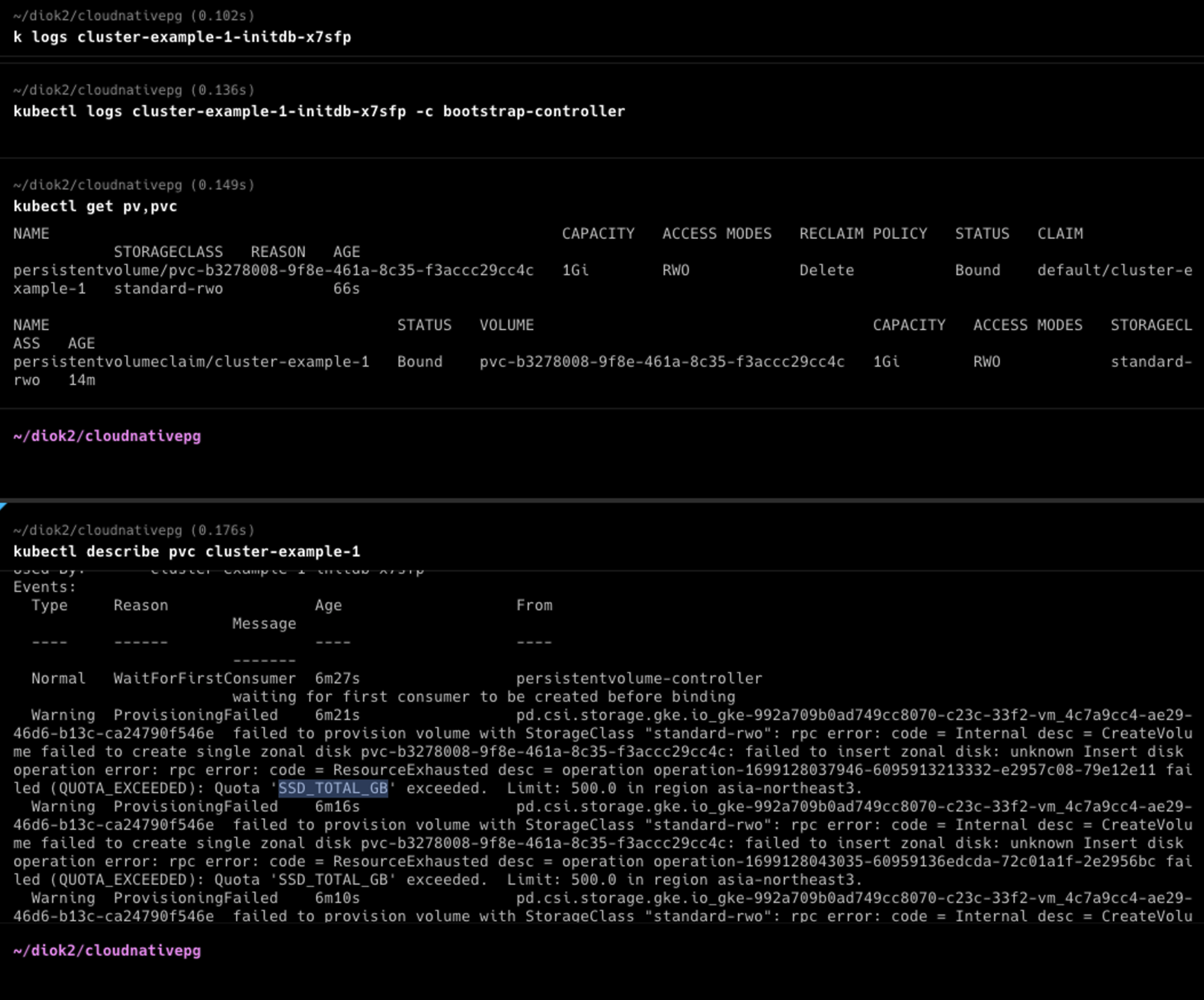

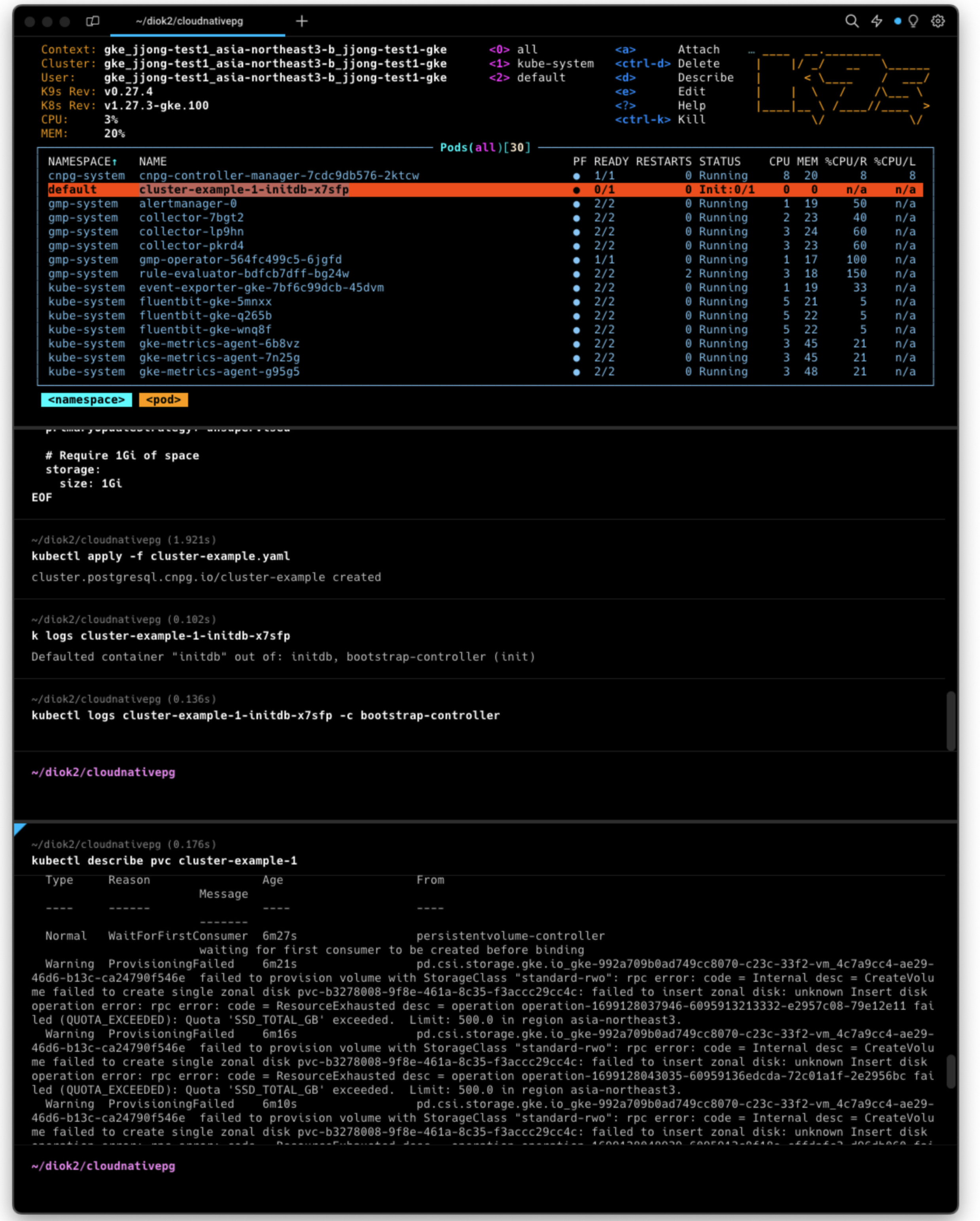

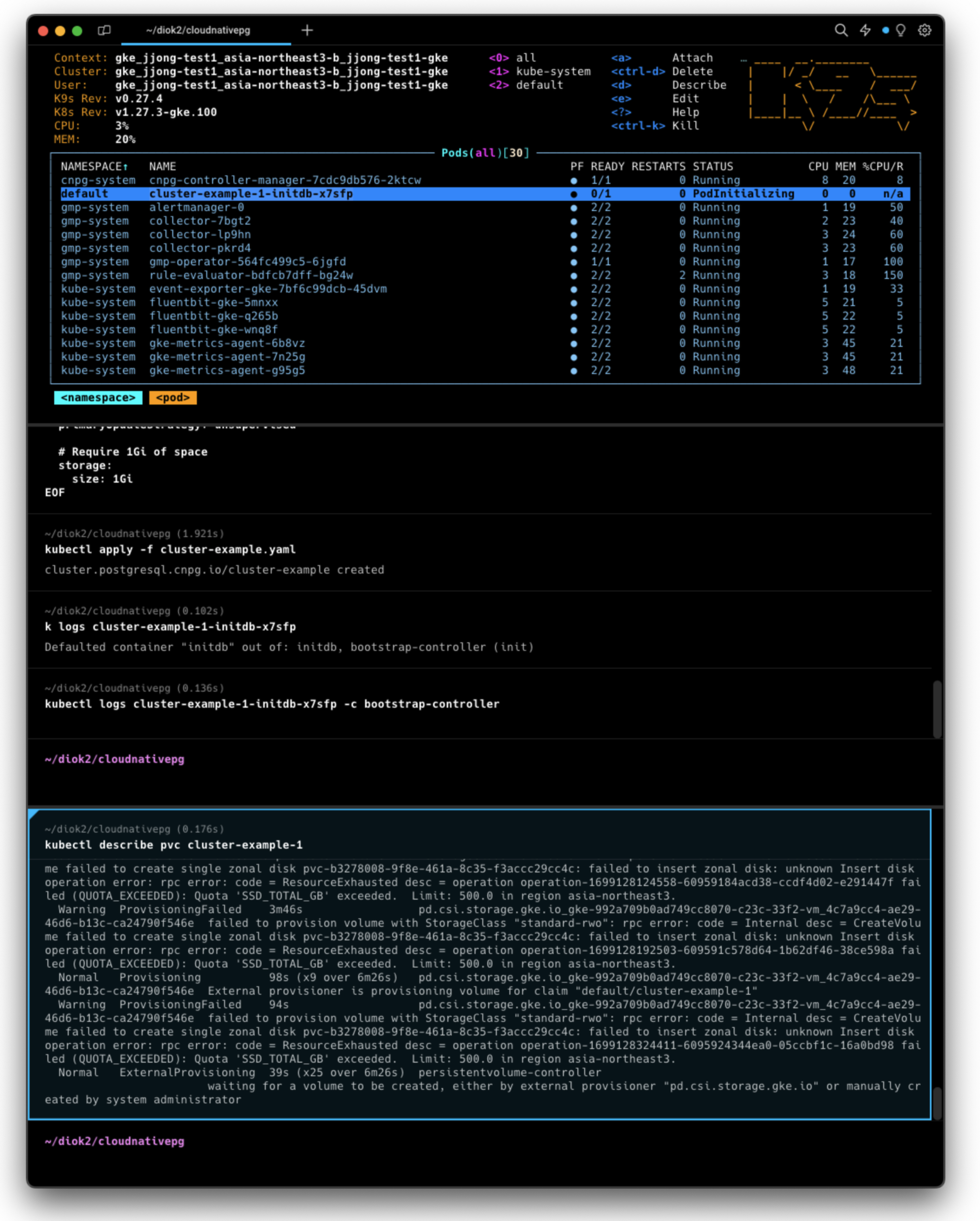

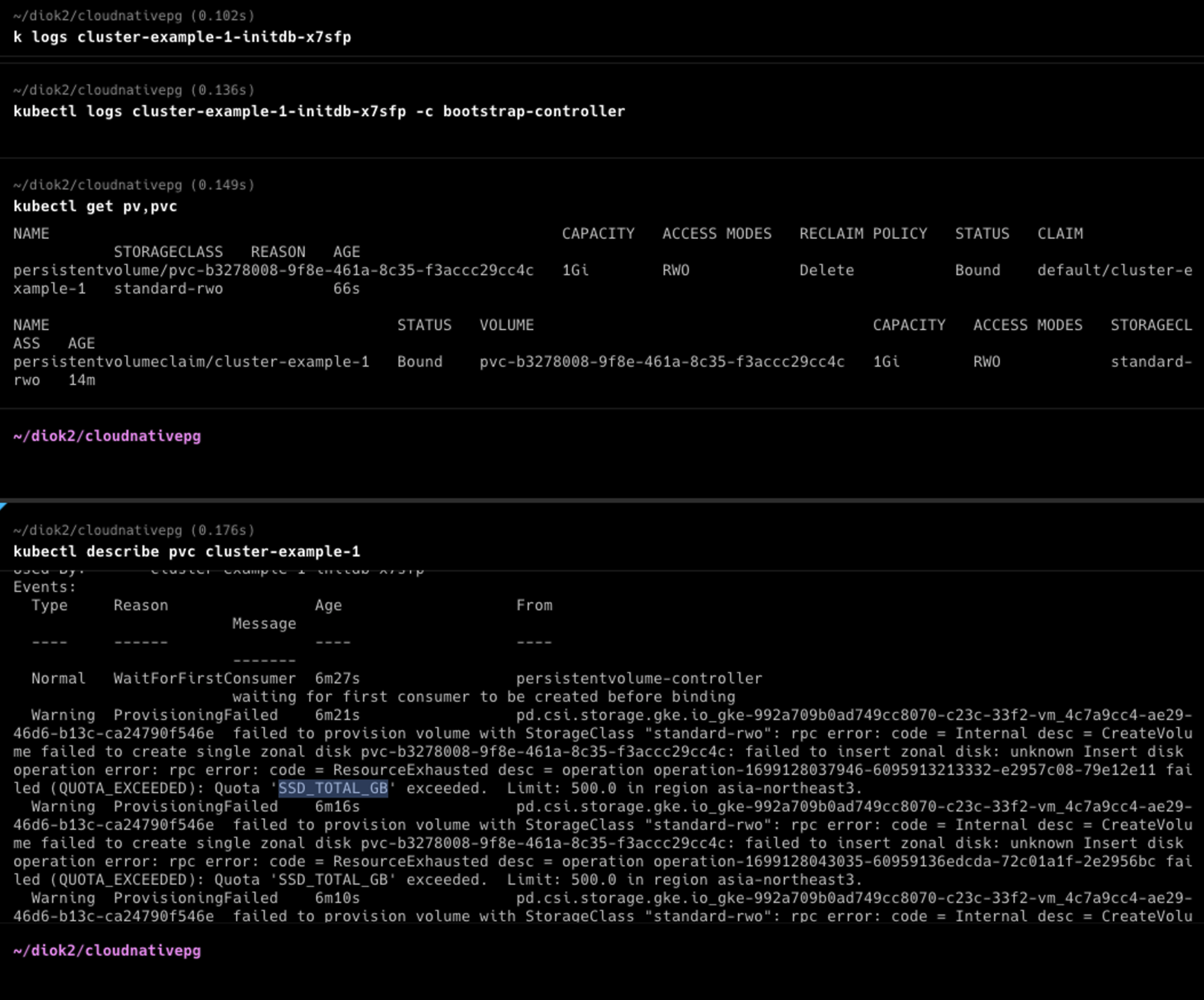

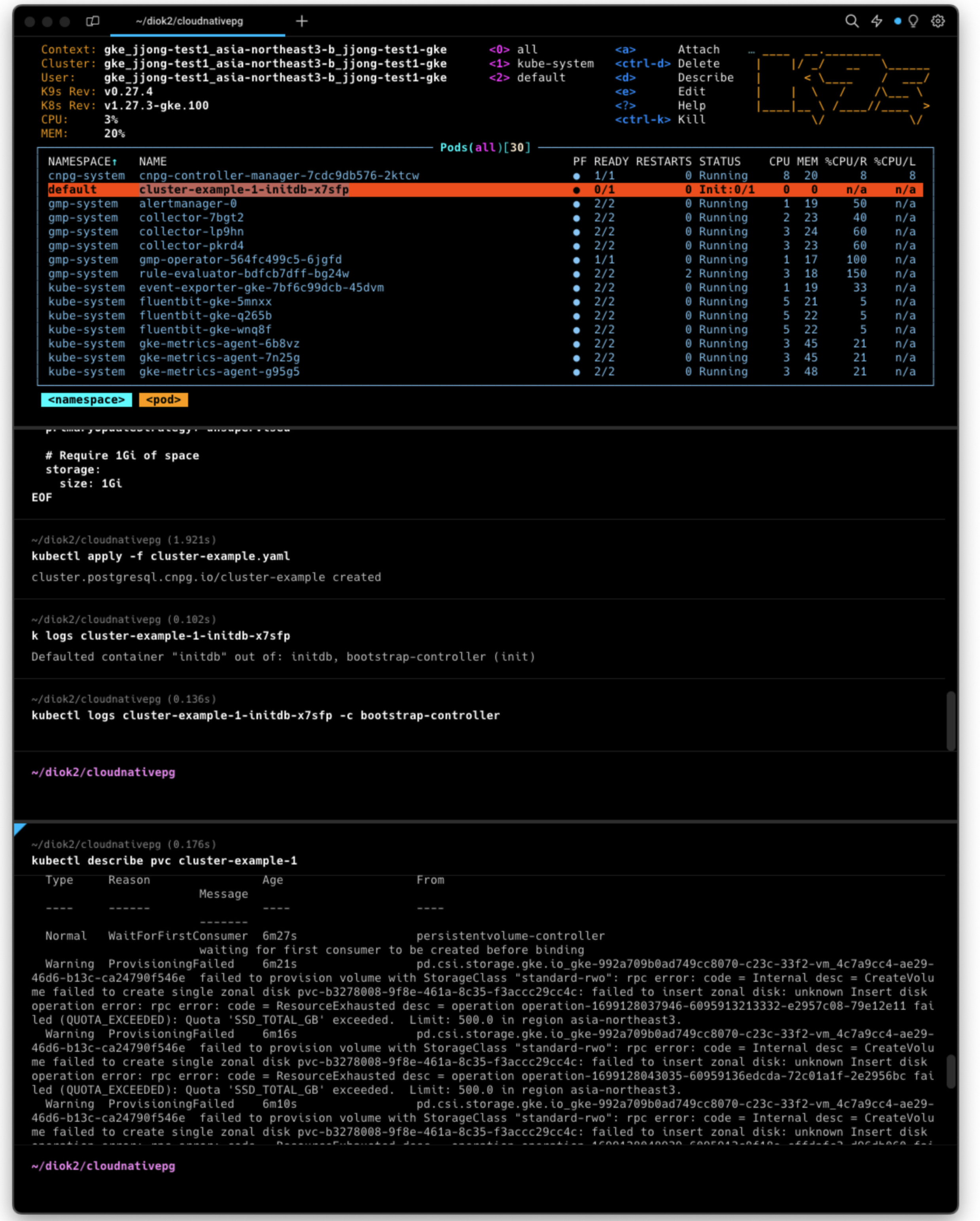

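GKE issue: the cluster is not created

What happened: deploying cluster-example.yaml should have created the cluster, but it was not created.

Symptoms

- The logs of the Pod being created (cluster-example-1-initdb-x7sfp) showed problems related to initdb and bootstrap-controller.

- The Pod's detailed status showed that it was Pending.

I struggled to find the cause until kubectl get pv,pvc showed that only the PVC existed, with no PV, which seemed suspicious. I then checked the event log, found the message below, and figured out the cause.

(Reference: https://jjongguet.tistory.com/183#PVC%2FPV%3A 사용자의 요청이 생기면 Pod랑 연결하는 방법-1)

The item to focus on is SSD_TOTAL_GB.

Roughly, the reason is as follows.

The allocation exceeded the quota limit for the region where GKE is deployed.

A similar issue came up in the node provisioning example (https://jjongguet.tistory.com/171#6-1. ERROR 발생-1).

The problem there was that the requested amount (600GB) exceeded the available amount (500GB), so I reduced the number of nodes in the node pool from 6 to 5 to bring the request down to 500GB.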

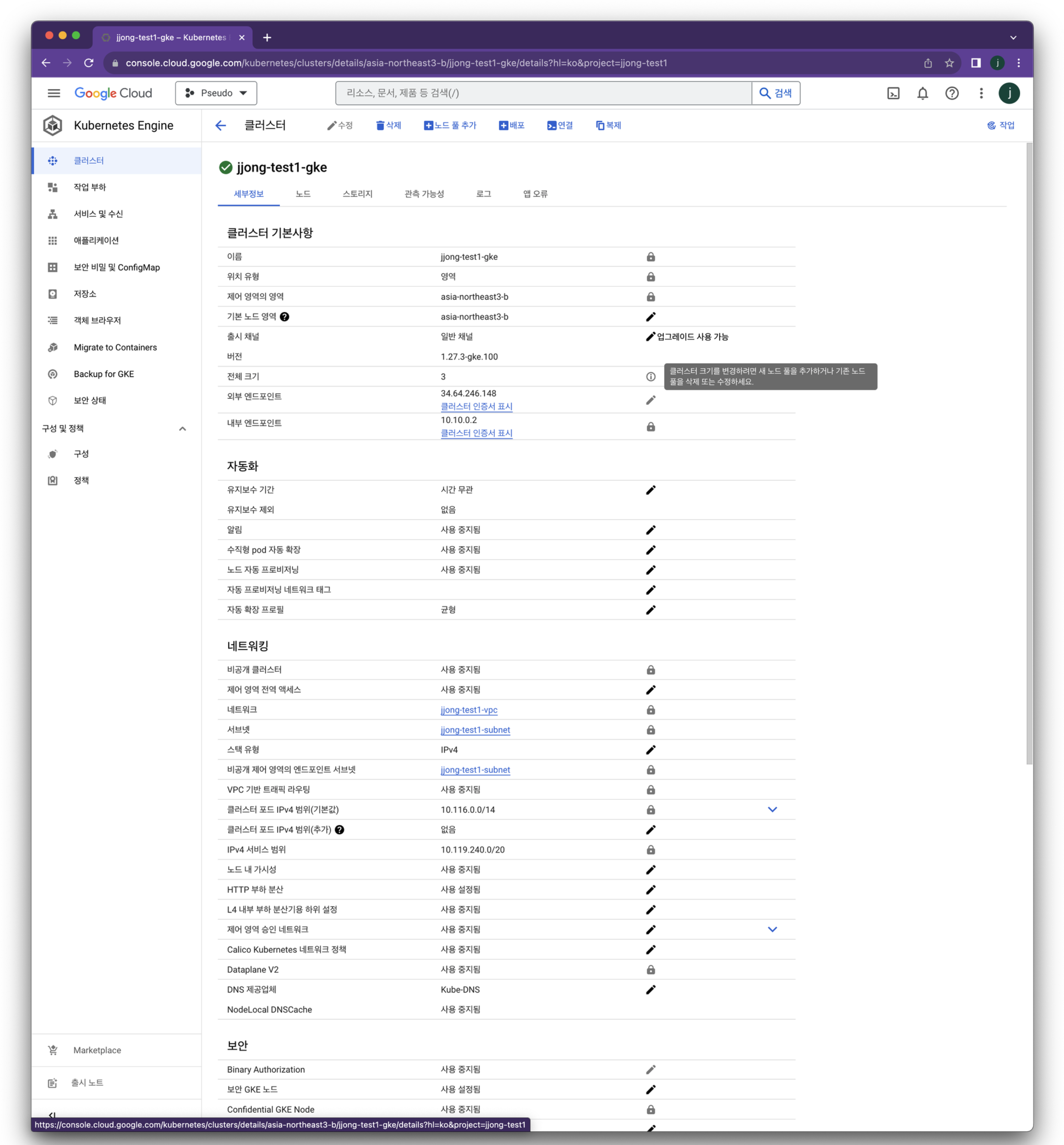

So for this problem as well, I decided to fix it by reducing the number of nodes in the node pool.
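The quota check is simple arithmetic. The sketch below reproduces the earlier case (6 nodes requesting 600GB against a 500GB quota, i.e. 100GB per node) and computes how many nodes fit. The 100GB-per-node figure is inferred from those numbers. The optional `extra_gb` for other disks, such as PVCs, is a hypothetical parameter. The actual figures for this cluster were in the screenshot and are not reproduced here.

```python
def max_nodes_within_quota(quota_gb: int, disk_per_node_gb: int, extra_gb: int = 0) -> int:
    """Largest node count whose disks, plus any extra volumes, fit in the quota."""
    available = quota_gb - extra_gb
    if available <= 0:
        return 0
    return available // disk_per_node_gb


# Earlier case: 6 nodes x 100GB = 600GB requested > 500GB quota
print(6 * 100 > 500)                                  # True
print(max_nodes_within_quota(500, 100))               # 5
# Hypothetical: reserving 3GB for extra disks leaves room for only 4 nodes
print(max_nodes_within_quota(500, 100, extra_gb=3))   # 4
```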

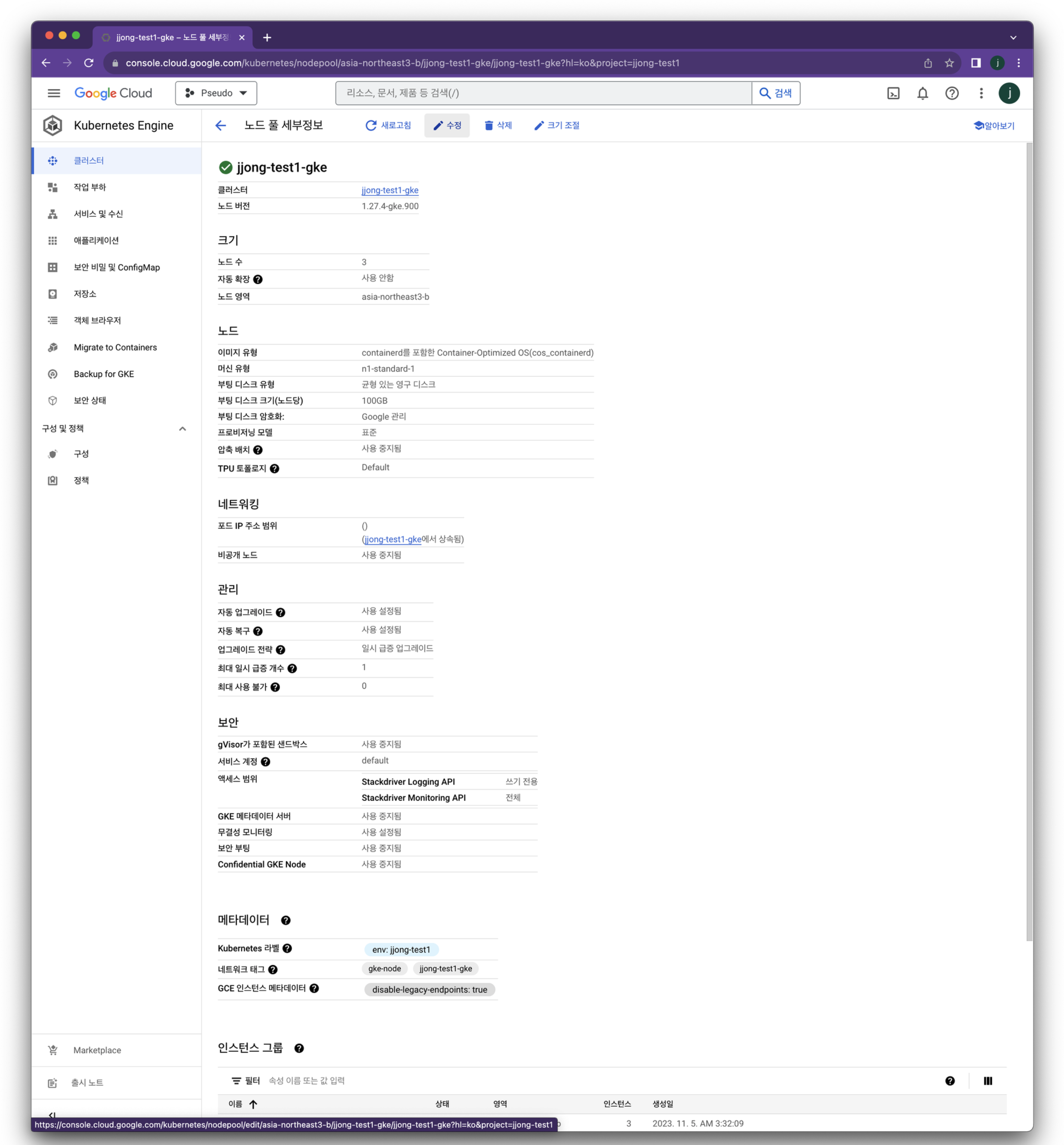

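GCP Console → Kubernetes Engine → Clusters → select the cluster

- GKE always keeps the number of nodes specified in the node pool, so to change the cluster's node count you have to change the node pool's node count.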

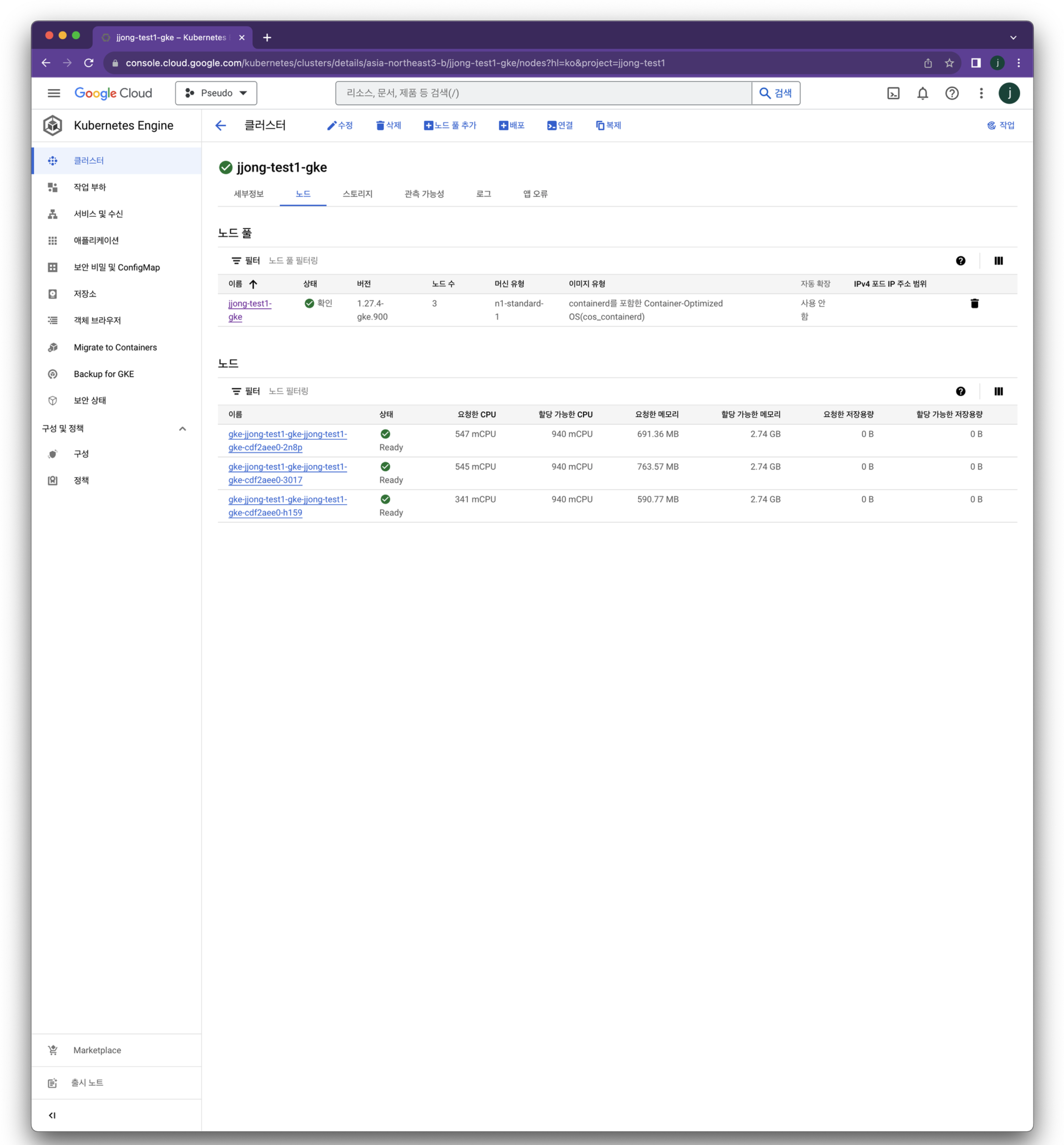

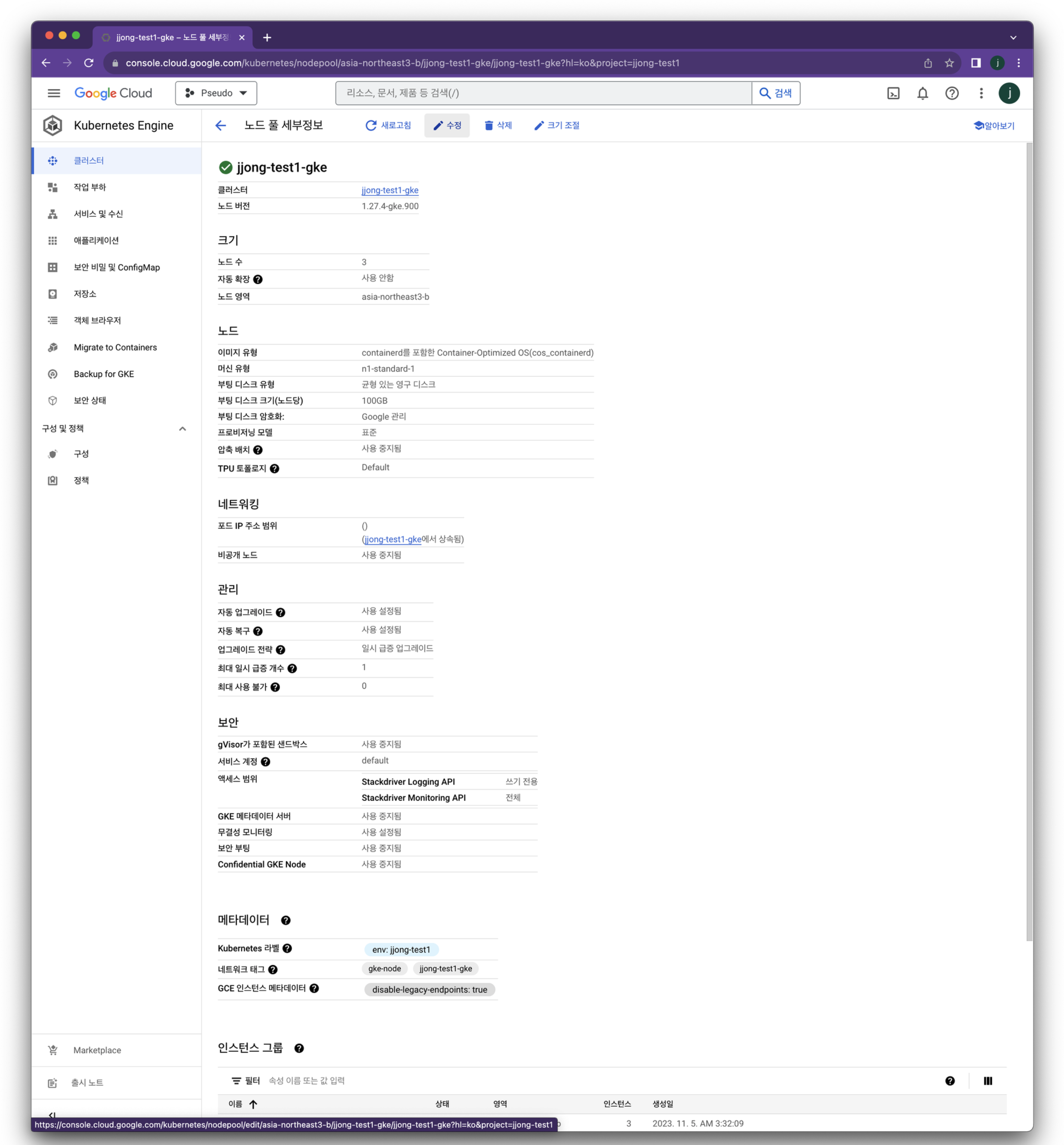

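From the tabs at the top, choose Nodes → the node pool.

Edit → (change the number of nodes from 5 to 3) → Save

The node pool size is now updated.

Because the node pool is tied to the Kubernetes node instances, it takes a little while for the new size to take effect.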

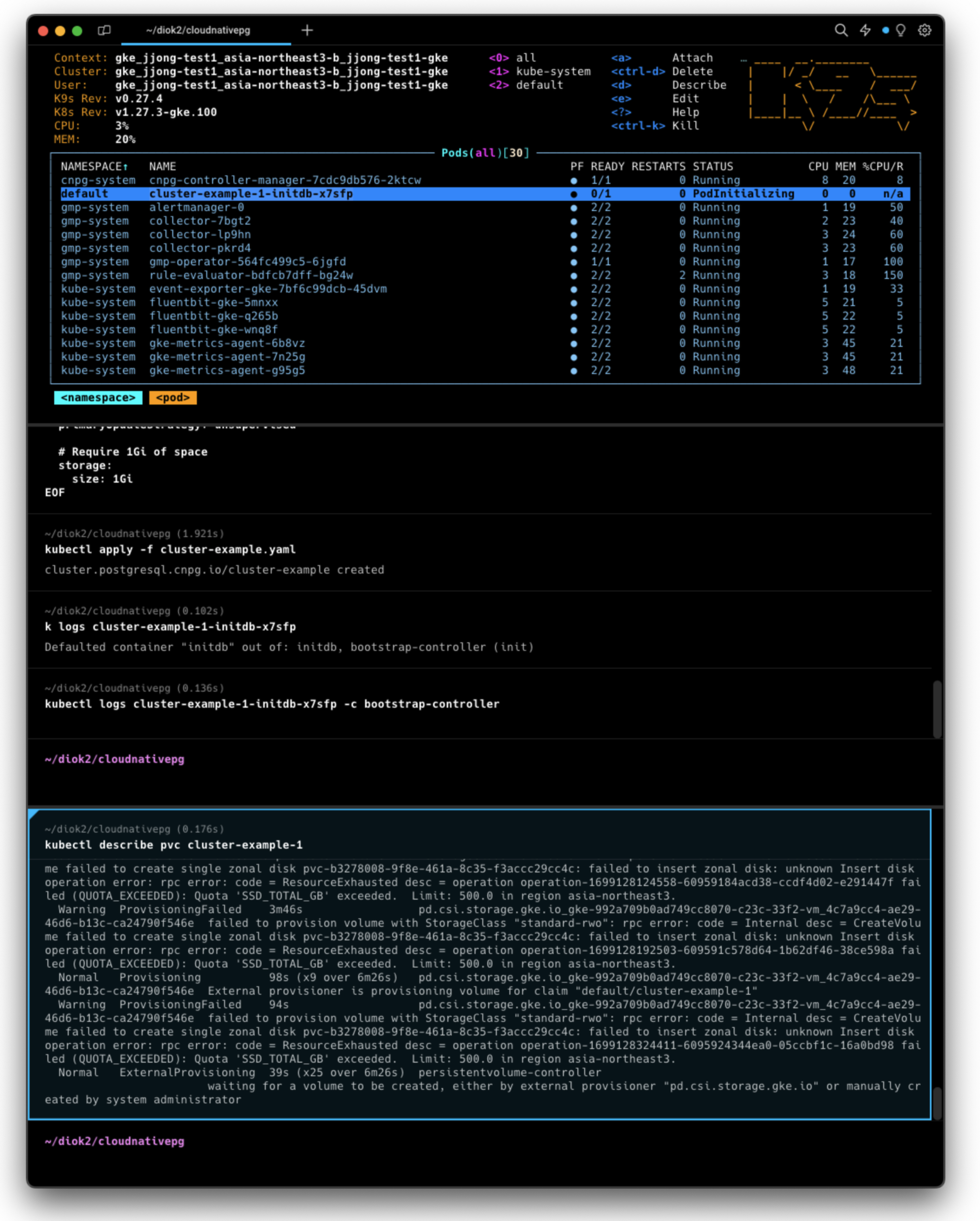

GKE issue: resolving the cluster creation problem

After the node pool was resized, the Pods started to initialize.

You can see the Pods in the Initializing state.

Commands to verify the deployment

```shell
# Verify the cluster deployment
kubectl get pod,pvc,svc,ep
NAME                    READY   STATUS    RESTARTS   AGE
pod/cluster-example-1   1/1     Running   0          16m
pod/cluster-example-2   1/1     Running   0          15m
pod/cluster-example-3   1/1     Running   0          14m

NAME                                      STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/cluster-example-1   Bound    pvc-b3278008-9f8e-461a-8c35-f3accc29cc4c   1Gi        RWO            standard-rwo   30m
persistentvolumeclaim/cluster-example-2   Bound    pvc-76170d1e-cd2f-4e86-8c32-de129e76db01   1Gi        RWO            standard-rwo   15m
persistentvolumeclaim/cluster-example-3   Bound    pvc-295c9118-de7a-499e-b3cb-944278a5a7fb   1Gi        RWO            standard-rwo   15m

NAME                         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
service/cluster-example-r    ClusterIP   10.119.247.82    <none>        5432/TCP   30m
service/cluster-example-ro   ClusterIP   10.119.241.203   <none>        5432/TCP   30m
service/cluster-example-rw   ClusterIP   10.119.253.195   <none>        5432/TCP   30m
service/kubernetes           ClusterIP   10.119.240.1     <none>        443/TCP    123m

NAME                           ENDPOINTS                                          AGE
endpoints/cluster-example-r    10.116.1.7:5432,10.116.4.15:5432,10.116.5.9:5432   30m
endpoints/cluster-example-ro   10.116.4.15:5432,10.116.5.9:5432                   30m
endpoints/cluster-example-rw   10.116.1.7:5432                                    30m
endpoints/kubernetes           10.10.0.2:443                                      123m

# Verify the deployment by Pod label
kubectl get pods -l cnpg.io/cluster=cluster-example
NAME                READY   STATUS    RESTARTS   AGE
cluster-example-1   1/1     Running   0          20m
cluster-example-2   1/1     Running   0          19m
cluster-example-3   1/1     Running   0          18m
```

Checking the logs

# Pod logs

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"setup","msg":"Starting CloudNativePG Instance Manager","logging_pod":"cluster-example-1","version":"1.21.0","build":{"Version":"1.21.0","Commit":"9bc5b9b2","Date":"2023-10-12"}}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"setup","msg":"starting controller-runtime manager","logging_pod":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Starting EventSource","controller":"cluster","controllerGroup":"postgresql.cnpg.io","controllerKind":"Cluster","source":"kind source: *v1.Cluster"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Starting Controller","controller":"cluster","controllerGroup":"postgresql.cnpg.io","controllerKind":"Cluster"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Starting webserver","logging_pod":"cluster-example-1","address":":9187"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"roles_reconciler","msg":"starting up the runnable","logging_pod":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"roles_reconciler","msg":"setting up RoleSynchronizer loop","logging_pod":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Starting webserver","logging_pod":"cluster-example-1","address":"localhost:8010"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Starting webserver","logging_pod":"cluster-example-1","address":":8000"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Starting workers","controller":"cluster","controllerGroup":"postgresql.cnpg.io","controllerKind":"Cluster","worker count":1}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Ignore minSyncReplicas to enforce self-healing","logging_pod":"cluster-example-1","syncReplicas":-1,"minSyncReplicas":0,"maxSyncReplicas":0}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Refreshed configuration file","controller":"cluster","controllerGroup":"postgresql.cnpg.io","controllerKind":"Cluster","Cluster":{"name":"cluster-example","namespace":"default"},"namespace":"default","name":"cluster-example","reconcileID":"7cae7773-4464-4be0-a87d-8f788fff0f8f","uuid":"dedbbc2f-7b4e-11ee-98a2-aefc4fc00cc5","logging_pod":"cluster-example-1","filename":"/controller/certificates/server.crt","secret":"cluster-example-server"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Refreshed configuration file","controller":"cluster","controllerGroup":"postgresql.cnpg.io","controllerKind":"Cluster","Cluster":{"name":"cluster-example","namespace":"default"},"namespace":"default","name":"cluster-example","reconcileID":"7cae7773-4464-4be0-a87d-8f788fff0f8f","uuid":"dedbbc2f-7b4e-11ee-98a2-aefc4fc00cc5","logging_pod":"cluster-example-1","filename":"/controller/certificates/server.key","secret":"cluster-example-server"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Refreshed configuration file","controller":"cluster","controllerGroup":"postgresql.cnpg.io","controllerKind":"Cluster","Cluster":{"name":"cluster-example","namespace":"default"},"namespace":"default","name":"cluster-example","reconcileID":"7cae7773-4464-4be0-a87d-8f788fff0f8f","uuid":"dedbbc2f-7b4e-11ee-98a2-aefc4fc00cc5","logging_pod":"cluster-example-1","filename":"/controller/certificates/streaming_replica.crt","secret":"cluster-example-replication"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Refreshed configuration file","controller":"cluster","controllerGroup":"postgresql.cnpg.io","controllerKind":"Cluster","Cluster":{"name":"cluster-example","namespace":"default"},"namespace":"default","name":"cluster-example","reconcileID":"7cae7773-4464-4be0-a87d-8f788fff0f8f","uuid":"dedbbc2f-7b4e-11ee-98a2-aefc4fc00cc5","logging_pod":"cluster-example-1","filename":"/controller/certificates/streaming_replica.key","secret":"cluster-example-replication"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Refreshed configuration file","controller":"cluster","controllerGroup":"postgresql.cnpg.io","controllerKind":"Cluster","Cluster":{"name":"cluster-example","namespace":"default"},"namespace":"default","name":"cluster-example","reconcileID":"7cae7773-4464-4be0-a87d-8f788fff0f8f","uuid":"dedbbc2f-7b4e-11ee-98a2-aefc4fc00cc5","logging_pod":"cluster-example-1","filename":"/controller/certificates/client-ca.crt","secret":"cluster-example-ca"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Refreshed configuration file","controller":"cluster","controllerGroup":"postgresql.cnpg.io","controllerKind":"Cluster","Cluster":{"name":"cluster-example","namespace":"default"},"namespace":"default","name":"cluster-example","reconcileID":"7cae7773-4464-4be0-a87d-8f788fff0f8f","uuid":"dedbbc2f-7b4e-11ee-98a2-aefc4fc00cc5","logging_pod":"cluster-example-1","filename":"/controller/certificates/server-ca.crt","secret":"cluster-example-ca"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Installed configuration file","logging_pod":"cluster-example-1","pgdata":"/var/lib/postgresql/data/pgdata","filename":"pg_hba.conf"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Ignore minSyncReplicas to enforce self-healing","logging_pod":"cluster-example-1","syncReplicas":-1,"minSyncReplicas":0,"maxSyncReplicas":0}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Installed configuration file","logging_pod":"cluster-example-1","pgdata":"/var/lib/postgresql/data/pgdata","filename":"custom.conf"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Cluster status","controller":"cluster","controllerGroup":"postgresql.cnpg.io","controllerKind":"Cluster","Cluster":{"name":"cluster-example","namespace":"default"},"namespace":"default","name":"cluster-example","reconcileID":"7cae7773-4464-4be0-a87d-8f788fff0f8f","uuid":"dedbbc2f-7b4e-11ee-98a2-aefc4fc00cc5","logging_pod":"cluster-example-1","currentPrimary":"","targetPrimary":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"First primary instance bootstrap, marking myself as primary","controller":"cluster","controllerGroup":"postgresql.cnpg.io","controllerKind":"Cluster","Cluster":{"name":"cluster-example","namespace":"default"},"namespace":"default","name":"cluster-example","reconcileID":"7cae7773-4464-4be0-a87d-8f788fff0f8f","uuid":"dedbbc2f-7b4e-11ee-98a2-aefc4fc00cc5","logging_pod":"cluster-example-1","currentPrimary":"","targetPrimary":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Extracting pg_controldata information","logging_pod":"cluster-example-1","reason":"postmaster start up"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"pg_controldata","msg":"pg_control version number: 1300\nCatalog version number: 202307071\nDatabase system identifier: 7297702981666938898\nDatabase cluster state: shut down\npg_control last modified: Sat 04 Nov 2023 08:14:44 PM UTC\nLatest checkpoint location: 0/2000028\nLatest checkpoint's REDO location: 0/2000028\nLatest checkpoint's REDO WAL file: 000000010000000000000002\nLatest checkpoint's TimeLineID: 1\nLatest checkpoint's PrevTimeLineID: 1\nLatest checkpoint's full_page_writes: on\nLatest checkpoint's NextXID: 0:732\nLatest checkpoint's NextOID: 16386\nLatest checkpoint's NextMultiXactId: 1\nLatest checkpoint's NextMultiOffset: 0\nLatest checkpoint's oldestXID: 722\nLatest checkpoint's oldestXID's DB: 1\nLatest checkpoint's oldestActiveXID: 0\nLatest checkpoint's oldestMultiXid: 1\nLatest checkpoint's oldestMulti's DB: 1\nLatest checkpoint's oldestCommitTsXid:0\nLatest checkpoint's newestCommitTsXid:0\nTime of latest checkpoint: Sat 04 Nov 2023 08:14:44 PM UTC\nFake LSN counter for unlogged rels: 0/3E8\nMinimum recovery ending location: 0/0\nMin recovery ending loc's timeline: 0\nBackup start location: 0/0\nBackup end location: 0/0\nEnd-of-backup record required: no\nwal_level setting: replica\nwal_log_hints setting: off\nmax_connections setting: 100\nmax_worker_processes setting: 32\nmax_wal_senders setting: 10\nmax_prepared_xacts setting: 0\nmax_locks_per_xact setting: 64\ntrack_commit_timestamp setting: off\nMaximum data alignment: 8\nDatabase block size: 8192\nBlocks per segment of large relation: 131072\nWAL block size: 8192\nBytes per WAL segment: 16777216\nMaximum length of identifiers: 64\nMaximum columns in an index: 32\nMaximum size of a TOAST chunk: 1996\nSize of a large-object chunk: 2048\nDate/time type storage: 64-bit integers\nFloat8 argument passing: by value\nData page checksum version: 0\nMock authentication nonce: 974d70f58f0b1e1286845e11b2f31b9a023e3dc9bad2d1863d7d41d90c6122ec\n","pipe":"stdout","logging_pod":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"DB not available, will retry","logging_pod":"cluster-example-1","err":"failed to connect to `host=/controller/run user=postgres database=postgres`: dial error (dial unix /controller/run/.s.PGSQL.5432: connect: no such file or directory)"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"postgres","msg":"2023-11-04 20:15:17.370 UTC [19] LOG: redirecting log output to logging collector process","pipe":"stderr","logging_pod":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"postgres","msg":"2023-11-04 20:15:17.370 UTC [19] HINT: Future log output will appear in directory \"/controller/log\".","pipe":"stderr","logging_pod":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"postgres","msg":"record","logging_pod":"cluster-example-1","record":{"log_time":"2023-11-04 20:15:17.371 UTC","process_id":"19","session_id":"6546a655.13","session_line_num":"1","session_start_time":"2023-11-04 20:15:17 UTC","transaction_id":"0","error_severity":"LOG","sql_state_code":"00000","message":"ending log output to stderr","hint":"Future log output will go to log destination \"csvlog\".","backend_type":"postmaster","query_id":"0"}}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"postgres","msg":"2023-11-04 20:15:17.371 UTC [19] LOG: ending log output to stderr","source":"/controller/log/postgres","logging_pod":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"postgres","msg":"2023-11-04 20:15:17.371 UTC [19] HINT: Future log output will go to log destination \"csvlog\".","source":"/controller/log/postgres","logging_pod":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"postgres","msg":"record","logging_pod":"cluster-example-1","record":{"log_time":"2023-11-04 20:15:17.371 UTC","process_id":"19","session_id":"6546a655.13","session_line_num":"2","session_start_time":"2023-11-04 20:15:17 UTC","transaction_id":"0","error_severity":"LOG","sql_state_code":"00000","message":"starting PostgreSQL 16.0 (Debian 16.0-1.pgdg110+1) on x86_64-pc-linux-gnu, compiled by gcc (Debian 10.2.1-6) 10.2.1 20210110, 64-bit","backend_type":"postmaster","query_id":"0"}}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"postgres","msg":"record","logging_pod":"cluster-example-1","record":{"log_time":"2023-11-04 20:15:17.371 UTC","process_id":"19","session_id":"6546a655.13","session_line_num":"3","session_start_time":"2023-11-04 20:15:17 UTC","transaction_id":"0","error_severity":"LOG","sql_state_code":"00000","message":"listening on IPv4 address \"0.0.0.0\", port 5432","backend_type":"postmaster","query_id":"0"}}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"postgres","msg":"record","logging_pod":"cluster-example-1","record":{"log_time":"2023-11-04 20:15:17.371 UTC","process_id":"19","session_id":"6546a655.13","session_line_num":"4","session_start_time":"2023-11-04 20:15:17 UTC","transaction_id":"0","error_severity":"LOG","sql_state_code":"00000","message":"listening on IPv6 address \"::\", port 5432","backend_type":"postmaster","query_id":"0"}}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"postgres","msg":"record","logging_pod":"cluster-example-1","record":{"log_time":"2023-11-04 20:15:17.377 UTC","process_id":"19","session_id":"6546a655.13","session_line_num":"5","session_start_time":"2023-11-04 20:15:17 UTC","transaction_id":"0","error_severity":"LOG","sql_state_code":"00000","message":"listening on Unix socket \"/controller/run/.s.PGSQL.5432\"","backend_type":"postmaster","query_id":"0"}}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"postgres","msg":"record","logging_pod":"cluster-example-1","record":{"log_time":"2023-11-04 20:15:17.392 UTC","process_id":"23","session_id":"6546a655.17","session_line_num":"1","session_start_time":"2023-11-04 20:15:17 UTC","transaction_id":"0","error_severity":"LOG","sql_state_code":"00000","message":"database system was shut down at 2023-11-04 20:14:44 UTC","backend_type":"startup","query_id":"0"}}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"postgres","msg":"record","logging_pod":"cluster-example-1","record":{"log_time":"2023-11-04 20:15:17.398 UTC","user_name":"postgres","database_name":"postgres","process_id":"24","connection_from":"[local]","session_id":"6546a655.18","session_line_num":"1","session_start_time":"2023-11-04 20:15:17 UTC","transaction_id":"0","error_severity":"FATAL","sql_state_code":"57P03","message":"the database system is starting up","backend_type":"client backend","query_id":"0"}}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"postgres","msg":"record","logging_pod":"cluster-example-1","record":{"log_time":"2023-11-04 20:15:17.402 UTC","user_name":"postgres","database_name":"postgres","process_id":"25","connection_from":"[local]","session_id":"6546a655.19","session_line_num":"1","session_start_time":"2023-11-04 20:15:17 UTC","transaction_id":"0","error_severity":"FATAL","sql_state_code":"57P03","message":"the database system is starting up","backend_type":"client backend","query_id":"0"}}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"DB not available, will retry","controller":"cluster","controllerGroup":"postgresql.cnpg.io","controllerKind":"Cluster","Cluster":{"name":"cluster-example","namespace":"default"},"namespace":"default","name":"cluster-example","reconcileID":"7cae7773-4464-4be0-a87d-8f788fff0f8f","uuid":"dedbbc2f-7b4e-11ee-98a2-aefc4fc00cc5","logging_pod":"cluster-example-1","err":"failed to connect to `host=/controller/run user=postgres database=postgres`: server error (FATAL: the database system is starting up (SQLSTATE 57P03))"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Ignore minSyncReplicas to enforce self-healing","logging_pod":"cluster-example-1","syncReplicas":-1,"minSyncReplicas":0,"maxSyncReplicas":0}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"postgres","msg":"record","logging_pod":"cluster-example-1","record":{"log_time":"2023-11-04 20:15:17.407 UTC","process_id":"19","session_id":"6546a655.13","session_line_num":"6","session_start_time":"2023-11-04 20:15:17 UTC","transaction_id":"0","error_severity":"LOG","sql_state_code":"00000","message":"database system is ready to accept connections","backend_type":"postmaster","query_id":"0"}}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","logger":"wal-archive","msg":"Backup not configured, skip WAL archiving","logging_pod":"cluster-example-1","walName":"pg_wal/000000010000000000000001","currentPrimary":"cluster-example-1","targetPrimary":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Ignore minSyncReplicas to enforce self-healing","logging_pod":"cluster-example-1","syncReplicas":-1,"minSyncReplicas":0,"maxSyncReplicas":0}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Ignore minSyncReplicas to enforce self-healing","logging_pod":"cluster-example-1","syncReplicas":-1,"minSyncReplicas":0,"maxSyncReplicas":0}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Ignore minSyncReplicas to enforce self-healing","logging_pod":"cluster-example-1","syncReplicas":-1,"minSyncReplicas":0,"maxSyncReplicas":0}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Ignore minSyncReplicas to enforce self-healing","logging_pod":"cluster-example-1","syncReplicas":-1,"minSyncReplicas":0,"maxSyncReplicas":0}

postgres {"level":"info","ts":"2023-11-04T20:15:17Z","msg":"Ignore minSyncReplicas to enforce self-healing","logging_pod":"cluster-example-1","syncReplicas":-1,"minSyncReplicas":0,"maxSyncReplicas":0}

postgres {"level":"info","ts":"2023-11-04T20:15:18Z","msg":"Ignore minSyncReplicas to enforce self-healing","logging_pod":"cluster-example-1","syncReplicas":-1,"minSyncReplicas":0,"maxSyncReplicas":0}

postgres {"level":"info","ts":"2023-11-04T20:15:18Z","msg":"Ignore minSyncReplicas to enforce self-healing","logging_pod":"cluster-example-1","syncReplicas":-1,"minSyncReplicas":0,"maxSyncReplicas":0}

postgres {"level":"info","ts":"2023-11-04T20:15:18Z","msg":"Ignore minSyncReplicas to enforce self-healing","logging_pod":"cluster-example-1","syncReplicas":-1,"minSyncReplicas":0,"maxSyncReplicas":0}

postgres {"level":"info","ts":"2023-11-04T20:15:18Z","msg":"Ignore minSyncReplicas to enforce self-healing","logging_pod":"cluster-example-1","syncReplicas":-1,"minSyncReplicas":0,"maxSyncReplicas":0}

postgres {"level":"info","ts":"2023-11-04T20:15:18Z","msg":"Readiness probe failing","logging_pod":"cluster-example-1","err":"instance is not ready yet"}

postgres {"level":"info","ts":"2023-11-04T20:15:19Z","msg":"Readiness probe failing","logging_pod":"cluster-example-1","err":"instance is not ready yet"}

postgres {"level":"info","ts":"2023-11-04T20:15:42Z","logger":"postgres","msg":"record","logging_pod":"cluster-example-1","record":{"log_time":"2023-11-04 20:15:42.129 UTC","process_id":"21","session_id":"6546a655.15","session_line_num":"1","session_start_time":"2023-11-04 20:15:17 UTC","transaction_id":"0","error_severity":"LOG","sql_state_code":"00000","message":"checkpoint starting: force wait","backend_type":"checkpointer","query_id":"0"}}

postgres {"level":"info","ts":"2023-11-04T20:15:42Z","logger":"wal-archive","msg":"Backup not configured, skip WAL archiving","logging_pod":"cluster-example-1","walName":"pg_wal/000000010000000000000002","currentPrimary":"cluster-example-1","targetPrimary":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:15:44Z","logger":"postgres","msg":"record","logging_pod":"cluster-example-1","record":{"log_time":"2023-11-04 20:15:44.008 UTC","process_id":"21","session_id":"6546a655.15","session_line_num":"2","session_start_time":"2023-11-04 20:15:17 UTC","transaction_id":"0","error_severity":"LOG","sql_state_code":"00000","message":"checkpoint complete: wrote 20 buffers (0.1%); 0 WAL file(s) added, 0 removed, 0 recycled; write=1.711 s, sync=0.005 s, total=1.880 s; sync files=15, longest=0.003 s, average=0.001 s; distance=16384 kB, estimate=16384 kB; lsn=0/3000060, redo lsn=0/3000028","backend_type":"checkpointer","query_id":"0"}}

postgres {"level":"info","ts":"2023-11-04T20:15:44Z","logger":"wal-archive","msg":"Backup not configured, skip WAL archiving","logging_pod":"cluster-example-1","walName":"pg_wal/000000010000000000000003","currentPrimary":"cluster-example-1","targetPrimary":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:15:44Z","logger":"wal-archive","msg":"Backup not configured, skip WAL archiving","logging_pod":"cluster-example-1","walName":"pg_wal/000000010000000000000003.00000028.backup","currentPrimary":"cluster-example-1","targetPrimary":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:16:39Z","logger":"wal-archive","msg":"Backup not configured, skip WAL archiving","logging_pod":"cluster-example-1","walName":"pg_wal/000000010000000000000004","currentPrimary":"cluster-example-1","targetPrimary":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:16:39Z","logger":"postgres","msg":"record","logging_pod":"cluster-example-1","record":{"log_time":"2023-11-04 20:16:39.375 UTC","process_id":"21","session_id":"6546a655.15","session_line_num":"3","session_start_time":"2023-11-04 20:15:17 UTC","transaction_id":"0","error_severity":"LOG","sql_state_code":"00000","message":"checkpoint starting: force wait","backend_type":"checkpointer","query_id":"0"}}

postgres {"level":"info","ts":"2023-11-04T20:16:43Z","logger":"postgres","msg":"record","logging_pod":"cluster-example-1","record":{"log_time":"2023-11-04 20:16:43.306 UTC","process_id":"21","session_id":"6546a655.15","session_line_num":"4","session_start_time":"2023-11-04 20:15:17 UTC","transaction_id":"0","error_severity":"LOG","sql_state_code":"00000","message":"checkpoint complete: wrote 40 buffers (0.2%); 0 WAL file(s) added, 0 removed, 0 recycled; write=3.915 s, sync=0.004 s, total=3.931 s; sync files=10, longest=0.003 s, average=0.001 s; distance=32768 kB, estimate=32768 kB; lsn=0/5000098, redo lsn=0/5000060","backend_type":"checkpointer","query_id":"0"}}

postgres {"level":"info","ts":"2023-11-04T20:16:43Z","logger":"wal-archive","msg":"Backup not configured, skip WAL archiving","logging_pod":"cluster-example-1","walName":"pg_wal/000000010000000000000005","currentPrimary":"cluster-example-1","targetPrimary":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:16:43Z","logger":"wal-archive","msg":"Backup not configured, skip WAL archiving","logging_pod":"cluster-example-1","walName":"pg_wal/000000010000000000000005.00000060.backup","currentPrimary":"cluster-example-1","targetPrimary":"cluster-example-1"}

postgres {"level":"info","ts":"2023-11-04T20:21:39Z","logger":"postgres","msg":"record","logging_pod":"cluster-example-1","record":{"log_time":"2023-11-04 20:21:39.486 UTC","process_id":"21","session_id":"6546a655.15","session_line_num":"5","session_start_time":"2023-11-04 20:15:17 UTC","transaction_id":"0","error_severity":"LOG","sql_state_code":"00000","message":"checkpoint starting: time","backend_type":"checkpointer","query_id":"0"}}

postgres {"level":"info","ts":"2023-11-04T20:21:42Z","logger":"postgres","msg":"record","logging_pod":"cluster-example-1","record":{"log_time":"2023-11-04 20:21:42.412 UTC","process_id":"21","session_id":"6546a655.15","session_line_num":"6","session_start_time":"2023-11-04 20:15:17 UTC","transaction_id":"0","error_severity":"LOG","sql_state_code":"00000","message":"checkpoint complete: wrote 29 buffers (0.2%); 0 WAL file(s) added, 0 removed, 0 recycled; write=2.909 s, sync=0.002 s, total=2.927 s; sync files=5, longest=0.002 s, average=0.001 s; distance=16581 kB, estimate=31149 kB; lsn=0/60316B8, redo lsn=0/6031680","backend_type":"checkpointer","query_id":"0"}}

postgres {"level":"info","ts":"2023-11-04T20:21:43Z","logger":"wal-archive","msg":"Backup not configured, skip WAL archiving","logging_pod":"cluster-example-1","walName":"pg_wal/000000010000000000000006","currentPrimary":"cluster-example-1","targetPrimary":"cluster-example-1"}

bootstrap-controller {"level":"info","ts":"2023-11-04T20:14:58Z","msg":"Installing the manager executable","destination":"/controller/manager","version":"1.21.0","build":{"Version":"1.21.0","Commit":"9bc5b9b2","Date":"2023-10-12"}}

bootstrap-controller {"level":"info","ts":"2023-11-04T20:14:58Z","msg":"Setting 0750 permissions"}

bootstrap-controller {"level":"info","ts":"2023-11-04T20:14:58Z","msg":"Bootstrap completed"}

Stream closed EOF for default/cluster-example-1 (bootstrap-controller)
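The WAL file names in these logs follow directly from LSNs. A WAL segment name is 24 hex digits: the timeline ID, then the high and low parts of the segment number, 8 digits each. The `pg_controldata` output above shows 16MB segments (`Bytes per WAL segment: 16777216`). With that size, the checkpoint's `redo lsn=0/3000028` falls in segment `000000010000000000000003` at offset `0x28`, which matches the `000000010000000000000003.00000028.backup` file in the logs. Below is a minimal Python sketch of that naming scheme. The helper names are hypothetical.

```python
WAL_SEGMENT_SIZE = 16 * 1024 * 1024  # "Bytes per WAL segment: 16777216" in the log


def parse_lsn(lsn: str) -> int:
    # "X/Y" in hex -> 64-bit WAL position
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def wal_file_name(timeline: int, lsn: str, segment_size: int = WAL_SEGMENT_SIZE) -> str:
    """Name of the WAL segment file that contains the given LSN."""
    segment_no = parse_lsn(lsn) // segment_size
    segments_per_id = 0x1_0000_0000 // segment_size  # 256 for 16MB segments
    return "%08X%08X%08X" % (timeline, segment_no // segments_per_id, segment_no % segments_per_id)


def offset_in_segment(lsn: str, segment_size: int = WAL_SEGMENT_SIZE) -> str:
    # Byte offset inside the segment, formatted like the ".backup" file suffix
    return "%08X" % (parse_lsn(lsn) % segment_size)


print(wal_file_name(1, "0/3000028"))   # 000000010000000000000003
print(offset_in_segment("0/3000028"))  # 00000028
print(wal_file_name(1, "0/5000060"))   # 000000010000000000000005
```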

Note: when Pods don't show up in a label search

The official docs (https://github.com/cloudnative-pg/cloudnative-pg/blob/main/docs/src/quickstart.md#part-3-deploy-a-postgresql-cluster) say to check the Pods with the command below.

```shell
kubectl get pods -l cnpg.io/cluster=<CLUSTER>
```

Be careful when searching by label: the current version uses the label cnpg.io/cluster, whereas earlier versions used postgresql.
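When a script has to handle both old and new clusters, one option is to try the current label first and fall back to the older one. Below is a minimal Python sketch over Pod metadata you already have, for example items parsed from `kubectl get pods -o json`. The function name and the sample Pods are hypothetical.

```python
CURRENT_LABEL = "cnpg.io/cluster"
LEGACY_LABEL = "postgresql"  # label used by earlier versions


def pods_for_cluster(pods: list[dict], cluster: str) -> list[str]:
    """Return the names of Pods belonging to `cluster`, preferring the current label."""
    for label in (CURRENT_LABEL, LEGACY_LABEL):
        matched = [
            pod["metadata"]["name"]
            for pod in pods
            if pod["metadata"].get("labels", {}).get(label) == cluster
        ]
        if matched:
            return matched
    return []


# Hypothetical Pod metadata, shaped like items from `kubectl get pods -o json`
pods = [
    {"metadata": {"name": "cluster-example-1", "labels": {"cnpg.io/cluster": "cluster-example"}}},
    {"metadata": {"name": "old-cluster-1", "labels": {"postgresql": "old-cluster"}}},
]
print(pods_for_cluster(pods, "cluster-example"))  # ['cluster-example-1']
print(pods_for_cluster(pods, "old-cluster"))      # ['old-cluster-1']
```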

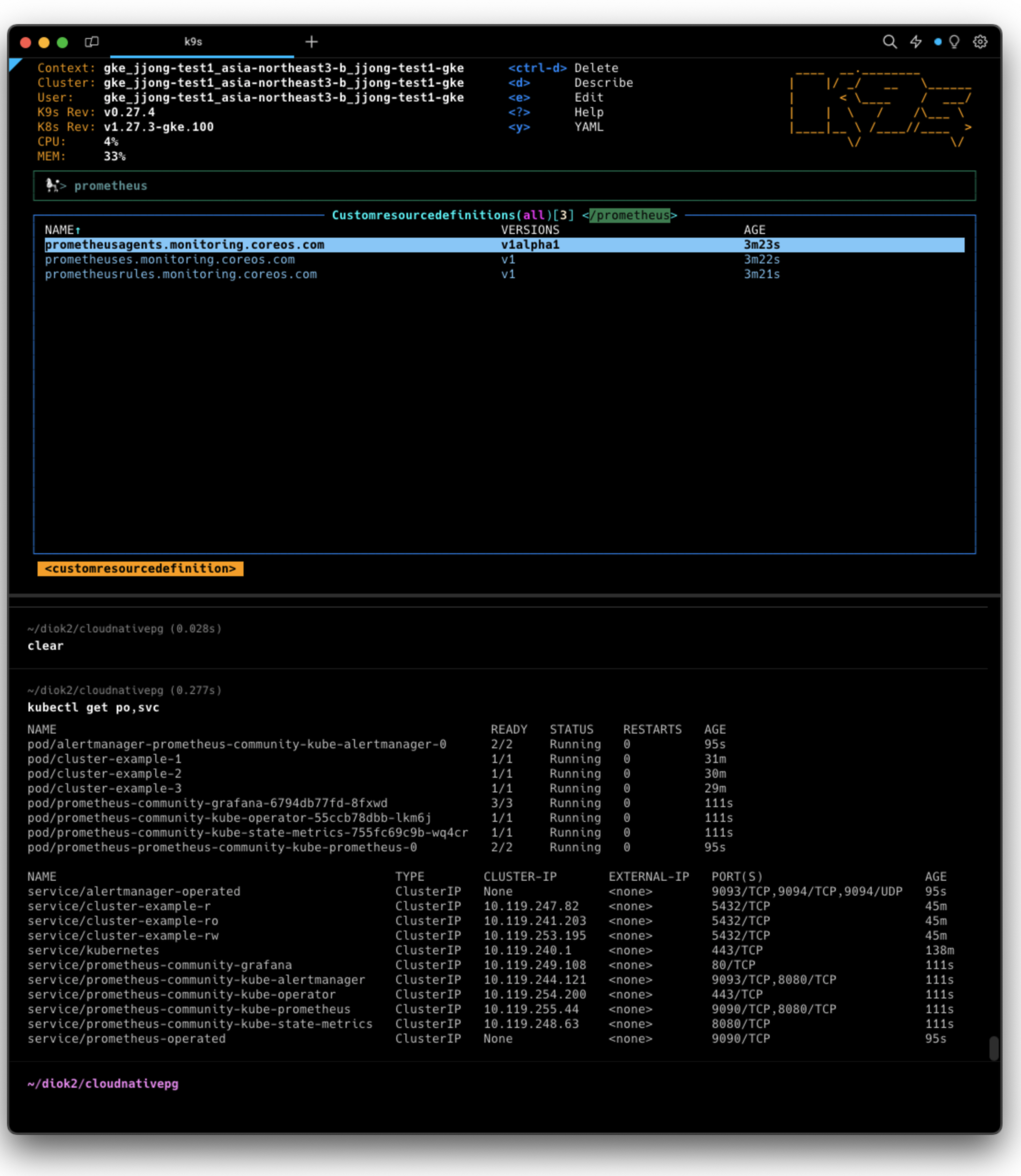

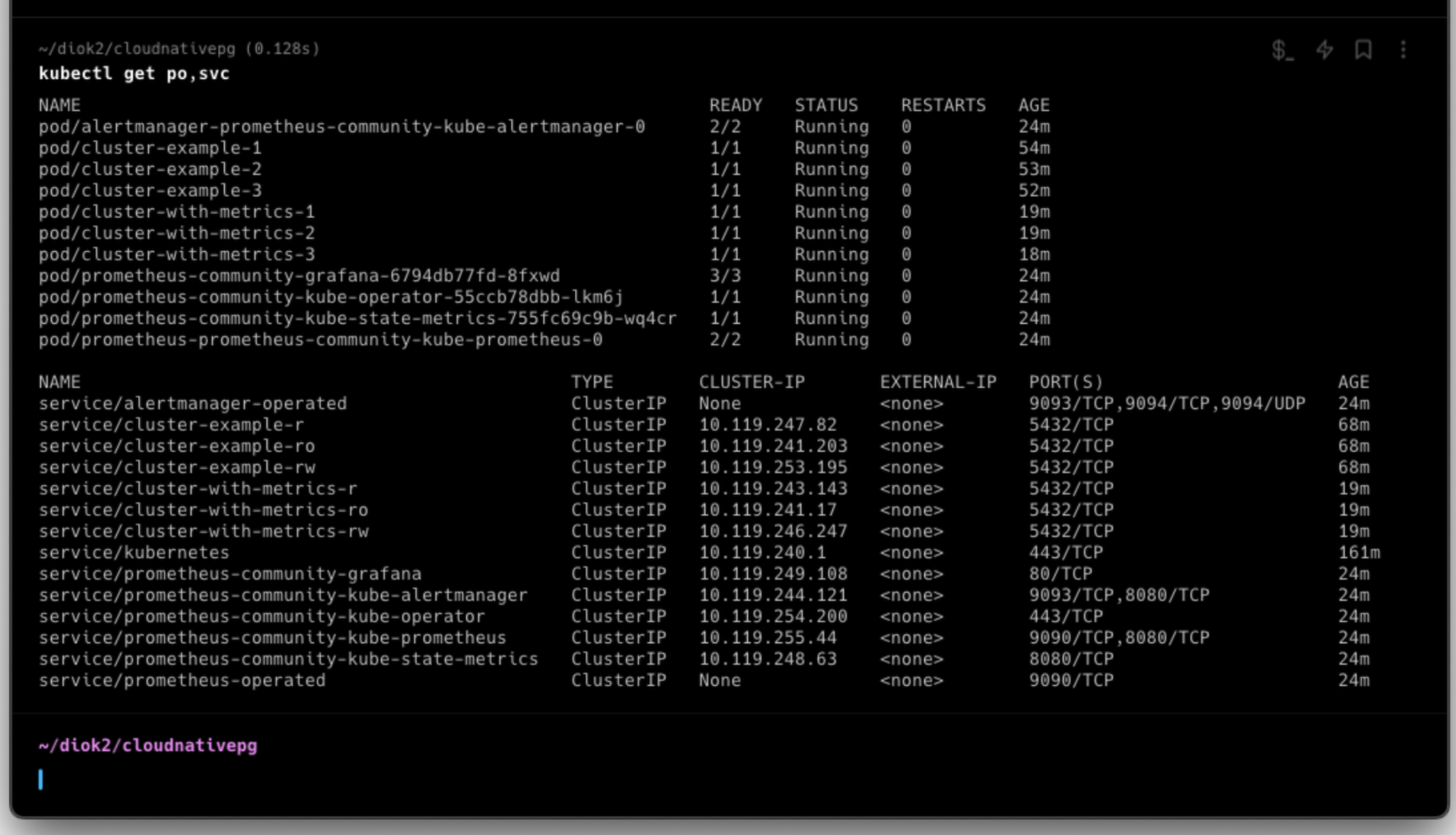

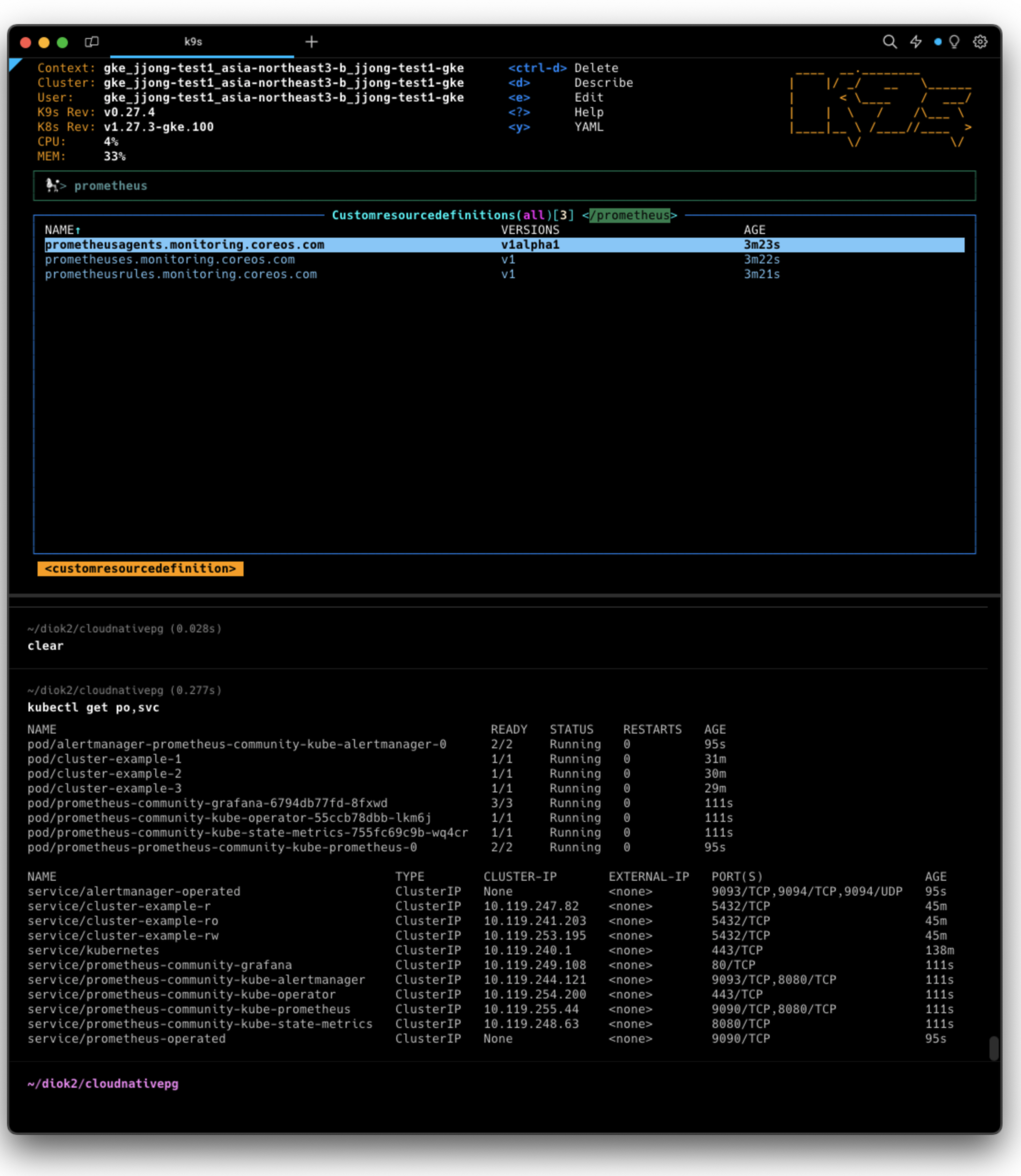

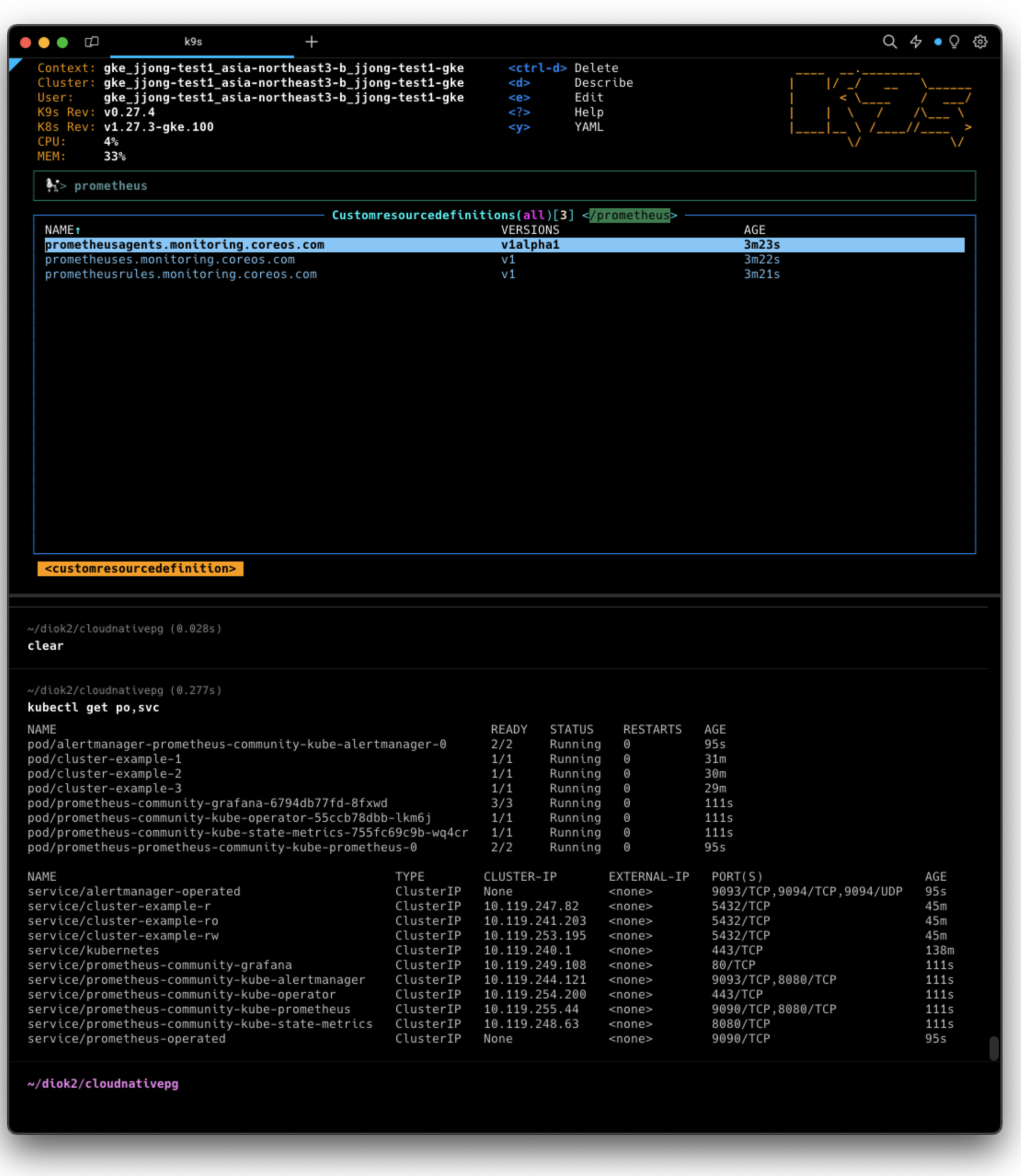

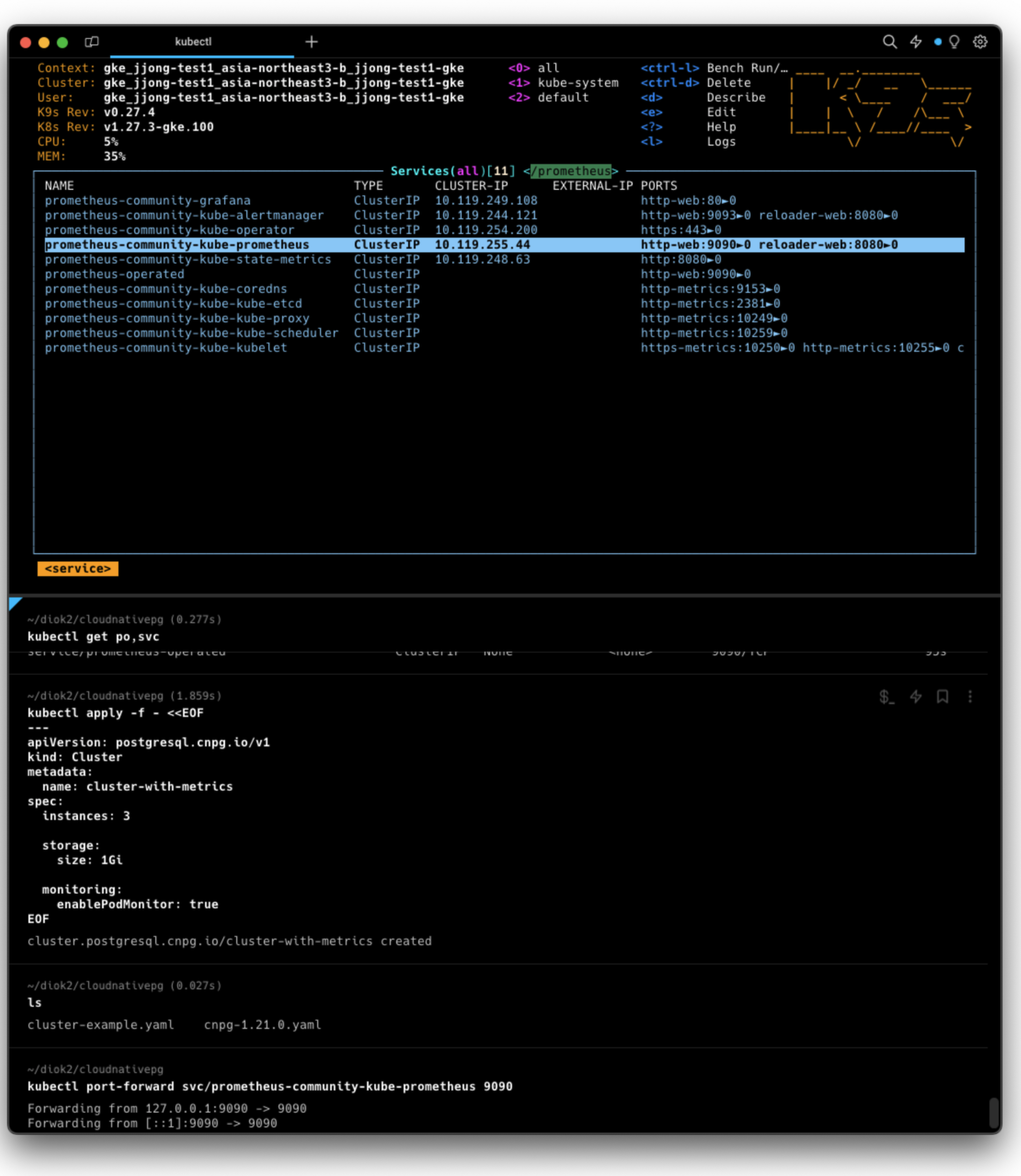

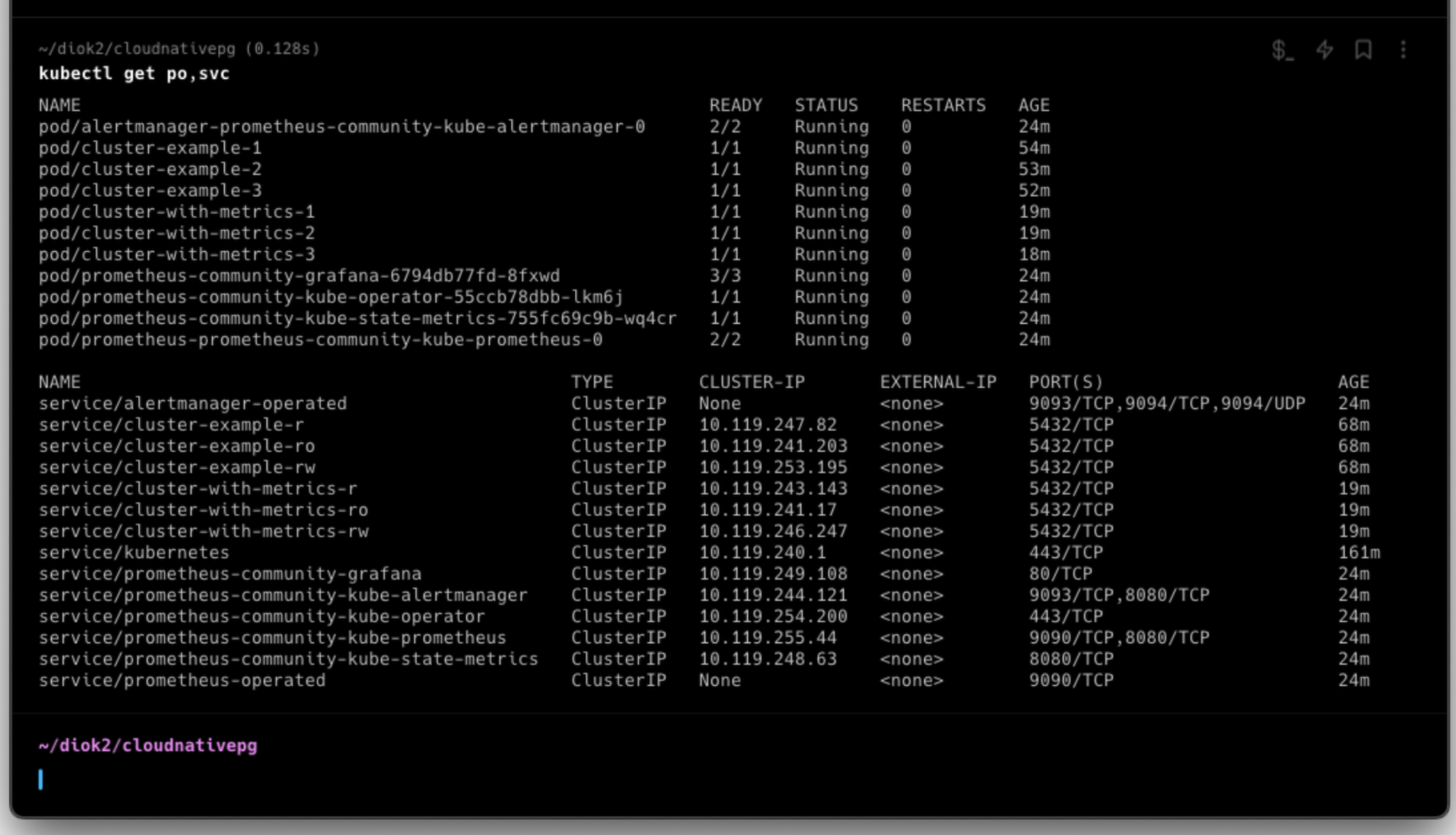

Connecting Prometheus

Install Prometheus and Grafana, which are well suited to monitoring.

```shell
helm repo add prometheus-community \
  https://prometheus-community.github.io/helm-charts

helm upgrade --install \
  -f https://raw.githubusercontent.com/cloudnative-pg/cloudnative-pg/main/docs/src/samples/monitoring/kube-stack-config.yaml \
  prometheus-community \
  prometheus-community/kube-prometheus-stack
```

Checking the current objects

- The Grafana and Prometheus components are installed.

- The Services are still exposed only as ClusterIP, so nothing is reachable from outside Kubernetes yet.

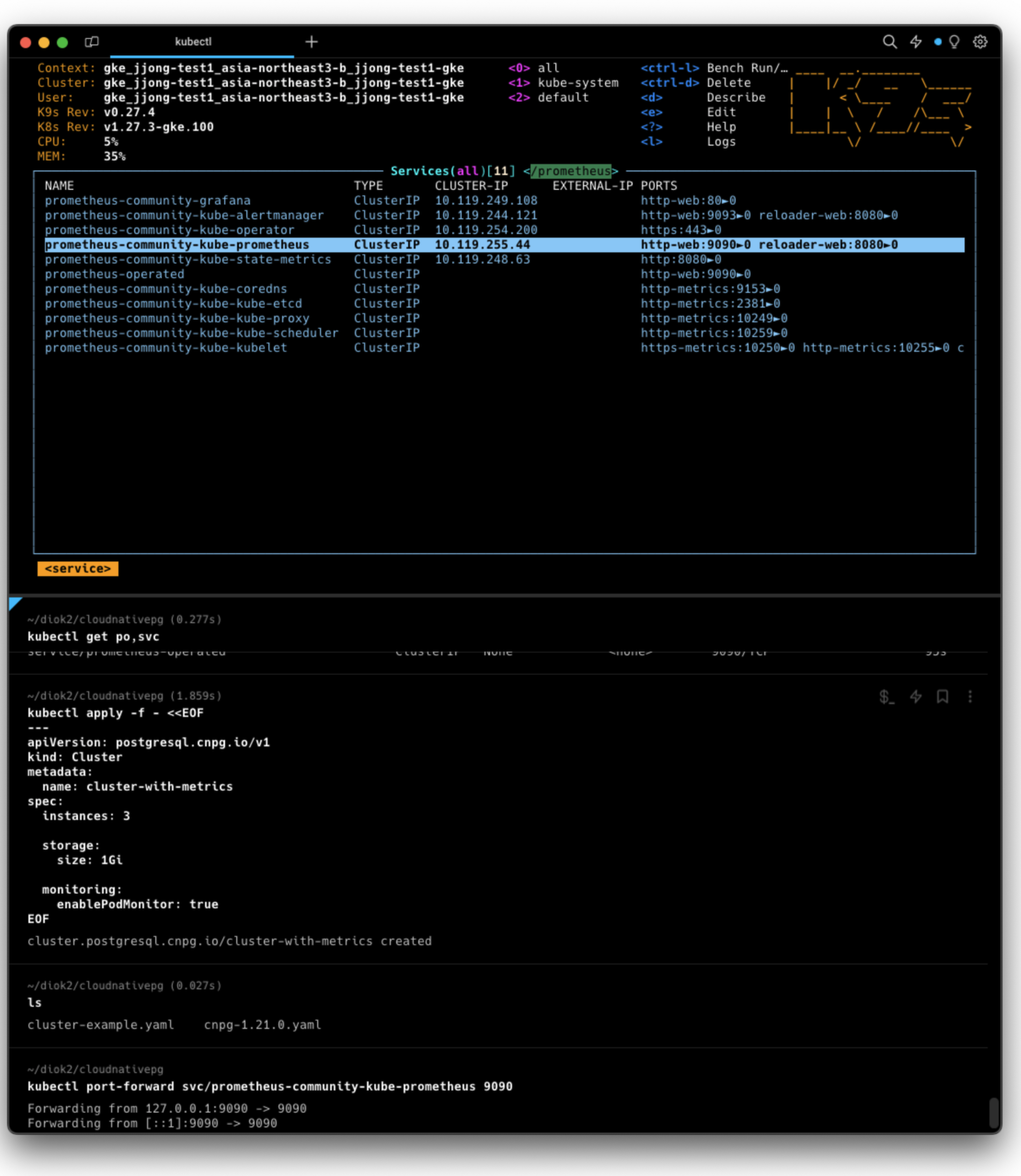

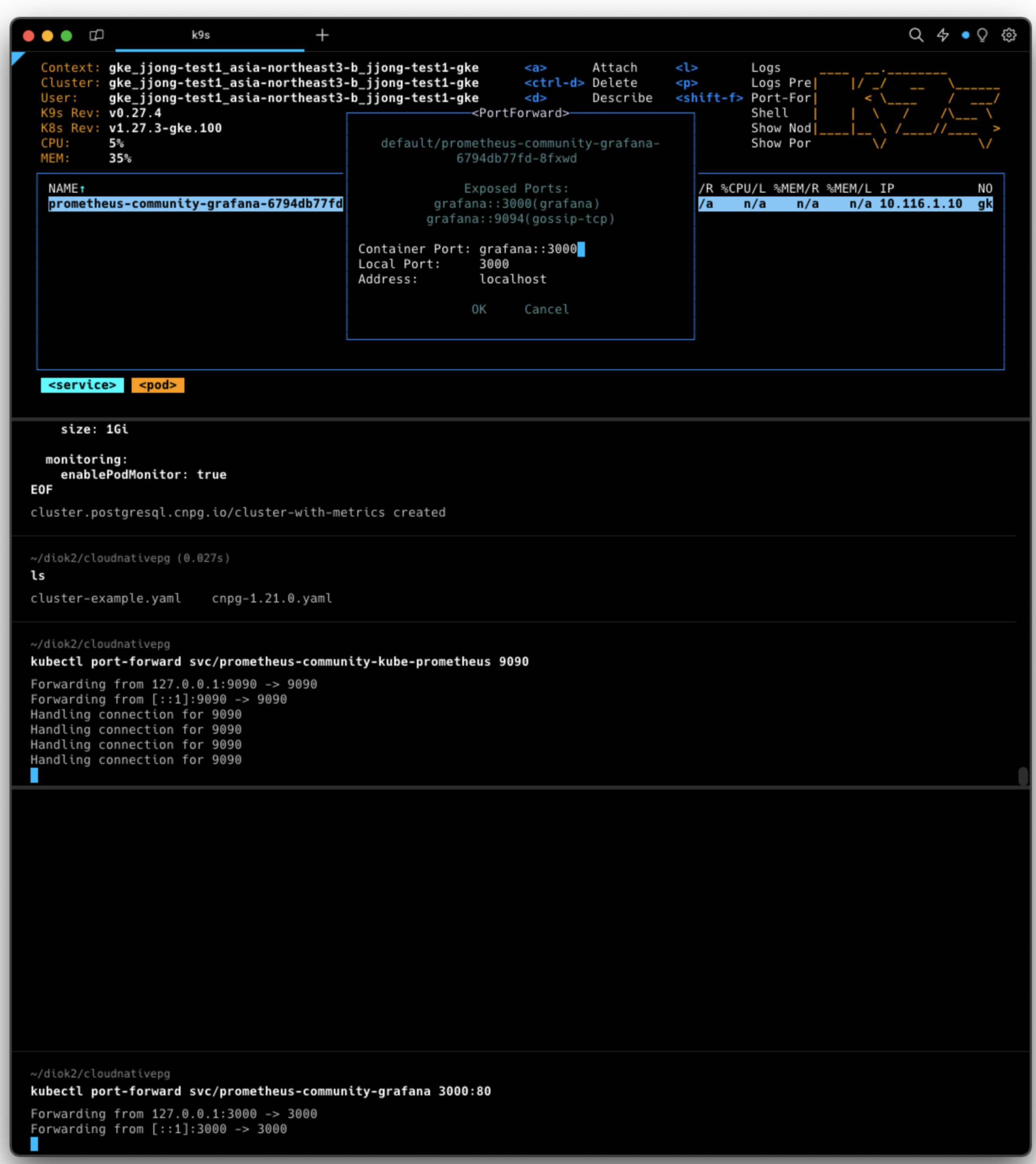

Creating a cluster whose metrics Prometheus can scrape

```shell
kubectl apply -f - <<EOF
---
apiVersion: postgresql.cnpg.io/v1
kind: Cluster
metadata:
  name: cluster-with-metrics
spec:
  instances: 3
  storage:
    size: 1Gi
  monitoring:
    enablePodMonitor: true
EOF
```

If you now list the Cluster objects, you can see one named cluster-with-metrics.

Exposing the service port

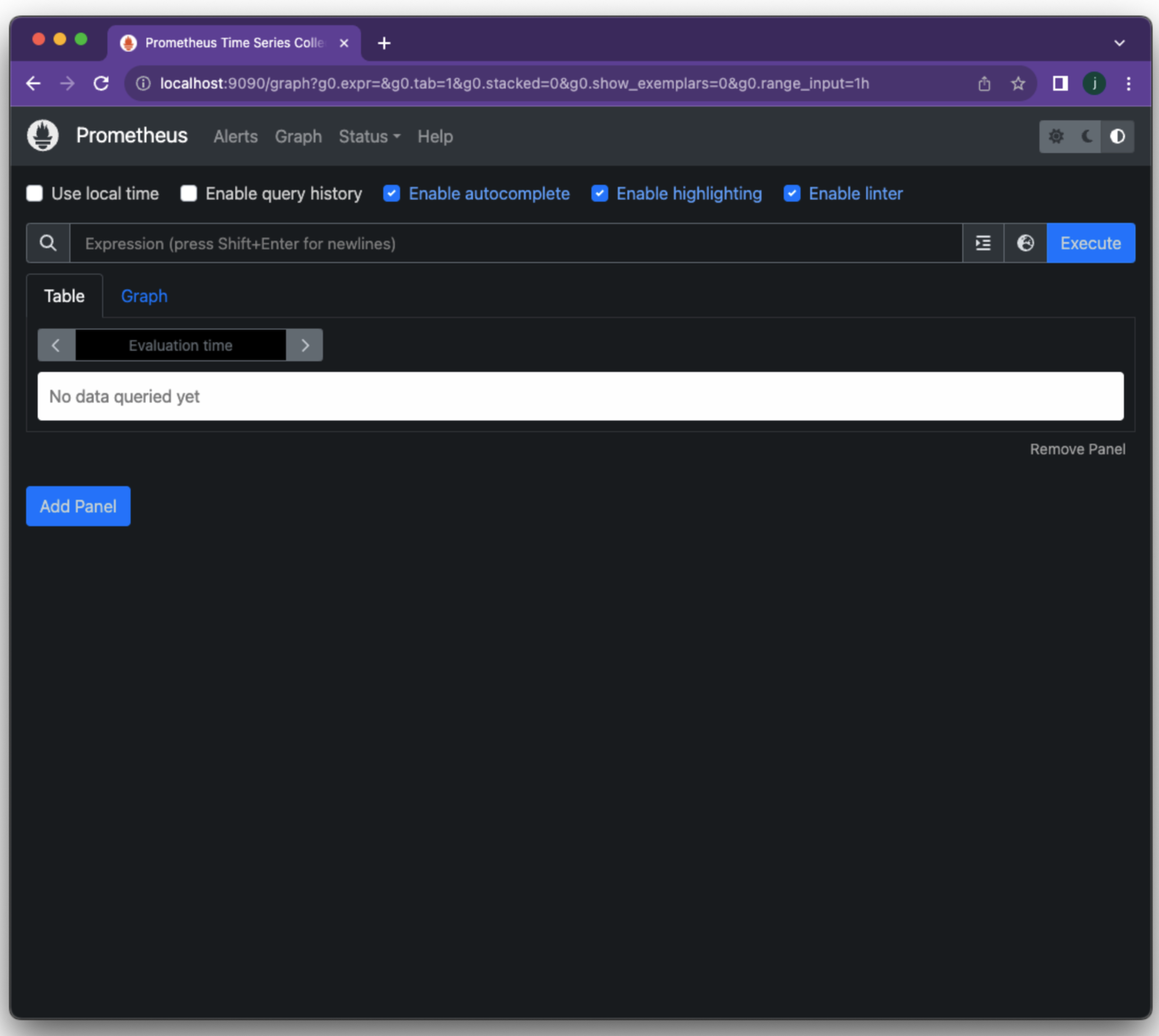

```shell
kubectl port-forward svc/prometheus-community-kube-prometheus 9090
```

At this point, the upper part of the screen shows the ports as 9090→0 and 8080→0, and the lower part shows that 127.0.0.1:9090 → 9090 and [::1]:9090 → 9090 are being forwarded.

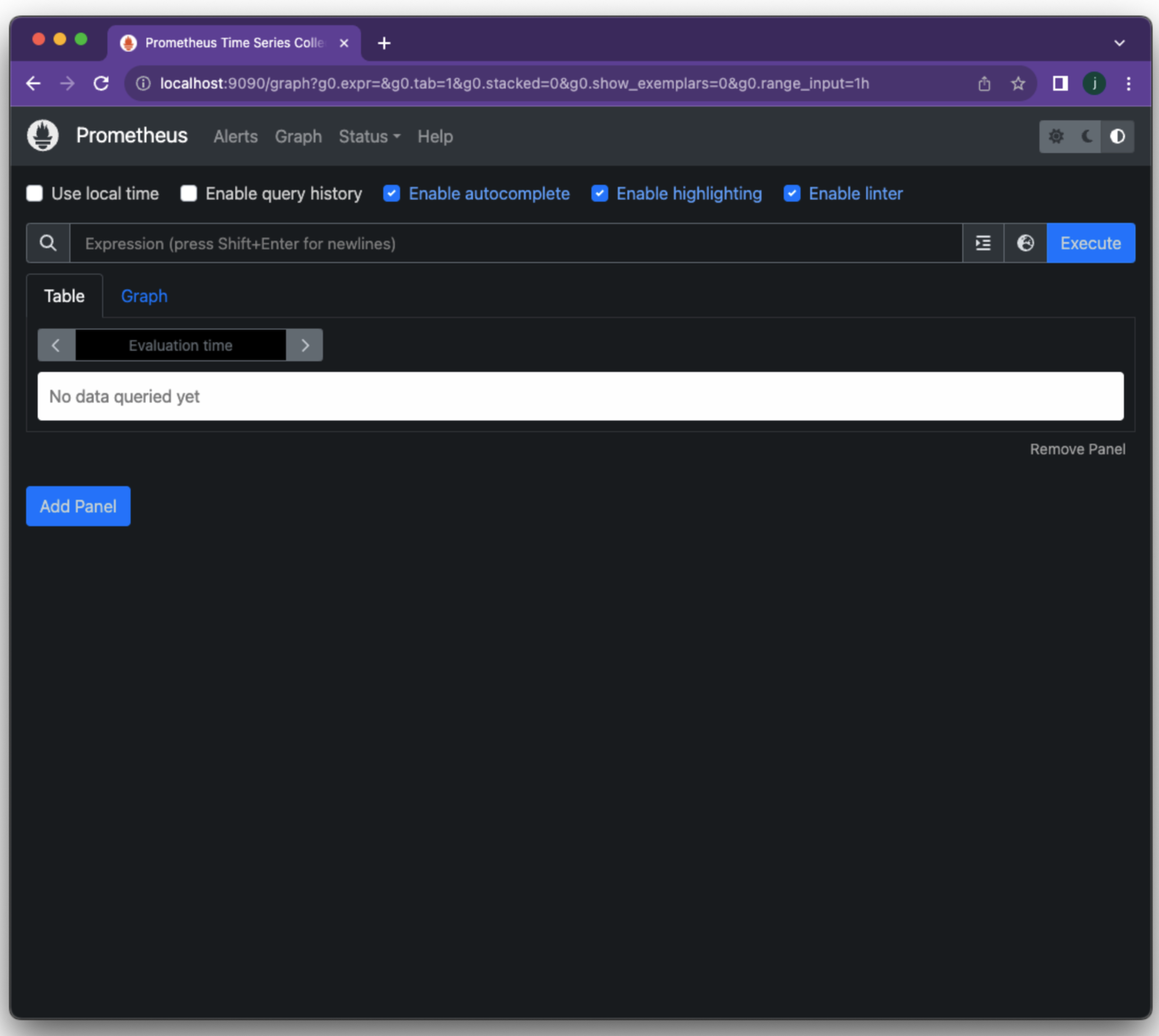

Open localhost:9090 and you get the page below.

Searching in the query box returns a very large number of results.
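The same queries can also be sent to Prometheus's HTTP API (`/api/v1/query`) through the port-forward instead of the search box. Below is a minimal Python sketch using only the standard library. It assumes the port-forward to `localhost:9090` shown above is running. The helper names are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumes `kubectl port-forward svc/prometheus-community-kube-prometheus 9090` is running
PROMETHEUS_URL = "http://localhost:9090"


def query_url(promql: str, base: str = PROMETHEUS_URL) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base}/api/v1/query?{urlencode({'query': promql})}"


def instant_query(promql: str, base: str = PROMETHEUS_URL) -> list:
    """Run an instant query and return the list of result series."""
    with urlopen(query_url(promql, base), timeout=5) as resp:
        body = json.load(resp)
    if body.get("status") != "success":
        raise RuntimeError(body.get("error", "Prometheus query failed"))
    return body["data"]["result"]
```

With the port-forward running, `instant_query("up")` returns one series per scrape target. `up` is Prometheus's built-in target-health metric. Each series has a `metric` label set and a `value` of `[timestamp, "value"]`, where `"1"` means the last scrape succeeded.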

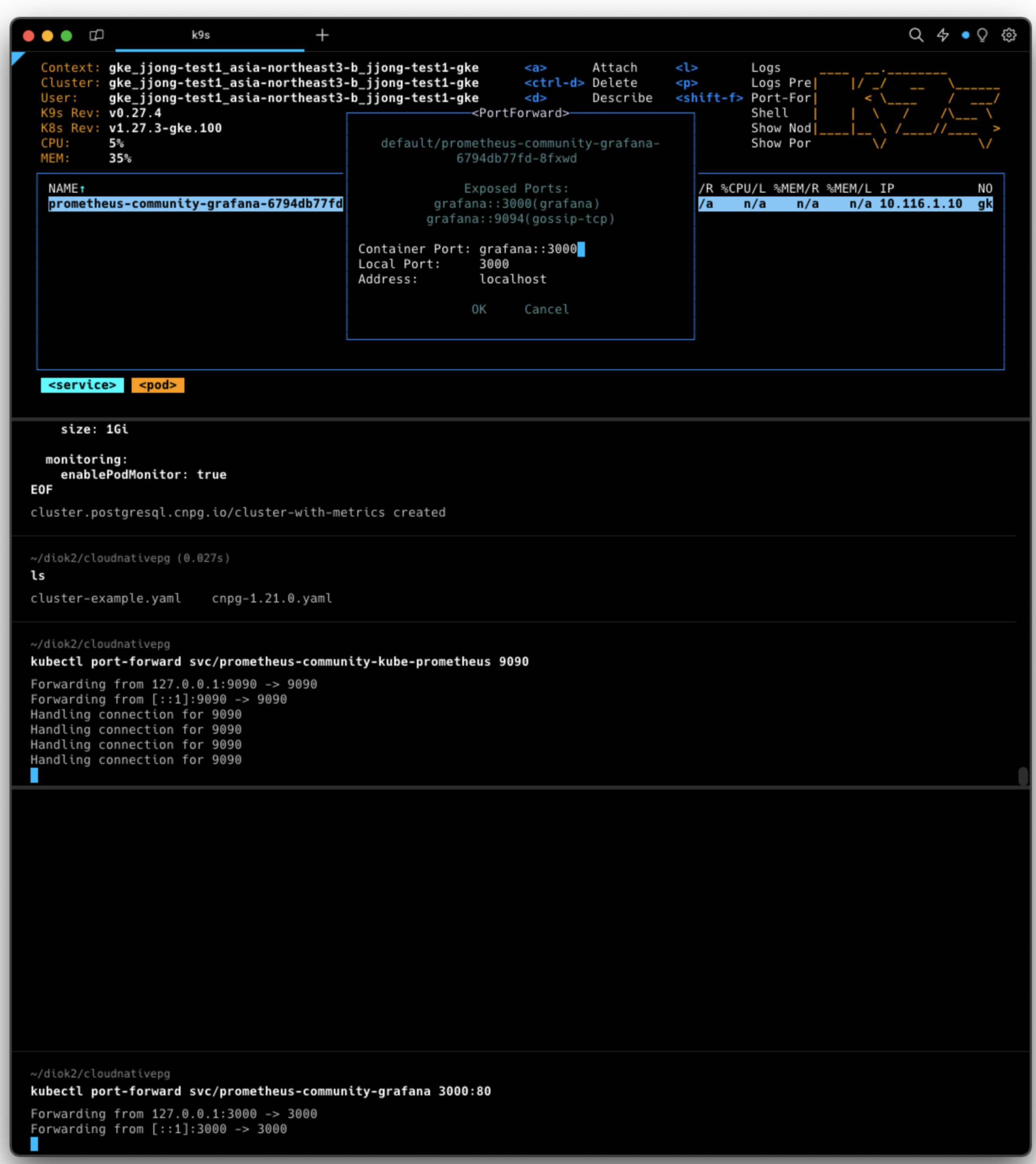

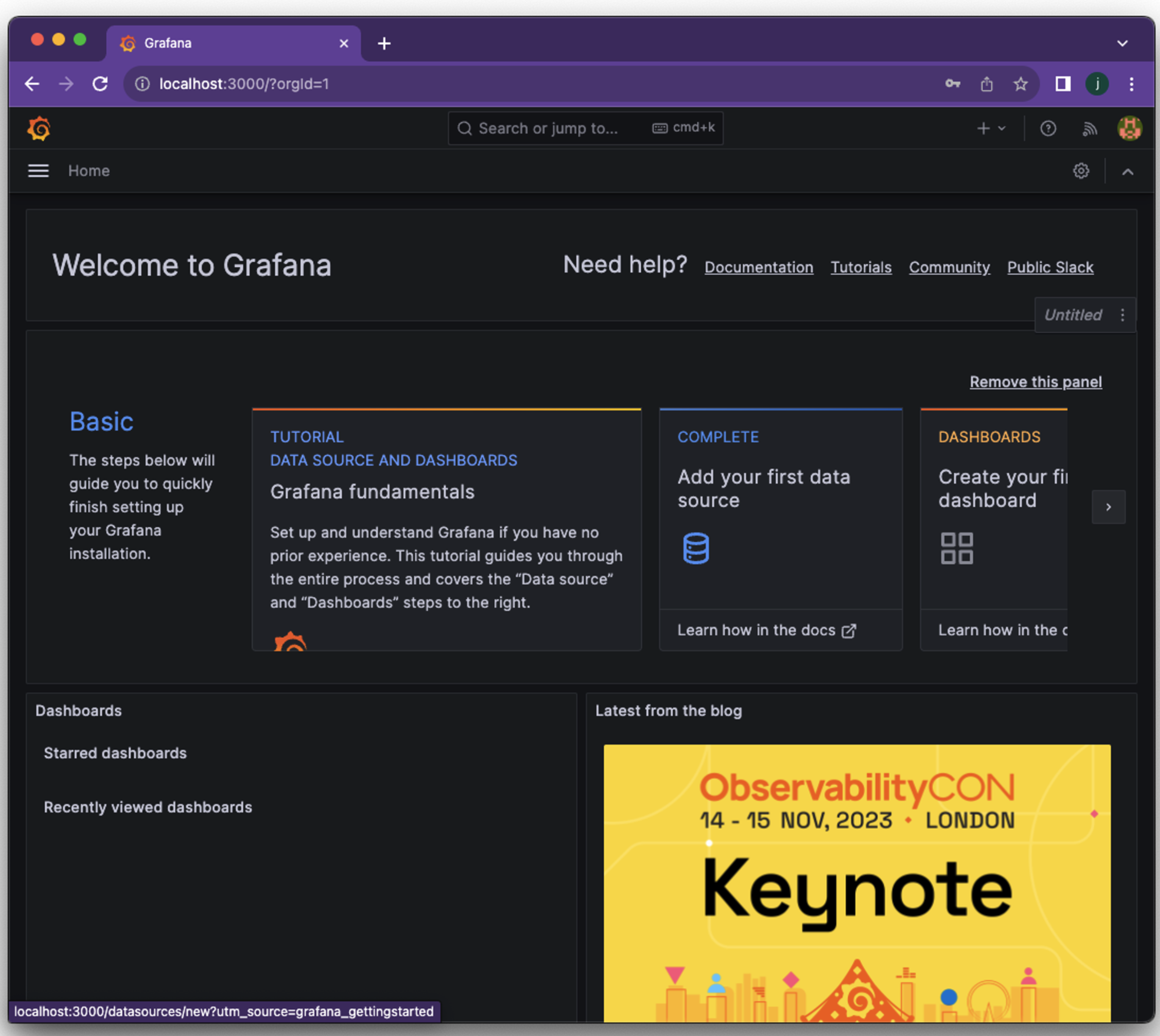

Connecting Grafana

Grafana was installed together with the Prometheus stack. Let's port-forward it.

```shell
kubectl port-forward svc/prometheus-community-grafana 3000:80
```

The lower part of the screen shows the port-forward in progress.

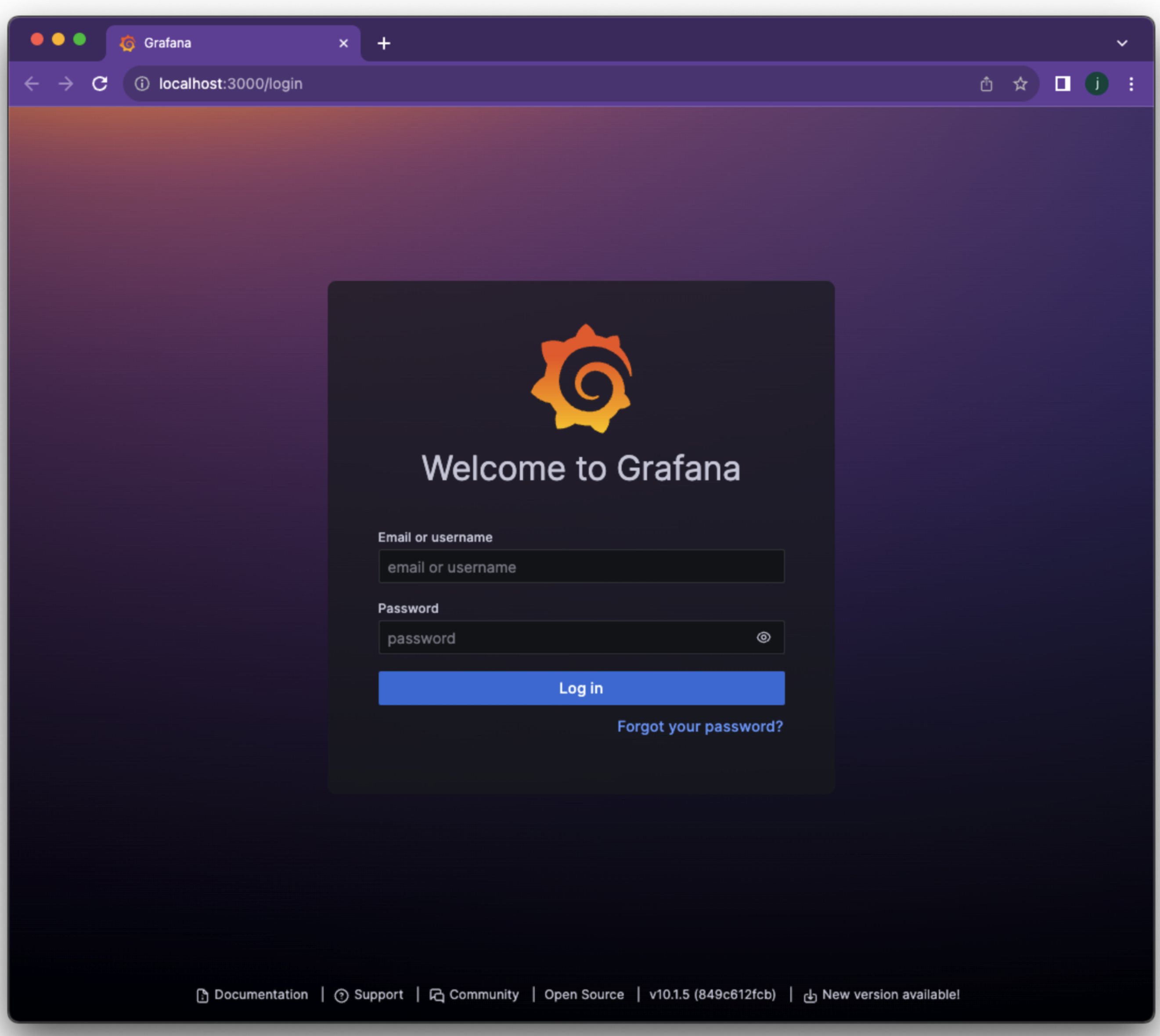

To check the dashboard, open http://localhost:3000.

The login ID is admin and the password is prom-operator.
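If the chart values were changed, the credentials may differ from these defaults. A sketch for reading them back from the cluster, assuming the Grafana subchart stored them in a Secret named `prometheus-community-grafana` (release name plus `-grafana`) under the keys `admin-user` and `admin-password`; `grafana_credentials` is a hypothetical helper name.

```shell
# Hypothetical helper: print the Grafana admin user and password stored in the
# Secret created by the Grafana subchart (default name assumed below).
grafana_credentials() {
  local secret="${1:-prometheus-community-grafana}" user pass
  # Secret values are base64-encoded under .data, so decode them
  user=$(kubectl get secret "$secret" -o jsonpath='{.data.admin-user}' | base64 -d)
  pass=$(kubectl get secret "$secret" -o jsonpath='{.data.admin-password}' | base64 -d)
  printf 'user=%s password=%s\n' "$user" "$pass"
}

# Usage:
#   grafana_credentials
```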

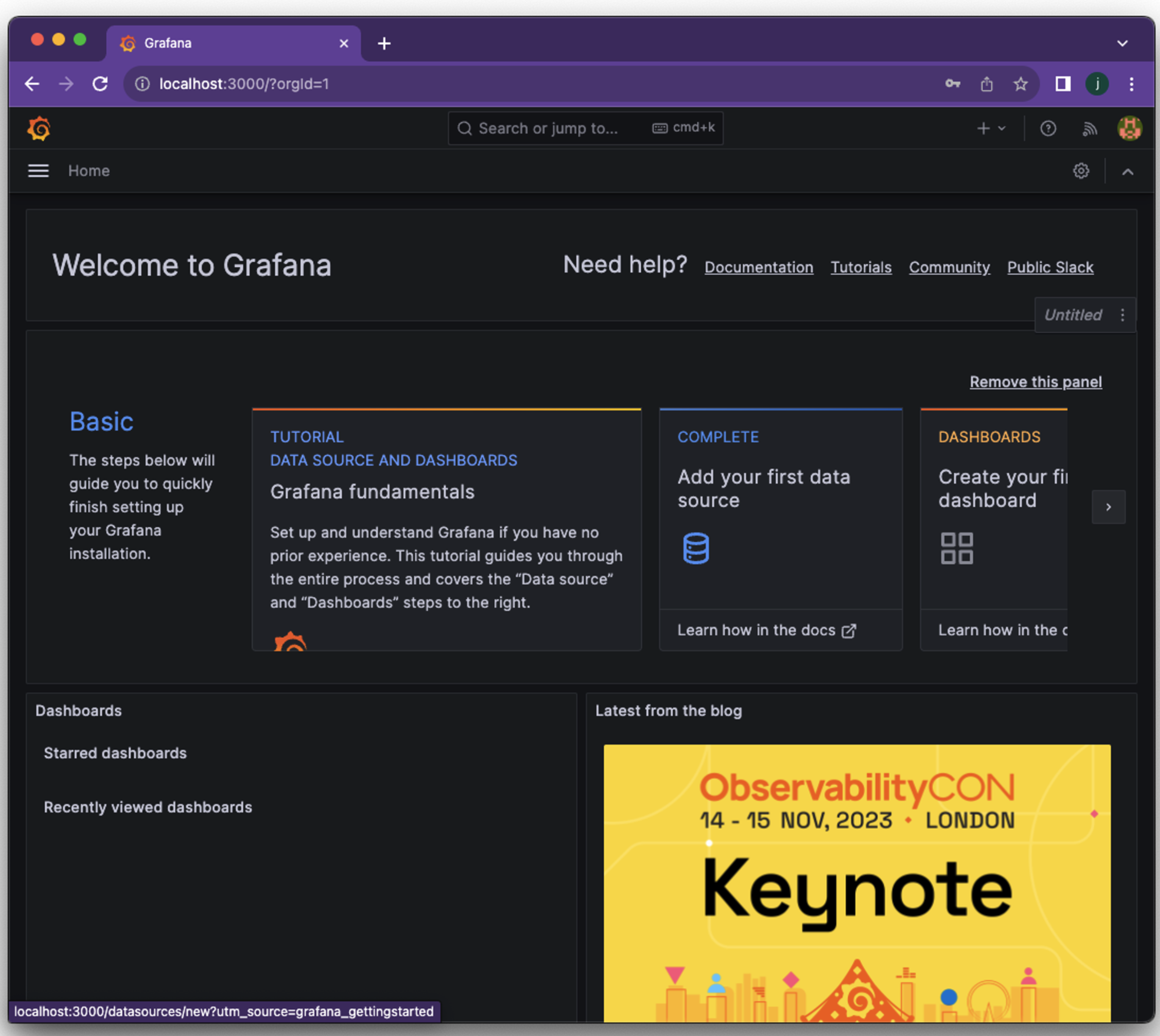

Access complete!

That wraps up the basic installation and setup!

- The default Grafana ID and password are defined in the kube-prometheus-stack Helm chart.

- The steps below are not carried out in this post.

# You can also deploy a PrometheusRule to get alerts.
kubectl apply -f \
  https://raw.githubusercontent.com/cloudnative-pg/cloudnative-pg/main/docs/src/samples/monitoring/prometheusrule.yaml

kubectl get prometheusrules
NAME                  AGE
cnpg-default-alerts   3m27s

# You can deploy the Grafana dashboard.
kubectl apply -f \
  https://raw.githubusercontent.com/cloudnative-pg/cloudnative-pg/main/docs/src/samples/monitoring/grafana-configmap.yaml

# Then open the CloudNativePG dashboard.
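To see which alerts the PrometheusRule actually defines, a small sketch like this walks its rule groups with kubectl jsonpath. It assumes the rule name `cnpg-default-alerts` from the output above; `cnpg_alert_names` is a hypothetical helper, and rules without an `alert` field (recording rules) produce empty lines that are filtered out.

```shell
# Hypothetical helper: list the alert names defined in a PrometheusRule.
cnpg_alert_names() {
  # One line per rule; recording rules have no .alert and yield empty lines
  kubectl get prometheusrule "${1:-cnpg-default-alerts}" \
    -o jsonpath='{range .spec.groups[*].rules[*]}{.alert}{"\n"}{end}' |
    sed '/^$/d'
}

# Usage:
#   cnpg_alert_names
```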

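Something odd after finishing

After writing up the lab, I listed the pods and services before shutting everything down, and noticed:

- the services for the cluster-example cluster are named with the suffixes r, ro and rw,

- and the services for the cluster-with-metrics cluster are also named with the suffixes r, ro and rw.

It looks like the intent is to match pod and service names, but it felt a bit odd, so I jotted it down.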
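The suffixes do follow a fixed pattern: for every Cluster, CloudNativePG creates `<cluster>-rw` (primary only), `<cluster>-ro` (hot standbys only) and `<cluster>-r` (any instance), which matches the endpoint counts of 1, 2 and 3 listed earlier for `cluster-example`. A sketch to check this for any cluster, assuming bash and kubectl access; `cnpg_service_endpoints` is a hypothetical helper name.

```shell
# Hypothetical helper: for each CloudNativePG Service of a cluster, print how
# many pod IPs sit behind it (rw: primary, ro: standbys, r: all instances).
cnpg_service_endpoints() {
  local cluster="$1" suffix ips count
  for suffix in rw ro r; do
    # Space-separated IPs of the ready endpoints behind the Service
    ips=$(kubectl get endpoints "${cluster}-${suffix}" \
      -o jsonpath='{.subsets[*].addresses[*].ip}')
    count=$(printf '%s\n' "$ips" | wc -w | tr -d ' ')
    printf '%s-%s\t%s\n' "$cluster" "$suffix" "$count"
  done
}

# Usage:
#   cnpg_service_endpoints cluster-example
#   cnpg_service_endpoints cluster-with-metrics
```

With three healthy instances, both clusters would be expected to report 1, 2 and 3 for rw, ro and r respectively.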

